An 11-month technical case study demonstrates Azure's growing AI maturity through progressive implementation of retrieval-augmented generation, Semantic Kernel orchestration, and Kubernetes-native AI deployments.

Developer Roy Kim's documented projects over the past year trace how Azure's AI tooling has matured, from a basic retrieval-augmented generation chatbot to Kubernetes-hosted models. The progression shows how Microsoft is positioning Azure in the enterprise AI market and gives technical teams implementation patterns they can reuse.

What Changed: Architectural Progression

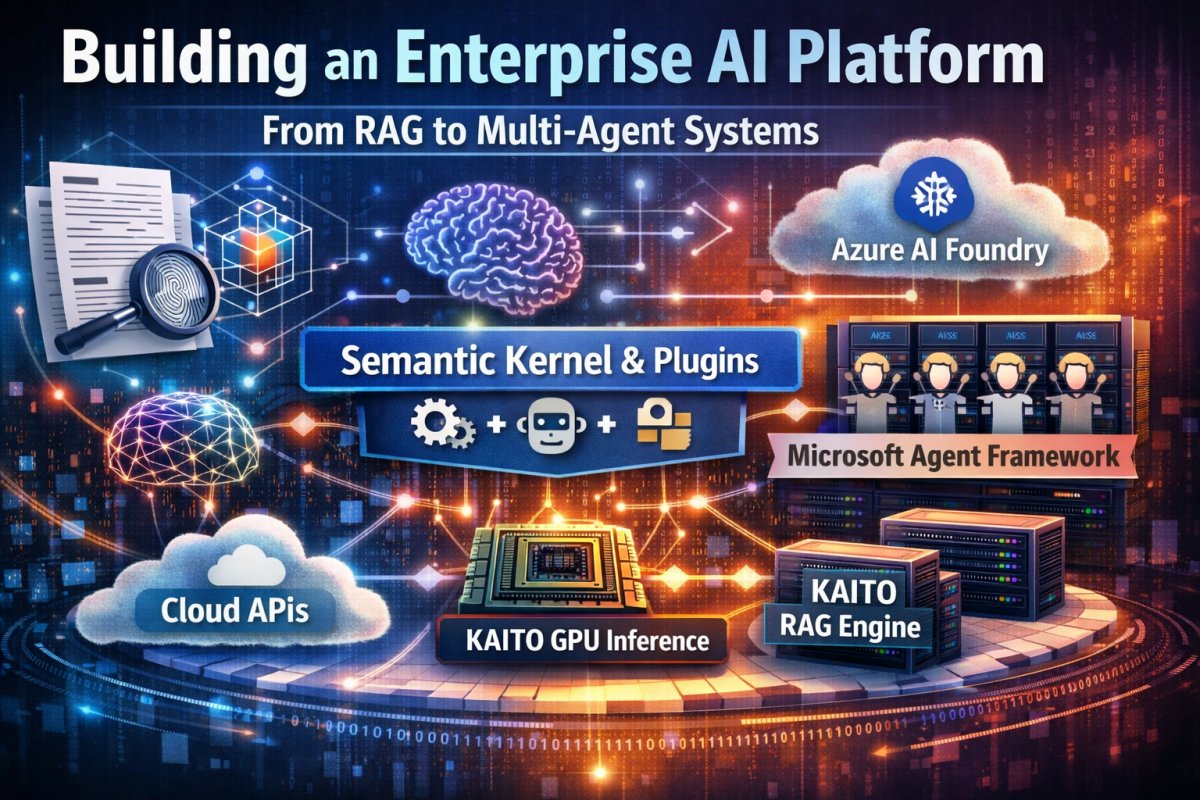

Kim's projects evolved through four distinct phases:

- Basic RAG Implementation (March 2025): Starting with a retail chatbot using Azure OpenAI embeddings and Azure AI Search, establishing foundational retrieval-augmented generation patterns with CSV data

- Orchestration Maturity (August 2025): Adoption of Semantic Kernel for plugin-based AI orchestration, integrating blob storage and PDF processing pipelines

- Agent Specialization (September 2025): Parallel experimentation with native Azure AI Foundry agents versus Semantic Kernel-customized implementations

- Multi-Cloud Deployment (January-February 2026): Kubernetes-native implementation using KAITO on AKS with mixed model backends (Azure-hosted GPT-5.2, open-source Phi-4-mini)
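
The first phase's retrieval-augmented generation pattern (embed the CSV rows, retrieve the closest ones for a query, then build a grounded prompt) can be sketched roughly as follows. This is a minimal illustration, not Kim's implementation: the `embed` function is a toy bag-of-words stand-in for an Azure OpenAI embedding model, the in-memory list stands in for an Azure AI Search index, and the CSV rows and all function names are hypothetical.

```python
import csv
import io
import math
from collections import Counter

# Hypothetical product rows standing in for the retail chatbot's CSV data.
CSV_TEXT = """sku,description
A100,red cotton t-shirt for summer
B200,waterproof hiking boots
C300,blue denim jacket with lining
"""


def embed(text):
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[token] * b[token] for token in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def build_index(csv_text):
    """Embed every CSV row once; a real system would store these in a search index."""
    rows = csv.DictReader(io.StringIO(csv_text))
    return [(row, embed(row["description"])) for row in rows]


def retrieve(index, query, k=2):
    """Return the k rows whose vectors are most similar to the query vector."""
    query_vector = embed(query)
    ranked = sorted(index, key=lambda item: cosine(query_vector, item[1]), reverse=True)
    return [row for row, _ in ranked[:k]]


def build_prompt(query, docs):
    """Assemble the grounded prompt that would be sent to the chat model."""
    context = "\n".join(f"- {d['sku']}: {d['description']}" for d in docs)
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


index = build_index(CSV_TEXT)
question = "waterproof boots for hiking"
top = retrieve(index, question, k=1)
prompt = build_prompt(question, top)
```

In a real deployment the similarity ranking would be delegated to the search service and the prompt sent to a chat model; only the shape of the pipeline carries over from this sketch.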

Provider Comparison: Azure's Strategic Positioning

| Capability | Azure Implementation | Competitive Differentiation |
|---|---|---|
| Vector Search | Azure AI Search with semantic ranking | Integrated pipeline reduces ETL complexity vs. standalone vector DBs |
| Model Hosting | Azure AI Foundry vs KAITO on AKS | Hybrid approach bridges proprietary and OSS models |
| Orchestration | Semantic Kernel plugins | Deeper Azure service integration than LangChain |
| Compute Flexibility | AKS GPU nodepools via KAITO | Avoids vendor lock-in for GPU workloads |
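
To make the plugin-based orchestration idea in the table concrete, here is a small sketch in plain Python. It deliberately does not use the Semantic Kernel API: `PluginRegistry`, the function names, and the fake document store are all hypothetical, chosen only to show how named plugin functions (storage access, PDF text processing) can be registered once and then chained by an orchestrator.

```python
from typing import Callable, Dict


class PluginRegistry:
    """Minimal stand-in for a plugin-based orchestrator (not the Semantic Kernel API)."""

    def __init__(self) -> None:
        self._functions: Dict[str, Callable[[str], str]] = {}

    def register(self, name: str) -> Callable[[Callable[[str], str]], Callable[[str], str]]:
        """Decorator that exposes a function to the orchestrator under a name."""
        def decorator(fn: Callable[[str], str]) -> Callable[[str], str]:
            self._functions[name] = fn
            return fn
        return decorator

    def invoke(self, name: str, argument: str) -> str:
        """Look up a registered function by name and call it."""
        if name not in self._functions:
            raise KeyError(f"no plugin function named {name!r}")
        return self._functions[name](argument)


registry = PluginRegistry()


@registry.register("storage.read_document")
def read_document(path: str) -> str:
    # Hypothetical: a real plugin would download and parse a PDF from blob storage.
    fake_store = {"reports/q1.pdf": "Q1 revenue grew. Costs were flat."}
    return fake_store[path]


@registry.register("text.first_sentence")
def first_sentence(text: str) -> str:
    # Trivial placeholder for a model-backed summarization step.
    return text.split(".")[0].strip() + "."


def run_pipeline(path: str) -> str:
    """Chain two plugin calls: fetch the document text, then condense it."""
    text = registry.invoke("storage.read_document", path)
    return registry.invoke("text.first_sentence", text)


summary = run_pipeline("reports/q1.pdf")
```

The point of the pattern is that the orchestrator only knows function names, so a storage or document-processing step can be swapped without touching the pipeline logic.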

Business Impact Analysis

- Migration Pathways: The progression demonstrates viable onboarding paths from simple RAG to complex agent systems, reducing adoption risk

- Cost Optimization: KAITO's open-source model support on AKS can provide an estimated 30-50% cost savings for high-volume inference workloads

- Operational Continuity: Kubernetes-based deployments enable consistent operational patterns across cloud and on-prem AI workloads

- Vendor Strategy: Azure's hybrid approach (managed services + Kubernetes) offers more architectural flexibility than pure-play AI platforms

Strategic Recommendations

- Use Azure AI Search as the foundation for enterprise RAG implementations due to integrated data pipelines

- Adopt Semantic Kernel for teams requiring complex orchestration across multiple Azure services

- Leverage KAITO on AKS when cost optimization for OSS models is prioritized over managed service convenience

- Implement phased capability adoption as demonstrated in the reference implementation
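
The trade-off in these recommendations, managed services for convenience versus KAITO on AKS when cost optimization for open-source models takes priority, can be expressed as a simple decision rule. The sketch below is illustrative only: the `Workload` fields, the volume threshold, and the backend labels are assumptions made for this example and do not come from the reference implementation.

```python
from dataclasses import dataclass

# Hypothetical cut-off above which self-hosting is assumed to be worth the
# operational overhead; a real team would derive this from its own pricing data.
HIGH_VOLUME_THRESHOLD = 1_000_000


@dataclass(frozen=True)
class Workload:
    name: str
    needs_proprietary_model: bool  # e.g. must use an Azure-hosted GPT model
    monthly_requests: int


def choose_backend(workload: Workload) -> str:
    """Pick a hosting option following the article's recommendations."""
    if workload.needs_proprietary_model:
        # Proprietary models are only available through the managed service.
        return "managed"
    if workload.monthly_requests >= HIGH_VOLUME_THRESHOLD:
        # Open-source model at high volume: cost optimization takes priority.
        return "kaito-on-aks"
    # Low volume: managed convenience outweighs potential savings.
    return "managed"


workloads = [
    Workload("support-chatbot", needs_proprietary_model=True, monthly_requests=5_000_000),
    Workload("log-classifier", needs_proprietary_model=False, monthly_requests=20_000_000),
    Workload("internal-faq", needs_proprietary_model=False, monthly_requests=10_000),
]
decisions = {w.name: choose_backend(w) for w in workloads}
```

A rule this simple will not capture latency, compliance, or GPU availability constraints, but it shows how the recommendations combine into a repeatable choice per workload.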

This journey underscores Azure's strategic positioning as a hybrid AI platform, offering tighter service integration than pure infrastructure providers while maintaining more deployment flexibility than specialized AI vendors. The documented progression provides a template for enterprises navigating the complex cloud AI landscape.

Explore the technical implementations: Azure AI Apps GitHub Repository
