Gabriella Gonzalez argues that while chat interfaces dominate discussions about large language models, they represent merely the starting point rather than the destination. The real power emerges when LLMs generate and respond to structured inputs and outputs, transforming ambiguous prose into clear, actionable interfaces that users actually prefer.

The dominance of chat interfaces in current LLM applications has created a false equivalence between conversational interaction and optimal user experience. Gabriella Gonzalez's post challenges this assumption by identifying three limitations of prose: it takes substantial cognitive effort to parse, it performs poorly on mobile devices where reading long passages is cumbersome, and it is ambiguous in ways that undermine user confidence. These limitations are not inherent to LLMs; they reflect design choices that favor familiar patterns over effective ones.

Structured inputs—buttons, sliders, date pickers, form controls—offer superior user experience precisely because they constrain possibilities and make actions explicit. When a user sees a button labeled "Generate Monthly Report," the path forward is clear. Compare this to a text input where users must articulate their intent through prose, hoping the model interprets their unstated requirements correctly. The cognitive load difference is substantial: prose requires users to both understand their own needs and translate them into language the model will interpret accurately, while structured interfaces surface options and guide users toward successful outcomes.

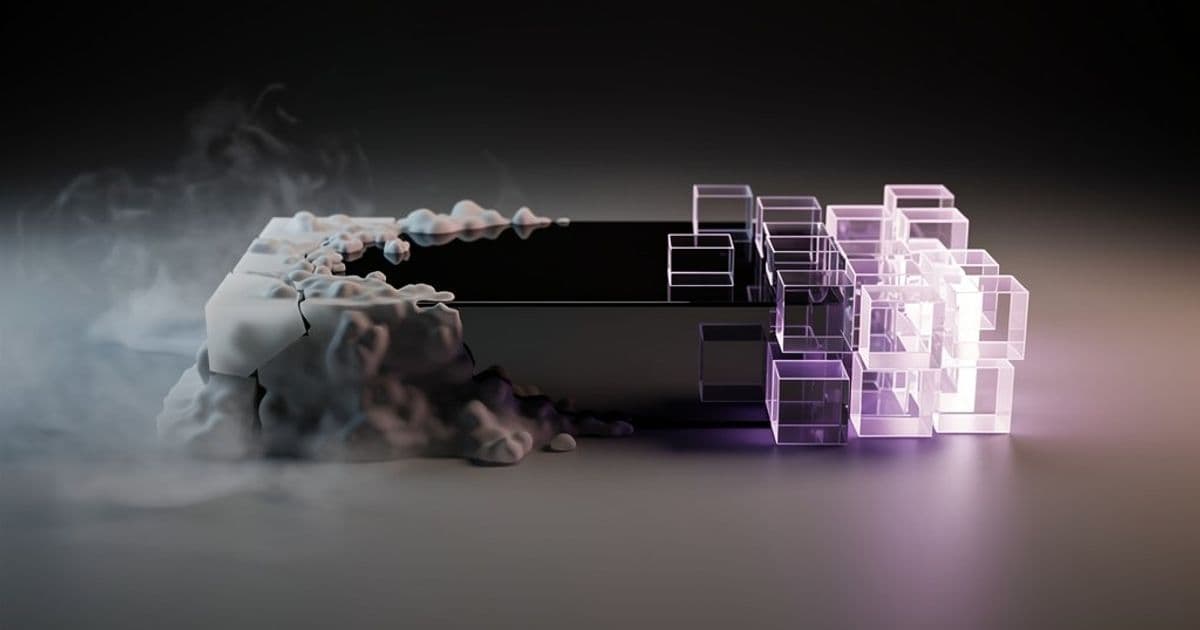

Similarly, structured outputs (graphs, tables, diagrams, dashboards) communicate information more efficiently than paragraphs of text. A well-designed chart can convey trends, outliers, and relationships that would take hundreds of words to describe, and it stays scannable and actionable. Users can also interact with these structures by filtering tables, hovering for details, and adjusting visualizations. This interactivity creates a feedback loop in which the interface responds to user actions without another round of back-and-forth in prose.

The most compelling insight is that LLMs can actually generate these structured interfaces dynamically. Rather than presenting a blank text box, an LLM could analyze a user's domain and generate appropriate form controls. The popup-mcp tool and Grace demonstrate this capability, showing how models can create structured interfaces that users then manipulate. This represents a fundamental shift from conversation to collaboration: the model proposes an interface, the user refines it through structured interaction, and the model responds with increasingly precise outputs.

This approach also addresses the ambiguity problem inherent in prose. When a user requests "a summary of recent performance," the model must guess what metrics matter, what time range defines "recent," and what level of detail constitutes a "summary." A structured interface could present options: date range selectors, metric checkboxes, summary length sliders. The user makes explicit choices, and the model generates exactly what's requested. Confidence increases because expectations are set and met through clear mechanisms.
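To make the contrast concrete, here is a minimal sketch of what the explicit choices behind "a summary of recent performance" might look like once a form has collected them. The metric names, the `SummaryRequest` type, and the `to_prompt` helper are all hypothetical and are not taken from Gonzalez's post or from any particular tool.

```python
from dataclasses import dataclass
from datetime import date
from enum import Enum


class SummaryLength(Enum):
    BRIEF = "brief"
    STANDARD = "standard"
    DETAILED = "detailed"


# Hypothetical metric names; a real application would supply its own.
ALLOWED_METRICS = {"revenue", "churn", "active_users"}


@dataclass(frozen=True)
class SummaryRequest:
    """Choices a user made through a date picker, checkboxes, and a slider."""

    start: date
    end: date
    metrics: tuple[str, ...]
    length: SummaryLength

    def __post_init__(self) -> None:
        # Reject combinations the form should never have allowed.
        if self.start > self.end:
            raise ValueError("start must not be after end")
        if not self.metrics:
            raise ValueError("select at least one metric")
        unknown = set(self.metrics) - ALLOWED_METRICS
        if unknown:
            raise ValueError(f"unknown metrics: {sorted(unknown)}")


def to_prompt(req: SummaryRequest) -> str:
    """Render the explicit choices as an unambiguous instruction for a model."""
    return (
        f"Write a {req.length.value} summary of {', '.join(req.metrics)} "
        f"from {req.start.isoformat()} to {req.end.isoformat()}."
    )
```

Nothing is left for the model to guess here: the time range, the metrics, and the level of detail all arrive as validated values rather than as words to interpret.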

Mobile usability benefits dramatically from this shift. Reading a 500-word explanation on a phone screen is tedious; scanning a table or interacting with a compact visualization is efficient. Structured interfaces can be designed with touch interactions in mind—swipe gestures, tap targets, responsive layouts—while prose interfaces remain stubbornly linear and text-heavy.

The transition from chat to structured interfaces isn't about abandoning natural language entirely. Instead, it's about using prose where it excels—initial intent expression, clarification, creative exploration—then quickly moving to structured interaction for the bulk of the workflow. A user might start with "I need to analyze our Q4 sales data" and the model could respond with a structured interface offering date ranges, regional filters, metric selections, and visualization types. The prose interaction serves as a gateway, not the destination.

This vision requires LLMs that can not only generate text but also understand and create structured data formats, design user interfaces, and maintain state across interactions. It also demands that developers think beyond the chat window as the default interface pattern. The tools exist—models can output JSON, XML, or other structured formats; they can generate HTML forms and interactive components; they can consume structured inputs. What's missing is the design paradigm shift that recognizes prose as a transitional medium rather than the primary interface.
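As a rough illustration of a model emitting a structured format that an application then consumes, the sketch below parses a JSON form description and rejects anything outside a small allowlist before it would be rendered. The schema (a `fields` list with `name` and `control` keys) and the control vocabulary are assumptions made up for this example; they do not describe popup-mcp, Grace, or any real model API.

```python
import json

# Hypothetical control vocabulary, invented for this sketch.
ALLOWED_CONTROLS = {"date_range", "checkbox_group", "slider", "select"}


def parse_form_spec(raw: str) -> list[dict]:
    """Parse a model-emitted JSON form spec and reject anything unexpected."""
    spec = json.loads(raw)  # raises json.JSONDecodeError (a ValueError) on bad JSON
    if not isinstance(spec, dict):
        raise ValueError("form spec must be a JSON object")
    fields = spec.get("fields")
    if not isinstance(fields, list) or not fields:
        raise ValueError("form spec needs a non-empty 'fields' list")

    seen: set[str] = set()
    for field in fields:
        if not isinstance(field, dict):
            raise ValueError("each field must be a JSON object")
        name, control = field.get("name"), field.get("control")
        if not isinstance(name, str) or not name:
            raise ValueError("each field needs a non-empty string 'name'")
        if name in seen:
            raise ValueError(f"duplicate field name: {name}")
        if not isinstance(control, str) or control not in ALLOWED_CONTROLS:
            raise ValueError(f"unsupported control for {name!r}: {control!r}")
        seen.add(name)
    return fields
```

Treating the model's output as untrusted input and validating it against a fixed vocabulary is one possible design choice; it keeps the rendering layer small and makes the model's proposals testable like any other data.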

The implications extend beyond user experience. Structured interfaces enable automation, analytics, and integration in ways that conversational logs cannot. A table of results can be exported, queried, and visualized. Form submissions can trigger workflows. Interface components can be tested, measured, and optimized. The entire software engineering toolkit becomes applicable again, rather than treating LLM interactions as opaque black boxes of text generation.
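The point about exporting and querying can be sketched with nothing but the standard library. The example below assumes the structured output is a list of row dictionaries sharing the same keys; the helper names `to_csv` and `filter_rows` are hypothetical.

```python
import csv
import io


def to_csv(rows: list[dict]) -> str:
    """Serialize a table of results (dicts sharing the same keys) as CSV text."""
    if not rows:
        return ""
    buffer = io.StringIO()
    writer = csv.DictWriter(
        buffer, fieldnames=list(rows[0].keys()), lineterminator="\n"
    )
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


def filter_rows(rows: list[dict], column: str, minimum: float) -> list[dict]:
    """Keep only the rows whose value in `column` is at least `minimum`."""
    return [row for row in rows if row[column] >= minimum]
```

Because the result is ordinary data, both functions can be covered by unit tests, which is hard to do for a paragraph of generated prose.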

Gonzalez's argument ultimately suggests that the current chat-centric approach is an intermediate stage in LLM interface evolution. Just as early computing moved from command lines to graphical interfaces, LLM interaction is expected to move from prose conversation to structured, interactive experiences. The models are capable; the challenge is recognizing that users generally prefer clear, structured interactions over ambiguous prose, and designing interfaces accordingly.
