A veteran engineer argues that current AI coding practices create unsustainable technical debt and proposes a 'context layer' to dynamically link business logic with code generation.

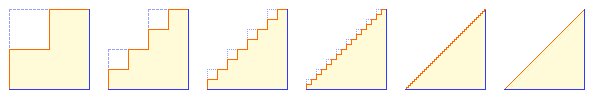

For engineers embracing AI coding assistants, a fundamental tension persists: the allure of rapid prototyping clashes with the precision required for maintainable systems. Veteran engineer Yagmin, with over two decades in startups, articulates this dilemma through a vivid analogy—the 'magic ruler' paradox. Imagine a tool that constructs buildings instantly by thought, but subtly distorts measurements with each use. While effective for simple structures, this inherent tolerance flaw makes complex architectures unstable despite exhaustive error-correction efforts.

This metaphor exposes the core limitation of 'vibe coding'—the practice of delegating code generation, refactoring, and testing to AI agents without direct oversight. Though capable of producing functional output, the approach accumulates 'uncanny valley technical debt' where code technically works but remains fundamentally misaligned with system requirements. As Yagmin observes: 'I wouldn't want to vibe code anything I might want to extend'.

Contrasting this is Spec-Driven Development (SDD), where engineers meticulously define requirements before generating code. SDD solves the tolerance problem through human oversight but introduces 'documentation debt'. Contextual details—business rules, architecture constraints, user flows—pile up in Markdown files that decay without structural links to actual code. Worse, SDD duplicates organizational effort: product teams define requirements that engineers must manually reconstruct into LLM prompts, creating process friction.

The crux lies in what Yagmin terms the 'process gap': critical decisions made during stakeholder meetings become disconnected from implementation. When implementing features like dark mode across a complex codebase, engineers spend days reconstructing context already established in meetings. This bifurcation between planning and execution wastes cognitive resources despite LLM assistance.

Yagmin's solution? A context layer—a shared abstraction linking business logic directly to code artifacts. This dynamic interface would:

- Be editable by both humans and LLMs

- Maintain bidirectional synchronization between requirements and implementation

- Eliminate repetitive 'context engineering' in prompts

- Serve as a living organizational knowledge base
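
To make the properties above concrete, here is a minimal sketch of what such a layer might look like as a data structure. It is not Yagmin's design, which the article does not describe; every name here (`ContextLayer`, `Requirement`, `link`, `stale_links`, `prompt_context`) is hypothetical. The sketch assumes requirements are plain text with a revision counter, and that "synchronization" means detecting when either side of a requirement-to-file link has changed since the link was last confirmed.

```python
import hashlib
from dataclasses import dataclass, field


@dataclass
class Requirement:
    """A business rule or decision, e.g. captured from a stakeholder meeting."""
    req_id: str
    text: str
    revision: int = 1


@dataclass
class ContextLayer:
    """Hypothetical store linking requirements to code artifacts (file paths)."""
    requirements: dict = field(default_factory=dict)
    # (req_id, path) -> (requirement revision, code digest) at last sync
    links: dict = field(default_factory=dict)

    @staticmethod
    def _digest(source: str) -> str:
        return hashlib.sha256(source.encode("utf-8")).hexdigest()

    def add_requirement(self, req_id: str, text: str) -> None:
        self.requirements[req_id] = Requirement(req_id, text)

    def edit_requirement(self, req_id: str, text: str) -> None:
        # Editable by a human or an LLM; each edit bumps the revision.
        req = self.requirements[req_id]
        req.text = text
        req.revision += 1

    def link(self, req_id: str, path: str, source: str) -> None:
        # Record that `path` implements `req_id` as of the current state of both.
        req = self.requirements[req_id]
        self.links[(req_id, path)] = (req.revision, self._digest(source))

    def requirements_for(self, path: str) -> list:
        # Code -> requirements direction.
        return sorted(r for (r, p) in self.links if p == path)

    def artifacts_for(self, req_id: str) -> list:
        # Requirements -> code direction.
        return sorted(p for (r, p) in self.links if r == req_id)

    def stale_links(self, current_sources: dict) -> list:
        # A link is stale if the requirement or the code changed since last sync.
        stale = []
        for (req_id, path), (rev, digest) in self.links.items():
            req_changed = self.requirements[req_id].revision != rev
            code_changed = self._digest(current_sources.get(path, "")) != digest
            if req_changed or code_changed:
                stale.append((req_id, path))
        return sorted(stale)

    def prompt_context(self, path: str) -> str:
        # Assemble the linked requirements for a file, so they need not be
        # retyped into each prompt.
        return "\n".join(
            f"[{r}] {self.requirements[r].text}" for r in self.requirements_for(path)
        )
```

In this sketch, editing a requirement after a meeting would cause `stale_links` to flag every file linked to it, and `prompt_context` would supply the current wording to whatever agent is asked to update those files. A real system would need persistence, finer-grained links than whole files, and some way to confirm that regenerated code actually satisfies the requirement, none of which is attempted here.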

Such a layer would enable true 'process engineering', integrating LLMs throughout development rather than only at the final code-generation step. Stakeholder decisions could directly populate the context layer, preserving institutional knowledge and keeping implementation aligned with intent. Technical specifications would become living artifacts rather than static documents.

Historically, similar leaps in abstraction have reshaped development: scripting languages abstracted away manual memory management, freeing engineers to focus on higher-order problems. In Yagmin's view, today's tools already contain the components. User stories, architectural diagrams, and validation rules need only be dynamically coupled with code repositories, and he argues that modern LLMs are capable of maintaining those links, changing how organizations evolve software.

Yagmin hints at an active proof-of-concept, positioning this as the next evolutionary step beyond today's fragmented AI coding workflows. For engineering leaders, the implication is clear: solving AI's tolerance flaw requires architectural innovation, not just better prompts.
