Cloudflare's new Local Uploads feature for R2 object storage reduces cross-region write latency by up to 75% through edge-based writes with background replication.

Cloudflare has launched Local Uploads for R2 in open beta. The feature improves write performance for globally distributed users, reducing cross-region write latency by up to 75%. Object data is written close to the client and then asynchronously replicated to the bucket's primary region, which avoids much of the latency penalty of uploading to a distant region.

How Local Uploads Works

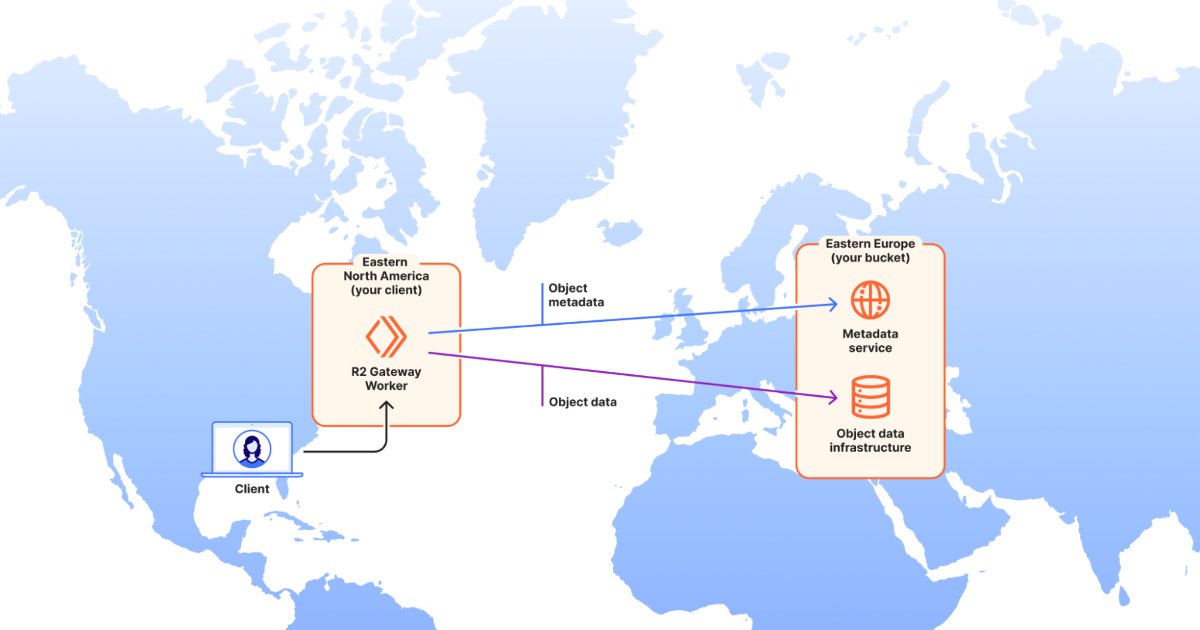

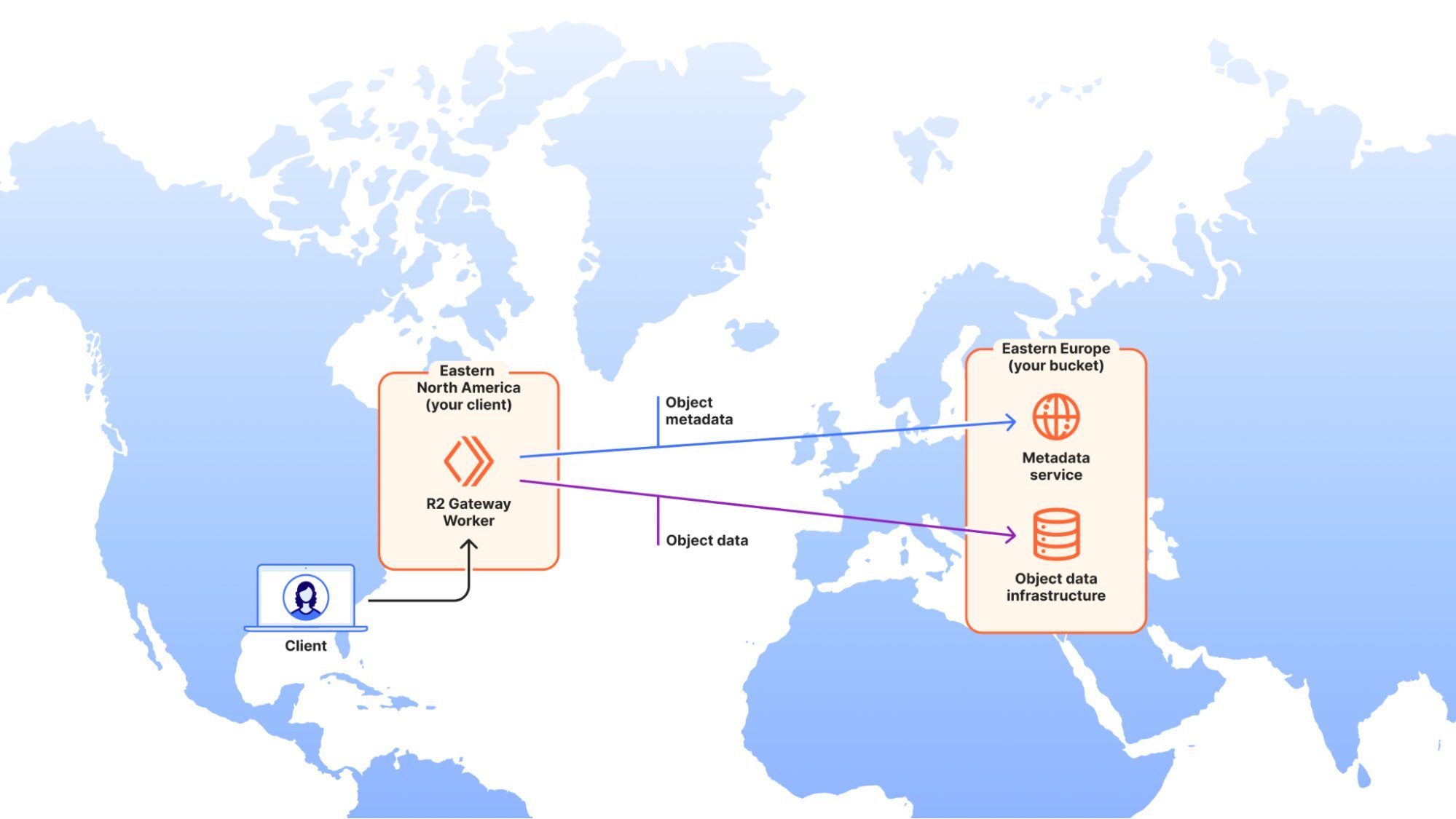

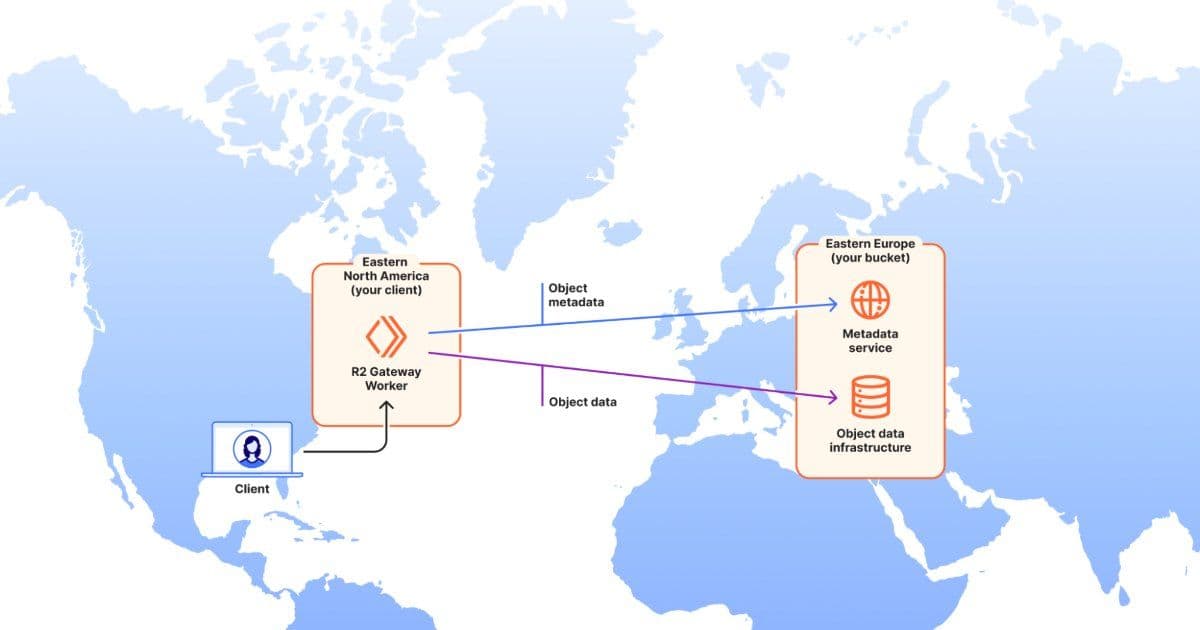

When enabled, Local Uploads changes how R2 handles write requests. Instead of routing all uploads to the bucket's primary region, the system writes object data to storage infrastructure in the client's region while simultaneously publishing object metadata to the bucket's designated location. Cloudflare manages the background replication process using queued tasks and automatic retries to ensure data durability and reliability.

The key property is that the object becomes accessible as soon as the initial write completes. According to Cloudflare's engineering team, there is no waiting period for background replication to finish before the object can be read. This is achieved by decoupling physical storage from logical metadata and building a global metadata service on Durable Objects, a Cloudflare Workers primitive that provides strongly consistent state coordination across distributed locations.
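
The decoupling described above can be illustrated with a toy model. This is a minimal sketch, not Cloudflare's implementation: `RegionStore`, `MetadataService`, `Bucket`, and the region names are all hypothetical, and plain in-memory dictionaries stand in for both the storage and the metadata layer.

```python
from dataclasses import dataclass, field


@dataclass
class RegionStore:
    """Hypothetical stand-in for the object storage in one region."""
    name: str
    blobs: dict[str, bytes] = field(default_factory=dict)


@dataclass
class MetadataService:
    """Hypothetical stand-in for the global metadata layer.

    Maps each object key to the region that currently holds its bytes.
    """
    locations: dict[str, str] = field(default_factory=dict)


class Bucket:
    def __init__(self, primary: str, regions: list[str]) -> None:
        self.primary = primary
        self.stores = {r: RegionStore(r) for r in regions}
        self.metadata = MetadataService()
        self.pending: list[str] = []  # keys awaiting background replication

    def put(self, key: str, data: bytes, client_region: str) -> None:
        # Write the bytes in the client's region, not the primary region.
        self.stores[client_region].blobs[key] = data
        # Publish metadata so readers can locate the object right away.
        self.metadata.locations[key] = client_region
        if client_region != self.primary:
            self.pending.append(key)

    def get(self, key: str) -> bytes:
        # Reads consult metadata first, then fetch from wherever the bytes live.
        region = self.metadata.locations[key]
        return self.stores[region].blobs[key]


bucket = Bucket(primary="eu", regions=["eu", "apac"])
bucket.put("report.pdf", b"hello", client_region="apac")
data = bucket.get("report.pdf")  # readable before any replication has run
```

Because reads go through the metadata lookup, the object is readable while it still exists only in the uploader's region; the key simply stays in `pending` until a background job copies it to the primary region.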

Performance Impact

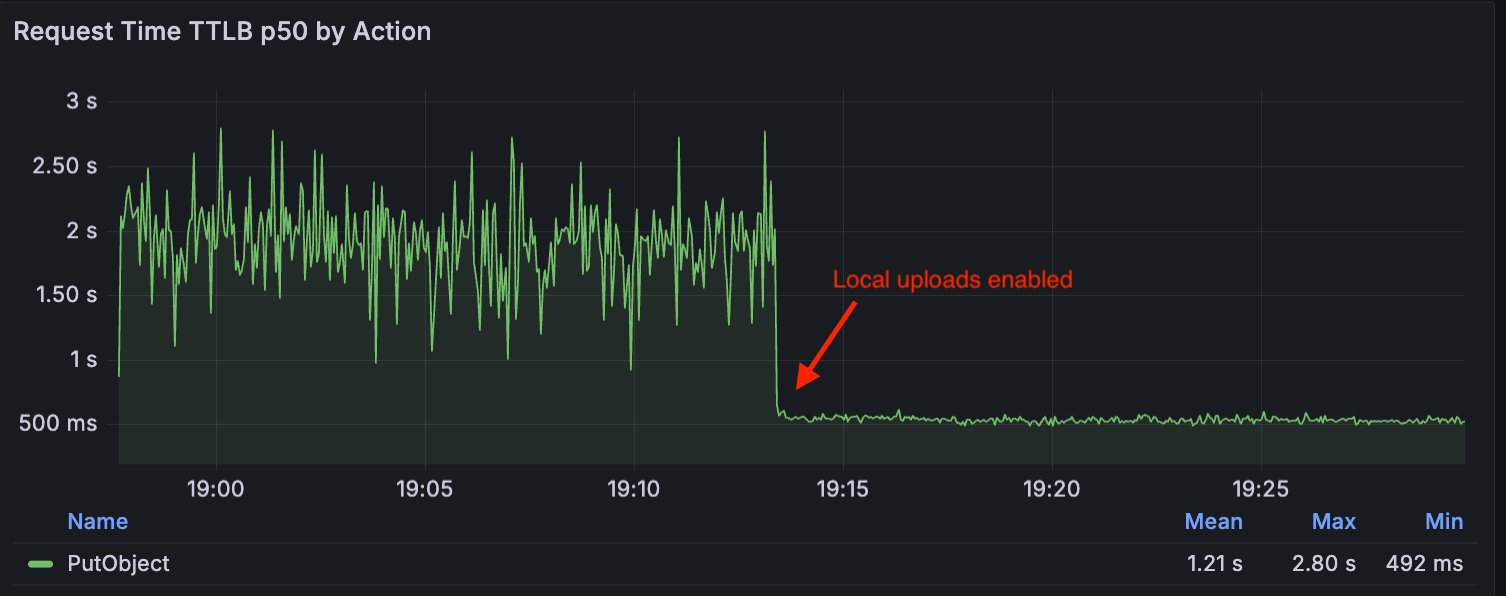

In both private beta tests with customers and synthetic benchmarks, Cloudflare observed up to a 75% reduction in Time to Last Byte (TTLB) when upload requests originate from a region different from the bucket's location. TTLB is measured from when R2 receives the upload request to when it returns a 200 response.
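
To make the percentage concrete, the sketch below shows how such a reduction could be computed from two timings. The helper names `timed_call` and `percent_reduction` are hypothetical, and the sample timings are illustrative values, not measurements. Timing an upload from the client side also includes network transit to R2, so it only approximates TTLB as defined above.

```python
import time


def timed_call(upload, *args):
    """Return (result, elapsed_seconds) for one call to upload(*args)."""
    start = time.perf_counter()
    result = upload(*args)
    return result, time.perf_counter() - start


def percent_reduction(baseline_s, improved_s):
    """Percentage by which improved_s is lower than baseline_s."""
    if baseline_s <= 0:
        raise ValueError("baseline must be positive")
    return (baseline_s - improved_s) / baseline_s * 100.0


# Illustrative numbers only: a 2.0 s upload that drops to 0.5 s.
reduction = percent_reduction(2.0, 0.5)  # 75.0
```

In practice, `upload` would be whatever function performs the PUT against the bucket, called once with Local Uploads disabled and once with it enabled from the same client location.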

This performance gain is particularly significant for applications with users spread across multiple geographic regions. A large upload that previously had to traverse the internet to the bucket's primary region can now complete faster, because the data is written to storage near the client rather than in a distant region.

Technical Architecture

The system leverages Cloudflare's global edge network to terminate uploads locally. When a client uploads an object, the write happens at the edge location closest to the user. The metadata is still published to the bucket's primary region, but the actual data transfer benefits from the proximity of the edge location.

Behind the scenes, Cloudflare uses a pull-based replication pipeline with atomic commits to ensure data consistency. The replication tasks run asynchronously, meaning the client receives confirmation of the upload as soon as the local write completes, not when the replication finishes.
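
A replication loop of this general shape can be sketched as follows. This is an assumption-laden illustration rather than Cloudflare's pipeline: `replicate`, `TransientError`, `copy_to_primary`, and `commit` are hypothetical names, "pull-based" is read here as a worker pulling tasks from a queue, and the atomic commit is modeled as a single metadata update made only after the copy succeeds.

```python
from collections import deque


class TransientError(Exception):
    """Raised by a hypothetical copy step that may succeed on retry."""


def replicate(tasks, copy_to_primary, commit, max_attempts=3):
    """Drain a queue of replication tasks, retrying failed copies.

    tasks: deque of object keys awaiting replication.
    copy_to_primary: callable(key) that copies bytes; may raise TransientError.
    commit: callable(key) that switches the object's metadata to the primary
            copy in one step, and is called only after a successful copy.
    Returns the list of keys that exhausted their attempts.
    """
    attempts = {}
    failed = []
    while tasks:
        key = tasks.popleft()      # the worker pulls the next task
        attempts[key] = attempts.get(key, 0) + 1
        try:
            copy_to_primary(key)
        except TransientError:
            if attempts[key] < max_attempts:
                tasks.append(key)  # requeue for a later retry
            else:
                failed.append(key)
            continue
        commit(key)                # metadata flips only after a full copy
    return failed


# Simulated run: the first copy of "a.bin" fails once, then succeeds.
location = {"a.bin": "apac", "b.bin": "apac"}
calls = {"a.bin": 0, "b.bin": 0}


def flaky_copy(key):
    calls[key] += 1
    if key == "a.bin" and calls[key] == 1:
        raise TransientError("simulated network failure")


def commit_to_primary(key):
    location[key] = "eu"


failed = replicate(deque(["a.bin", "b.bin"]), flaky_copy, commit_to_primary)
```

The client never waits on this loop. Until `commit` runs for a key, its metadata keeps pointing at the local copy, so a failed or retried copy does not leave readers with a partially replicated object.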

Comparison with Other Cloud Providers

Cloudflare isn't alone in addressing the cross-region upload challenge. AWS offers S3 Transfer Acceleration, which uses globally distributed edge locations to optimize routing and reduce internet variability. However, the approaches differ significantly:

- R2 Local Uploads: Performs local writes with asynchronous replication to the bucket's primary region

- S3 Transfer Acceleration: Optimizes routing through CloudFront edge locations, then uploads to the bucket over AWS's private network

Google Cloud Storage and Azure Blob Storage don't currently offer upload layers that accept local writes globally, making Cloudflare's approach unique among major cloud providers.

Use Cases and Availability

The feature targets specific workloads where:

- Users are distributed globally

- Fast and reliable uploads are critical

- Better upload performance is needed without changing the bucket's main location

Customers can monitor the geographic distribution of read and write requests through the request distribution by region graph on their R2 bucket's metrics page.

Cost and Implementation

Importantly, there's no additional cost to enable Local Uploads on Cloudflare R2. Upload requests incur the standard Class A operation costs, just as they do without the feature enabled. This makes it an attractive option for cost-conscious teams looking to improve performance without increasing their cloud bill.

The feature is currently in open beta, and Cloudflare has provided a demo to test local uploads from different locations. Organizations can evaluate the performance benefits for their specific use cases before committing to production deployment.

Why This Matters

For applications serving users across multiple continents, upload latency has long been a challenge. Traditional object storage requires all writes to go to a single region, creating a fundamental trade-off between data locality and performance. Cloudflare's Local Uploads relaxes this constraint: uploads get the low latency of writing close to users, while the bucket keeps the simplicity of a single primary location.

This approach could be particularly valuable for:

- Content management systems with global contributors

- Backup and disaster recovery solutions

- Media upload platforms

- Collaborative applications requiring real-time file sharing

A latency reduction of up to 75% represents a significant improvement in user experience, especially for interactive applications where upload speed directly impacts user satisfaction and productivity.

Learn more about R2 Local Uploads in Cloudflare's official documentation
