Autonomous driving startup comma.ai has revealed its in-house data center, built for about $5 million, which the company says delivers 80% cost savings over cloud alternatives while giving it full control of its infrastructure.

In an era when tech startups default to hyperscale cloud providers, autonomous vehicle technology company comma.ai has taken a radically self-reliant approach. The San Diego-based firm has detailed a privately built data center that cost approximately $5 million to construct, a fraction of the estimated $25+ million that equivalent cloud usage would have cost.
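Taking the two headline figures at face value (the article does not say over what period the cloud estimate is measured), the 80% savings follows directly from the build cost and the cloud estimate, with costs in millions of USD. Because the cloud figure is "$25+ million", 80% is a lower bound:

$$
\text{savings} = 1 - \frac{C_{\text{build}}}{C_{\text{cloud}}} = 1 - \frac{5}{25} = 0.8 = 80\%, \qquad C_{\text{cloud}} \ge 25 \;\Rightarrow\; \text{savings} \ge 80\%
$$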

The decision stems from fundamental concerns about cloud dependency. "Cloud companies make onboarding very easy, and offboarding very difficult," the company stated, highlighting the risks of vendor lock-in and spiraling costs for compute-intensive operations. Unlike cloud environments, which abstract away infrastructure complexity, comma.ai's approach forces engineers to confront the physics directly: watts, bits, and FLOPs become daily considerations.

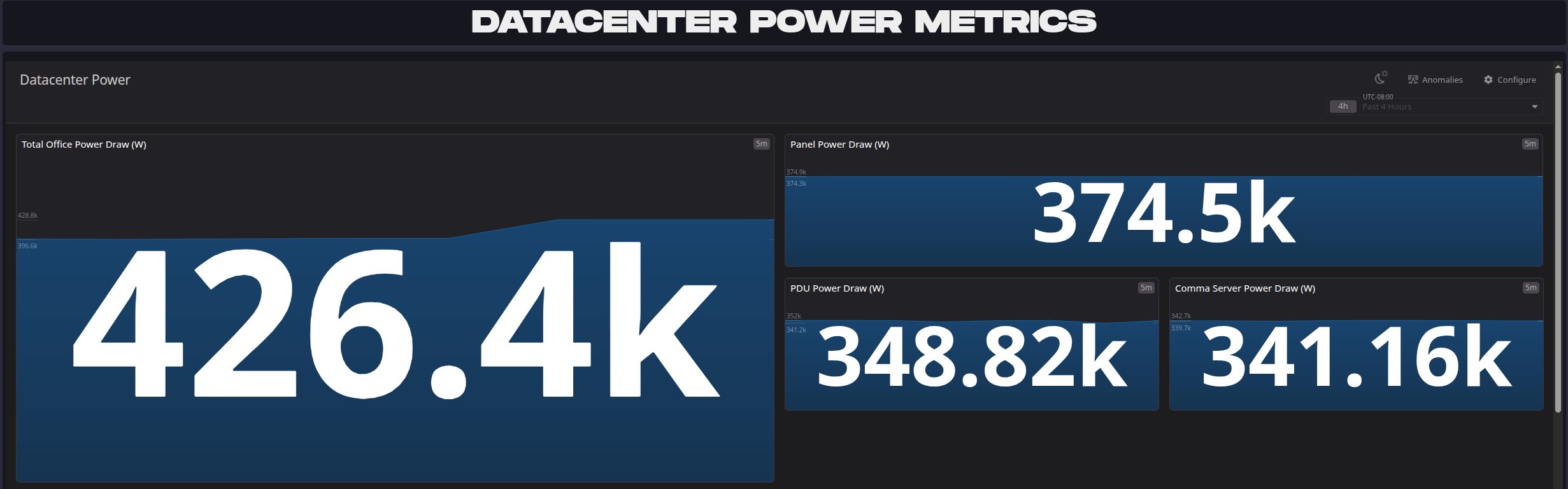

With a peak capacity of 450kW, the facility uses San Diego's mild climate to keep cooling cheap. Instead of energy-hungry CRAC (computer room air conditioning) units, comma.ai cools with outside air, using PID-controlled fans that balance temperature and humidity. The whole cooling system draws just "a couple dozen kW," a small fraction of the facility's 450kW peak.
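The article does not publish comma.ai's controller. As a minimal sketch of the general idea, a PID loop can turn the gap between measured and target temperature into a fan duty cycle. The class name `FanPID`, the gains, and the 25 °C setpoint below are hypothetical, and the sketch handles temperature only; balancing humidity as well would need additional control logic.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class FanPID:
    """Hypothetical PID loop mapping room temperature to a fan duty cycle (0.0-1.0)."""

    kp: float
    ki: float
    kd: float
    setpoint_c: float  # hypothetical target intake temperature, in degrees Celsius
    out_min: float = 0.0
    out_max: float = 1.0
    _integral: float = field(default=0.0, init=False, repr=False)
    _prev_error: Optional[float] = field(default=None, init=False, repr=False)

    def update(self, measured_c: float, dt_s: float) -> float:
        # Positive error means the room is too hot, so the fans should speed up.
        error = measured_c - self.setpoint_c
        derivative = 0.0 if self._prev_error is None else (error - self._prev_error) / dt_s
        self._prev_error = error

        candidate_integral = self._integral + error * dt_s
        output = self.kp * error + self.ki * candidate_integral + self.kd * derivative

        # Simple anti-windup: only keep the new integral if the output is not saturated.
        if self.out_min <= output <= self.out_max:
            self._integral = candidate_integral
        # Clamp to the fan's valid duty-cycle range.
        return min(self.out_max, max(self.out_min, output))


if __name__ == "__main__":
    # Hypothetical gains and setpoint, chosen only for illustration.
    fans = FanPID(kp=0.2, ki=0.01, kd=0.0, setpoint_c=25.0)
    for reading in (28.0, 27.0, 26.0, 24.0):
        print(f"{reading:.1f} C -> fan duty {fans.update(reading, dt_s=1.0):.2f}")
```

Clamping plus conditional integration keeps the integral term from "winding up" while the fans are already at full speed or fully off, so the loop recovers quickly once the room temperature crosses the setpoint.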

The compute core consists of 75 custom-built TinyBox Pro machines with eight GPUs each, 600 GPUs in total, maintained by a small engineering team. Storage is handled by Dell servers holding 4PB of SSD capacity designed for high-throughput access. "Our main storage arrays have no redundancy and each node needs to saturate network bandwidth with random access reads," comma.ai noted, explaining that it prioritizes speed over redundancy for non-critical data.

The software stack is just as custom:

- minikeyvalue (mkv), a distributed key-value store, serves reads at up to 1TB/s

- Miniray, comma.ai's open-source task scheduler, distributes computing work across the cluster

- PyTorch-based training pipelines with custom experiment tracking

- Monorepo code management synced via NFS
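The article does not say how mkv decides which storage node holds a given key; minikeyvalue's own source is the authority on that. As an illustration of one common placement technique for this kind of store, rendezvous (highest-random-weight) hashing ranks every volume by a hash of the volume name and the key. The function `pick_volumes`, the volume names, and the key below are hypothetical.

```python
import hashlib


def pick_volumes(key: str, volumes: list[str], replicas: int = 1) -> list[str]:
    """Rendezvous (highest-random-weight) hashing: rank every volume by a hash of
    (volume, key) and return the top `replicas` volumes for this key.

    replicas defaults to 1, mirroring arrays that store each key only once.
    """

    def weight(volume: str) -> bytes:
        # The MD5 digest of volume + key acts as a deterministic pseudo-random weight.
        return hashlib.md5((volume + "/" + key).encode("utf-8")).digest()

    return sorted(volumes, key=weight, reverse=True)[:replicas]


if __name__ == "__main__":
    # Hypothetical volume servers and key, for illustration only.
    volumes = [f"vol{i}.internal:3001" for i in range(1, 5)]
    print(pick_volumes("route-0042/segment-3/fcamera.hevc", volumes))
```

Two properties make this attractive for a large, read-heavy store: keys land on volumes pseudo-randomly, so random reads spread across every node, and removing a volume only moves the keys that were stored on it, since every other key's top-ranked volume is unchanged.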

"When all you have available is your current compute, the quickest improvements are usually speeding up your code or fixing fundamental issues," the company observed, contrasting this with cloud environments where scaling often masks inefficiencies.

The $540,000 annual power bill remains a pain point given San Diego's high electricity prices, and the company plans to generate its own power in the future. Even so, the overall savings and technical control set comma.ai apart in the autonomous driving space, where processing massive volumes of sensor data demands both computational efficiency and cost discipline.
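As a rough sanity check on that bill, assume (hypothetically) that all of it is energy charges and that the facility drew its full 450kW peak around the clock for a year of 8,760 hours. The implied average price is then about 13.7 cents per kWh. Any average draw below peak would push the effective rate higher, so this is a lower bound under those assumptions:

$$
\frac{540{,}000\ \text{USD/year}}{450\ \text{kW} \times 8{,}760\ \text{h/year}} = \frac{540{,}000\ \text{USD}}{3{,}942{,}000\ \text{kWh}} \approx 0.137\ \text{USD/kWh}
$$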

This build-your-own-infrastructure approach offers a template for other startups with similarly compute-intensive workloads. By sharing technical specifics, comma.ai challenges the assumption that the cloud is the only path for scaling AI operations, and makes the case that owning the stack can bring substantial savings and engineering benefits.
