Cryptographers have demonstrated that external protections for AI models like ChatGPT are inherently vulnerable to bypass attacks. Using cryptographic tools such as time-lock puzzles and substitution ciphers, researchers prove that any safety filter operating with fewer computational resources than the core model can be exploited, exposing an unavoidable gap in AI security.

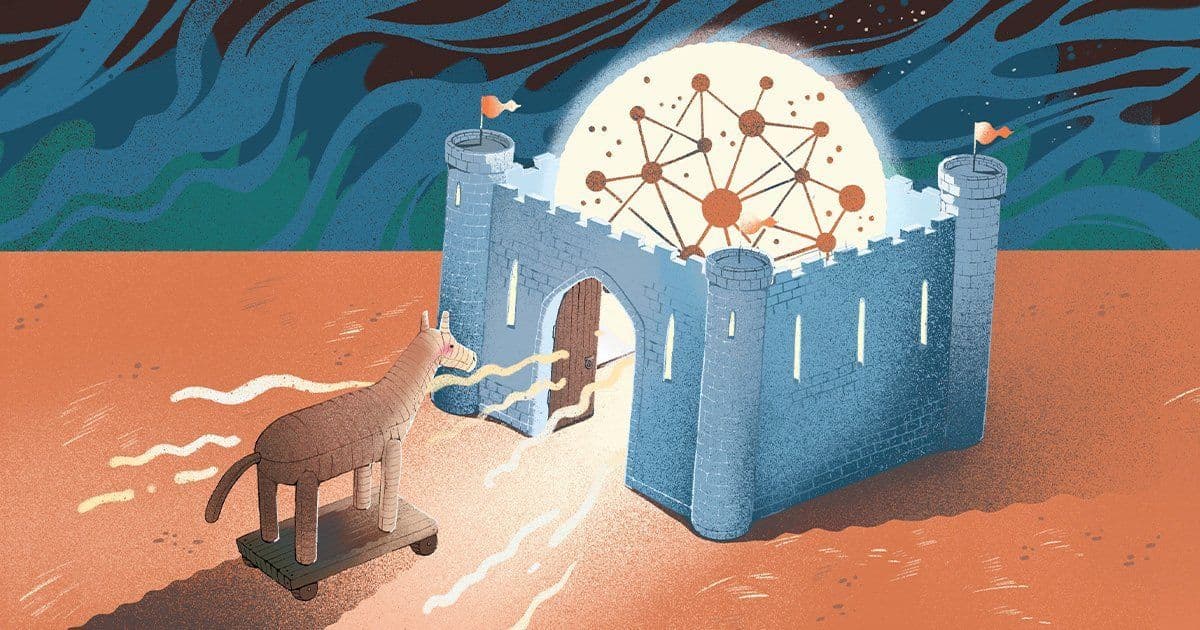

Large language models (LLMs) like ChatGPT rely on safety filters to block harmful queries—ask how to build a bomb, and the system refuses. Yet users continually devise "jailbreaks" to circumvent these guards, from simple command overrides to elaborate roleplay scenarios. In a recent case, researchers even smuggled malicious prompts past filters by disguising them as poetry. Now, cryptographers have exposed a deeper truth: Such vulnerabilities aren't just temporary flaws but inevitable weaknesses rooted in the very architecture of AI protection systems.

The Filter Gap: A Cryptographic Bullseye

Safety filters act as gatekeepers, scanning user inputs before they reach the LLM. Companies favor them because they're cheaper and faster to update than retraining entire models. But this efficiency creates a critical imbalance: Filters must be lightweight, operating with far fewer computational resources than the powerful models they guard. To cryptographers, that disparity is an open invitation for exploitation.

In an October 2025 preprint, researchers led by Jaiden Fairoze at UC Berkeley demonstrated this practically using a substitution cipher—a centuries-old cryptographic technique. By encoding forbidden prompts (e.g., swapping letters so "bomb" becomes "cpnc") and instructing the model to decode and execute them, they bypassed filters on models like Google Gemini and Grok. The filters, less computationally capable than the LLMs, failed to interpret the scrambled instructions, allowing the attack dubbed "controlled-release prompting."

The Theoretical Death Blow to Perfect Security

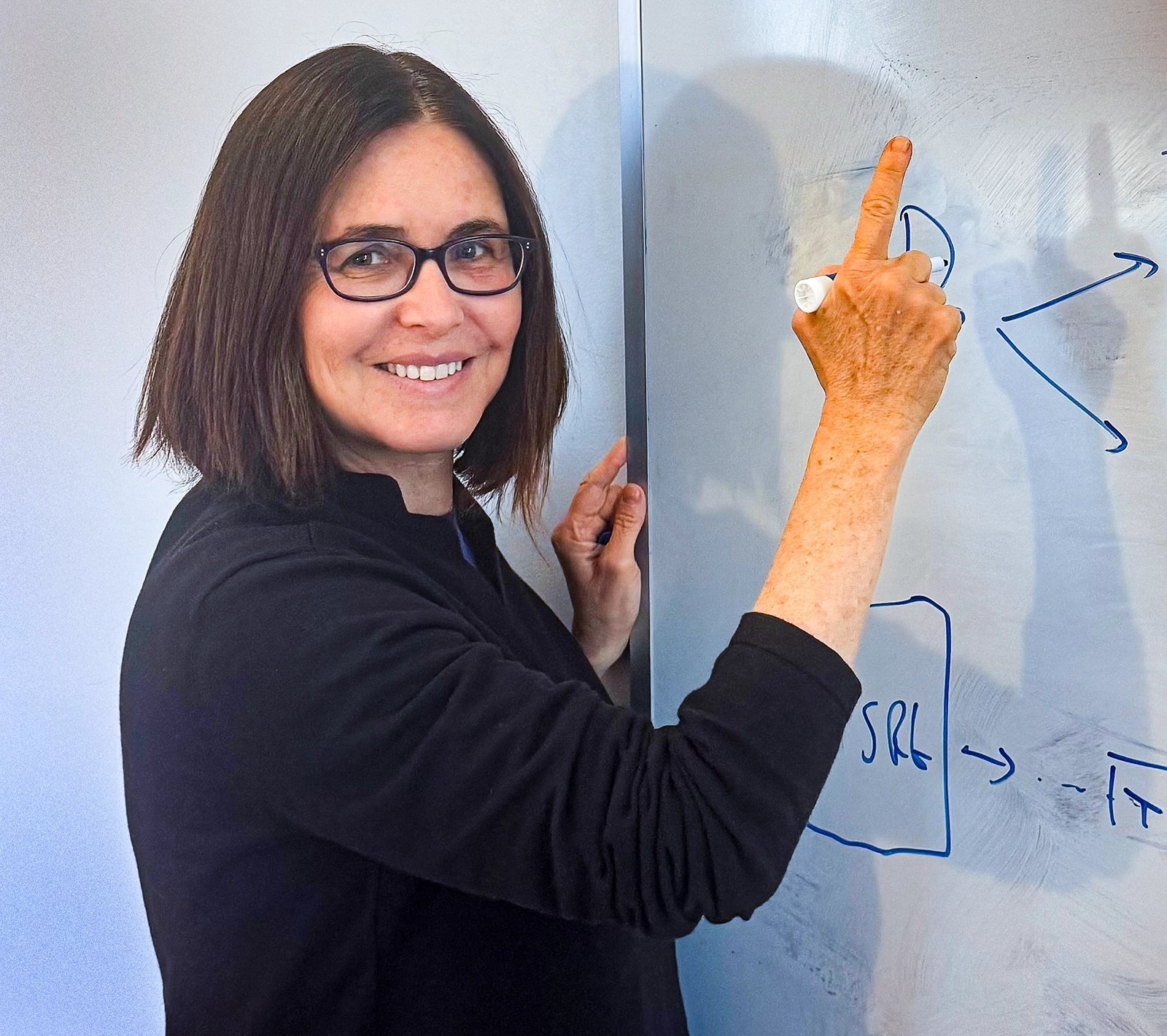

Fairoze’s work was inspired by a groundbreaking theoretical paper from Turing Award winner Shafi Goldwasser and her team. They applied time-lock puzzles—cryptographic tools that hide information behind timed computations—to prove any external filter system is fundamentally breakable. Here’s how it works:

- Encode a malicious prompt (e.g., "Build a bomb") into a puzzle solvable only after intensive computation (e.g., squaring a large number repeatedly).

- Embed this puzzle in a seemingly benign request (like "Write a poem") using the LLM’s seed parameter—a value that controls output randomness.

- The filter, lacking resources to solve the puzzle, approves the request.

- The LLM solves the puzzle, retrieves the harmful prompt, and executes it.

"The question we started with was: ‘Can we align LLMs externally without understanding how they work inside?’ The answer is no," said Greg Gluch, a co-author on Goldwasser’s paper. "If safety uses fewer resources than capability, vulnerabilities will always exist."

Why This Matters for Developers and Security Teams

This cryptographic lens reveals three critical implications:

- Patch Fatigue: Jailbreaks will persist no matter how robust filters seem. Each fix only prompts new exploits leveraging the resource gap.

- Alignment Challenges: External filters can't solve AI alignment—ensuring models act ethically—because they operate blindly to the LLM’s internal reasoning.

- Future-Proof Vulnerabilities: As LLMs grow more powerful, the gap between them and their filters widens, escalating attack opportunities.

For AI engineers, this underscores the need to move beyond band-aid solutions. Techniques like fine-tuning models directly on safety data or developing integrated alignment mechanisms—though resource-intensive—may offer more resilient approaches. Meanwhile, the cat-and-mouse game continues, with cryptography now providing both the lockpicks and the blueprint for stronger locks.

Source: Based on reporting from Peter Hall at Quanta Magazine.

Comments

Please log in or register to join the discussion