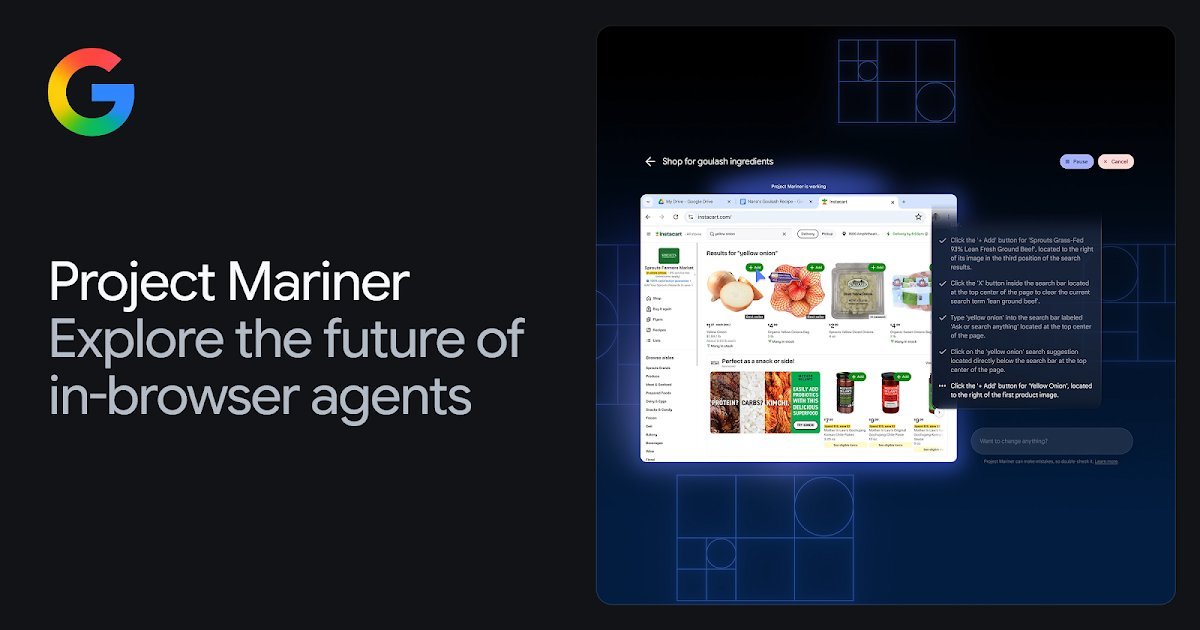

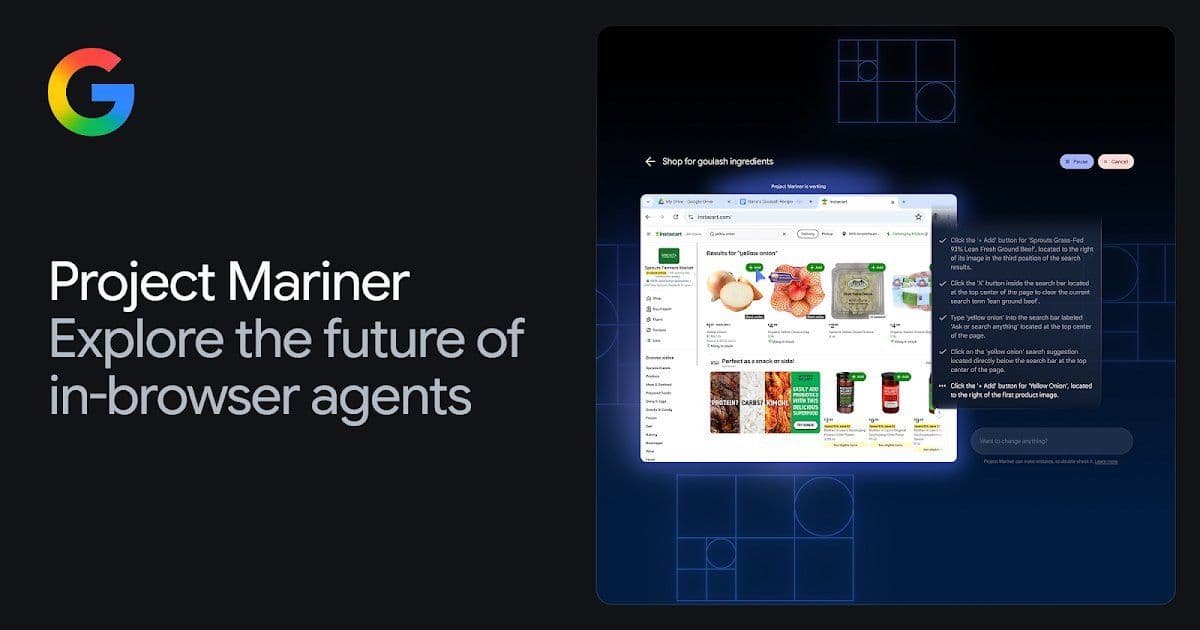

Google DeepMind unveils Project Mariner, an AI system using eye-tracking technology to interpret web elements with human-like understanding. This breakthrough could revolutionize web automation and accessibility tools by enabling AI to comprehend complex UIs. The research signals a leap toward visual-intelligence systems that interact with digital interfaces contextually.

Imagine software that doesn’t just scrape web pages but genuinely understands them—recognizing text hierarchies, interpreting form fields, and identifying functional components like a human would. Google DeepMind’s newly revealed Project Mariner aims to achieve precisely that, leveraging eye-tracking technology to train AI models in visual comprehension of browser interfaces.

Traditional automation tools rely on DOM structures or pixel patterns, often failing with dynamically generated content. Mariner takes a fundamentally different approach:

"By simulating human visual attention patterns, the model observes, identifies, and understands web elements—including text blocks, code snippets, images, and interactive forms—to build a holistic representation of on-screen content,"

explains DeepMind's research team. This method allows the AI to infer relationships between elements (like recognizing a "Submit" button's purpose relative to a form) without relying solely on underlying code.

The implications span multiple domains:

- Accessibility: AI could dynamically adapt interfaces for users with disabilities by understanding visual context beyond alt-text.

- Testing & Development: Automated QA tools might visually validate UI behavior in ways current selectors cannot.

- RPA Evolution: Robotic Process Automation could handle unstructured web tasks requiring contextual judgment.

Critically, the model doesn’t require manual annotation. It learns by analyzing gaze patterns from humans interacting with web pages, creating a self-supervised training loop. While still in research phase, Mariner hints at a future where AI navigates digital environments with perceptual intuition—potentially transforming how we build and interact with the web. As interfaces grow more dynamic, such visual intelligence may become essential infrastructure.

Comments

Please log in or register to join the discussion