MiniMax has open-sourced M2.5, a coding model with 10B activated parameters that scores 80.2% on SWE-Bench Verified and runs inference at 100 TPS for $1/hour. Users built more than 10K agents with it in the first 24 hours.

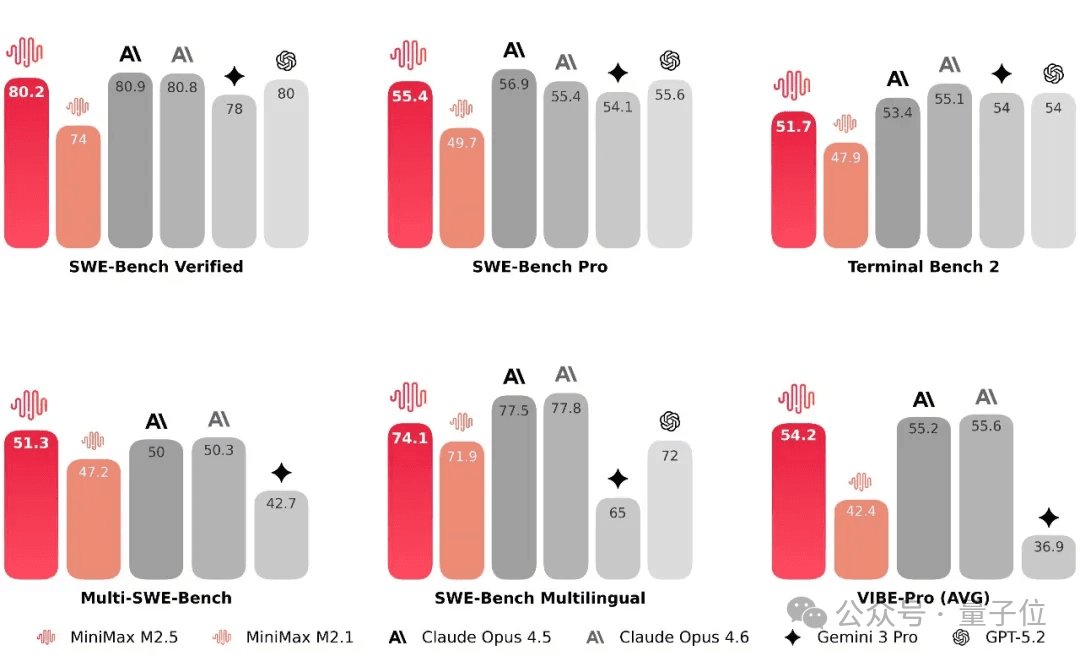

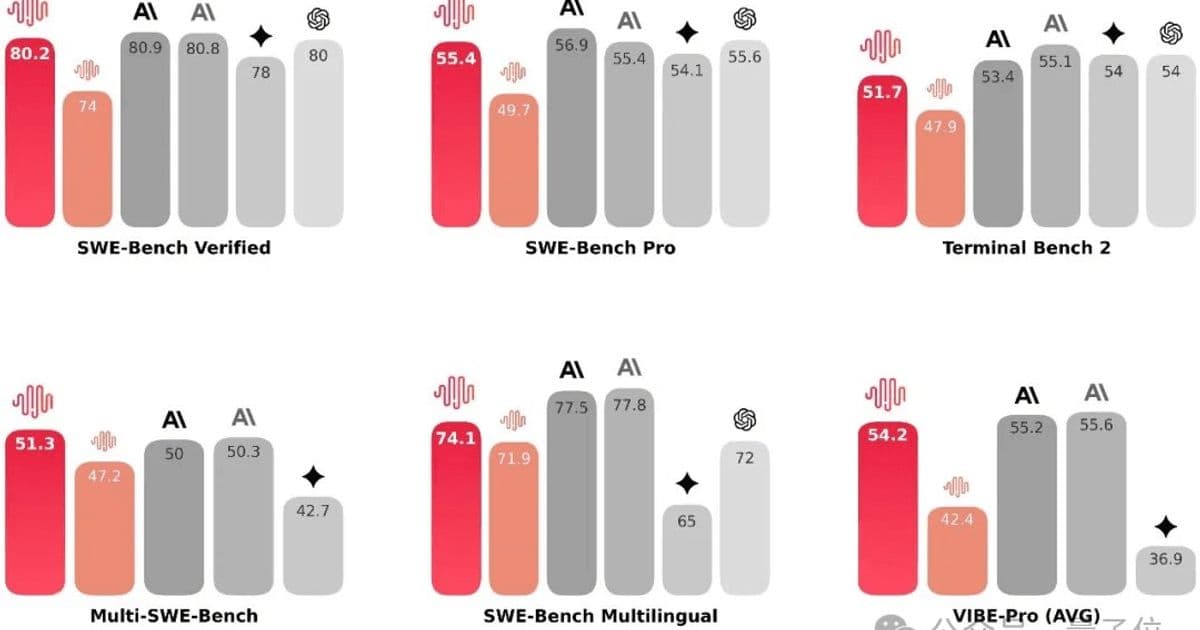

MiniMax has released M2.5, positioning it as a "production-grade native agent model" that delivers flagship-level performance with just 10 billion activated parameters. The model scored 80.2% on SWE-Bench Verified and ranked first on Multi-SWE-Bench, surpassing Claude Opus 4.6 in multi-language, complex environments.

Technical Architecture and Performance

The M2.5 model exhibits what MiniMax calls "native spec behavior": before writing any code, it proactively breaks down the architecture and plans the functionality, much as a human architect would. This approach lets the model handle full-stack development, including frontend, backend, and database work, as well as Vibe Coding that turns natural language into executable system designs.

On the performance front, M2.5 sustains inference throughput of over 100 tokens per second, which MiniMax says is twice as fast as mainstream flagship models. Pricing is quoted per hour of continuous operation: $1/hour at 100 tokens/second, dropping to $0.3/hour at 50 tokens/second.
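
To compare these hourly figures with the per-token pricing most APIs use, the arithmetic can be sketched as below. This is a back-of-the-envelope conversion, assuming the model generates output continuously at exactly the quoted rate and that the hourly price covers only those generated tokens. The function name `cost_per_million_tokens` is illustrative and not part of any MiniMax API.

```python
SECONDS_PER_HOUR = 3600


def cost_per_million_tokens(tokens_per_second: float, dollars_per_hour: float) -> float:
    """Dollars per one million generated tokens at a steady throughput."""
    tokens_per_hour = tokens_per_second * SECONDS_PER_HOUR
    return dollars_per_hour / tokens_per_hour * 1_000_000


# Figures quoted in the article: (tokens per second, dollars per hour).
QUOTED_TIERS = {"100 TPS": (100, 1.0), "50 TPS": (50, 0.3)}

if __name__ == "__main__":
    for name, (tps, usd_per_hour) in QUOTED_TIERS.items():
        price = cost_per_million_tokens(tps, usd_per_hour)
        print(f"{name}: ${price:.2f} per million generated tokens")
```

Under those assumptions, the two tiers work out to roughly $2.78 and $1.67 per million generated tokens respectively.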

Rapid Development Through Agent RL Scaling

MiniMax attributes the rapid improvement from M2 to M2.5 - with SWE-Bench scores jumping from 69.4 to 80.2 in just 108 days - to large-scale Agent Reinforcement Learning (RL Scaling). The company's proprietary Forge framework plays a crucial role in this acceleration by decoupling the training engine from the agent, enabling generalized optimization across any scaffolding.
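
The article does not describe Forge's interfaces, so the following is only a toy illustration of what decoupling a training engine from the agent scaffold can look like in general. Every name here (`Trajectory`, `Scaffold`, `collect_batch`, the two scaffold classes, and the string-based reward) is hypothetical and chosen for the example; none of it is taken from MiniMax's code.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, Protocol

Policy = Callable[[str], str]  # maps a prompt to a model response


@dataclass
class Trajectory:
    task: str
    output: str
    reward: float


class Scaffold(Protocol):
    """Anything that can run a policy on a task and report a trajectory."""

    def rollout(self, policy: Policy, task: str) -> Trajectory: ...


class SingleShotScaffold:
    """Hypothetical scaffold: one model call per task."""

    def rollout(self, policy: Policy, task: str) -> Trajectory:
        output = policy(task)
        return Trajectory(task, output, reward=float("done" in output))


class PlanThenActScaffold:
    """Hypothetical scaffold: a planning call followed by an acting call."""

    def rollout(self, policy: Policy, task: str) -> Trajectory:
        plan = policy(f"plan: {task}")
        output = policy(f"act: {plan}")
        return Trajectory(task, output, reward=float("done" in output))


def collect_batch(
    scaffolds: Iterable[Scaffold], policy: Policy, tasks: list[str]
) -> list[Trajectory]:
    """Training-engine side: it only ever sees Trajectory records,
    never the internals of the scaffold that produced them."""
    return [s.rollout(policy, t) for s in scaffolds for t in tasks]


def mean_reward(batch: list[Trajectory]) -> float:
    return sum(t.reward for t in batch) / len(batch)


if __name__ == "__main__":
    toy_policy: Policy = lambda prompt: prompt + " done"  # stand-in for a model
    batch = collect_batch(
        [SingleShotScaffold(), PlanThenActScaffold()], toy_policy, ["fix bug", "add test"]
    )
    print(len(batch), mean_reward(batch))
```

The design point is that `collect_batch` depends only on the `Scaffold` protocol, so a new scaffold can be added without changing the training-side code.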

According to MiniMax, Forge achieves a 40x training speedup through asynchronous scheduling and tree-based merging.
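
The article gives no detail on how tree-based merging works. One plausible reading, offered here as an assumption rather than a description of Forge, is that rollouts sharing a common prefix (the same system prompt or the same early steps) are merged into a prefix tree so that shared tokens are processed once instead of once per rollout. The sketch below only counts how many token positions such merging would save; `TrieNode`, `merge_into_trie`, and `prefix_sharing_ratio` are hypothetical names.

```python
from dataclasses import dataclass, field


@dataclass
class TrieNode:
    # Maps a token id to the child node reached by emitting that token.
    children: dict[int, "TrieNode"] = field(default_factory=dict)


def merge_into_trie(sequences: list[list[int]]) -> TrieNode:
    """Merge token sequences into one prefix tree; shared prefixes share nodes."""
    root = TrieNode()
    for seq in sequences:
        node = root
        for tok in seq:
            node = node.children.setdefault(tok, TrieNode())
    return root


def count_nodes(node: TrieNode) -> int:
    """Number of token positions stored in the tree (the root holds no token)."""
    return sum(1 + count_nodes(child) for child in node.children.values())


def prefix_sharing_ratio(sequences: list[list[int]]) -> float:
    """Total tokens across all sequences divided by unique tokens after merging."""
    total = sum(len(seq) for seq in sequences)
    unique = count_nodes(merge_into_trie(sequences))
    return total / unique


if __name__ == "__main__":
    # Three toy rollouts that share the prefix [1, 2]; two also share [1, 2, 3].
    rollouts = [[1, 2, 3, 4], [1, 2, 3, 5], [1, 2, 6, 7]]
    print(count_nodes(merge_into_trie(rollouts)))  # 7 unique positions vs. 12 total
    print(round(prefix_sharing_ratio(rollouts), 3))
```

In this toy case, 12 token positions collapse to 7, so the benefit of merging grows with how much of each rollout is shared. Nothing here reproduces or explains the reported 40x figure.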

Real-World Deployment and Adoption

Internally, M2.5 has already demonstrated substantial impact at MiniMax. The model autonomously handles 30% of real business tasks across R&D, product, sales, HR, and finance departments. In coding specifically, M2.5-generated code accounts for 80% of newly committed code within the company.

M2.5 launched on MiniMax Agent on February 12 and was open-sourced globally the next day. Within 24 hours, users had built over 10,000 "experts" (custom agents) on the platform, indicating strong community adoption and interest.

Market Positioning and Competition

MiniMax positions M2.5 as a "full-stack AI employee" that can rival established models like Claude Opus 4.6. The combination of high performance (80.2% SWE-Bench Verified), low cost ($1/hour), and rapid inference (100+ TPS) creates a compelling value proposition for developers and organizations looking to automate software development tasks.

The model's ability to handle complex, multi-language environments while maintaining cost efficiency could make it particularly attractive for startups and enterprises looking to scale their development capabilities without proportional increases in human resources.

Technical Implications

The success of M2.5's architecture - achieving flagship performance with only 10 billion activated parameters - suggests that efficient model design and training methodologies can rival brute-force scaling approaches. This aligns with broader industry trends toward more efficient AI systems that deliver high performance without requiring massive computational resources.

If the reported 40x training acceleration holds up in practice, Forge's combination of asynchronous scheduling and tree-based merging could influence how future agent models are developed and optimized.

Open Source Strategy

By open-sourcing M2.5, MiniMax is following a strategy similar to other AI companies that have found success through community-driven development and adoption. The rapid creation of 10,000+ experts within 24 hours suggests that the open-source community sees significant value in the model's capabilities.

This approach also allows MiniMax to benefit from community contributions while establishing its technology as a standard in the agent model space. The combination of high performance, low cost, and open access creates a strong competitive position in the rapidly evolving AI development tools market.

The release of M2.5 represents a significant milestone in the evolution of AI coding assistants and agent models. By combining high performance with cost efficiency and rapid deployment capabilities, MiniMax has created a tool that could fundamentally change how software development is approached in organizations of all sizes.

As the AI development tools landscape continues to evolve, models like M2.5 that can deliver flagship performance at a fraction of the cost of traditional approaches may become increasingly important. The success of this model could influence how other companies approach the development and deployment of AI coding assistants and agent models in the future.
