Dell's PowerEdge R670 pushes 1U dual-socket density further with up to 20x E3.S NVMe bays, PCIe Gen5 risers, and tool-less serviceability. The R670 represents a calculated shift toward EDSFF storage form factors while maintaining backward compatibility.

The Dell PowerEdge R670 is a 1U dual-socket server that attempts to solve a persistent problem: how to pack more storage, I/O, and compute into a standard rack unit without sacrificing serviceability. With E3.S storage, PCIe Gen5 risers, and tool-less serviceability, this generation makes a 1U server considerably more useful.

External Hardware Overview

The R670 measures 815.14mm (32.09 inches) deep without the bezel. The bezel adds just under 3mm (0.11in) and serves two functions: it secures the SSDs against accidental removal and gives the front a cleaner look. More importantly, Dell offers a front I/O configuration that cannot be used with the bezel installed, a trade-off showing that Dell is willing to favor front-side serviceability over the bezel.

The front I/O block places a USB Type-C service port and a power button on the right rack ear, while the left side has a standard rack ear. Putting these ports at the front lets data center technicians reach them quickly without going around to the rear of the rack.

Storage Density: The E3.S Transition

The headline feature is storage density. Instead of the traditional 8x or 10x U.2 2.5" NVMe bays, the R670 offers 16x E3.S drive bays standard, with an option to expand to 20x on the front face.

E3.S is a thinner SSD form factor from the EDSFF (Enterprise & Data Center SSD Form Factor) family, and its thinner profile enables more drives per rack unit. With 61.44TB SSDs, sixteen drives provide just under 1PB of raw storage, and the 20-drive configuration surpasses 1PB per U. Just a few years ago, that density required exotic storage chassis built around smaller form factor SSDs.
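The capacity figures come down to simple multiplication. The sketch below assumes decimal units (1PB = 1,000TB) and counts raw capacity only, before any RAID, spare, or filesystem overhead; `raw_capacity_pb` is a hypothetical helper for illustration.

```python
# Raw capacity of the front E3.S bays using 61.44TB drives.
# Assumption: decimal units (1PB = 1,000TB) and raw capacity only,
# before RAID, spares, or filesystem overhead.
DRIVE_TB = 61.44


def raw_capacity_pb(drive_count: int, drive_tb: float = DRIVE_TB) -> float:
    """Return raw capacity in decimal petabytes for drive_count drives."""
    return drive_count * drive_tb / 1000


# Prints 0.492, 0.983, and 1.229 PB for 8, 16, and 20 drives.
for bays in (8, 16, 20):
    print(f"{bays:>2} drives: {raw_capacity_pb(bays):.3f} PB raw")
```

Sixteen drives land just under 1PB and twenty clear it, before any redundancy is subtracted.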

The transition to EDSFF is deliberate. Looking ahead to PCIe Gen6 servers, the U.2 connector is expected to be phased out, and Dell is positioning the R670 as a bridge to that future while the industry transitions.

The drive bays are arranged in two groups of eight, with eight drives on each side of the chassis. This symmetric layout helps with thermal management and cable routing.
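The layout also determines how many PCIe lanes must be cabled to each side. As a rough sketch, assume each E3.S drive gets a dedicated x4 link with no PCIe switch in between. This is an assumption: x4 is typical for NVMe SSDs, but the article does not give the R670's link widths. Under that assumption, the lane counts work out as follows. `drive_lanes` is a hypothetical helper.

```python
# Hypothetical PCIe lane budget for the front drive bays.
# Assumption: each E3.S drive gets its own x4 link (typical for NVMe SSDs)
# and no PCIe switch sits between the drives and the CPUs.
LANES_PER_DRIVE = 4


def drive_lanes(drive_count: int, lanes_per_drive: int = LANES_PER_DRIVE) -> int:
    """Return the PCIe lanes needed to give every drive a dedicated link."""
    return drive_count * lanes_per_drive


groups = {"left group": 8, "right group": 8}
for name, count in groups.items():
    print(f"{name}: {drive_lanes(count)} lanes")  # 32 lanes per group of eight

print(f"16 bays: {drive_lanes(16)} lanes; 20 bays: {drive_lanes(20)} lanes")  # 64 and 80
```

Under these assumptions, each group of eight needs 32 lanes routed to its side of the chassis, so an even split would keep the two cable runs balanced.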

Rear I/O and Power

The rear of the R670 contains redundant 800W 80Plus Platinum power supplies, one on each side. This dual-side placement simplifies cable management in racks that have PDUs on both sides, a common configuration in modern data centers.

The rear I/O block includes:

- VGA port

- Two USB Type-A ports

- Out-of-band iDRAC port for remote management

PCIe Expansion: Tool-less Riser Design

Our test configuration uses three low-profile risers. Dell also offers riser options for other card sizes, rear storage configurations, and specialized accelerator cards.

Riser 2 in our system provides two PCIe Gen5 x16 low-profile slots. Like many modern servers, the R670 uses cables to route additional PCIe lanes to its risers.
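To show what two Gen5 x16 slots offer, the following sketch estimates raw per-direction bandwidth from the PCIe 5.0 signaling rate of 32 GT/s per lane and its 128b/130b encoding. It ignores packet and flow-control overhead, so real-world throughput will be lower. `slot_bandwidth_gb_s` is a hypothetical helper.

```python
# Approximate one-direction bandwidth of Riser 2's two PCIe Gen5 x16 slots.
# PCIe 5.0 signals at 32 GT/s per lane with 128b/130b encoding; packet and
# flow-control overhead is ignored, so real throughput will be lower.
GEN5_GT_PER_S = 32.0
ENCODING_EFFICIENCY = 128 / 130


def slot_bandwidth_gb_s(lanes: int) -> float:
    """Return approximate one-direction bandwidth in GB/s for a Gen5 link."""
    bits_per_second = lanes * GEN5_GT_PER_S * 1e9 * ENCODING_EFFICIENCY
    return bits_per_second / 8 / 1e9


riser2_slot_widths = [16, 16]  # two Gen5 x16 low-profile slots
total_lanes = sum(riser2_slot_widths)
total_bw = sum(slot_bandwidth_gb_s(w) for w in riser2_slot_widths)
print(f"Riser 2: {total_lanes} lanes, ~{total_bw:.0f} GB/s per direction")  # 32 lanes, ~126 GB/s
```

Each x16 slot works out to roughly 63 GB/s per direction, which explains why these lanes are worth cabling out to the risers.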

Dell's tool-less riser design stands out. The risers can be removed without screws or specialized tools, which shortens maintenance time in production environments.

Riser 4 is a low-profile riser slot with a neat feature: both the front and rear of cards can be secured using tool-less supports. This small detail matters in high-density deployments where cards need to be swapped quickly.

Internal Architecture

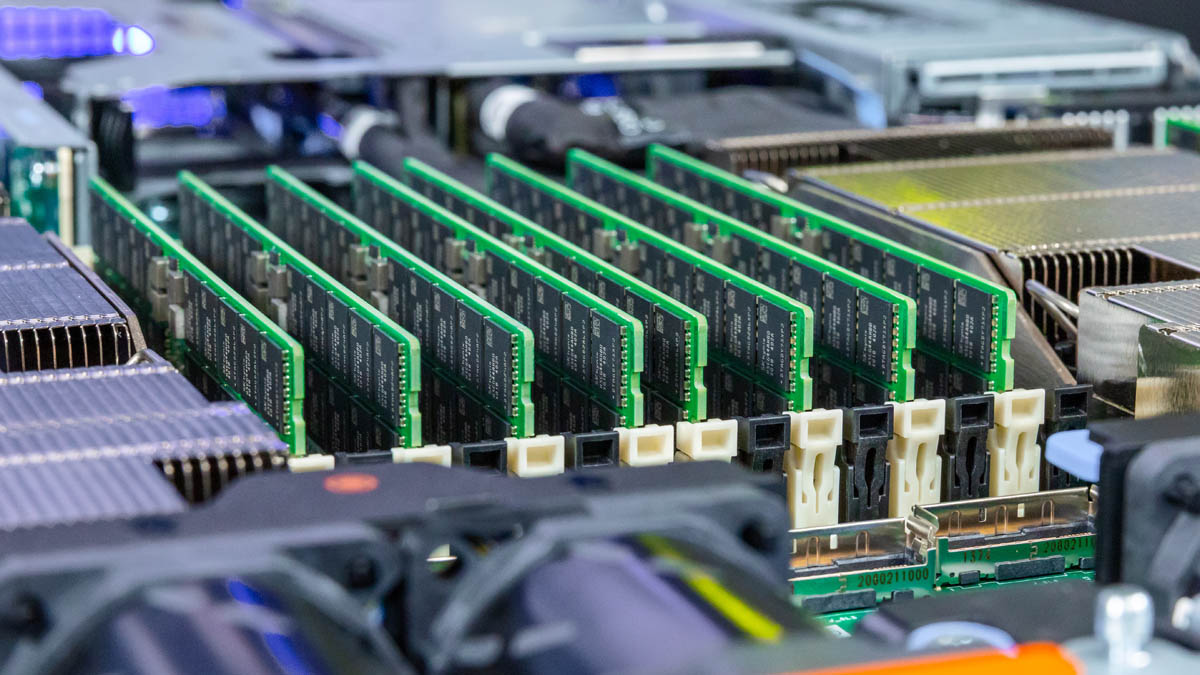

The internal layout follows Dell's standard 1U dual-socket design, but with optimizations for the E3.S drive bays. The chassis accommodates Intel Xeon 6 processors with DDR5 memory, though specific processor and memory configurations would depend on the exact SKU.

The shift to E3.S requires rethinking internal airflow and thermal management. The thinner drives allow more bays, but they also change how cooling air moves through the chassis. Dell's thermal engineering in the R670 appears to prioritize density while maintaining acceptable operating temperatures.

Trade-offs and Considerations

The front I/O configuration that cannot be used with the bezel is a deliberate trade-off. Data centers that prioritize physical security (via bezels) will need to choose between that protection and front-side serviceability. The choice reflects Dell's recognition that operational models differ: some environments value rapid front access, while others will keep the bezel for physical security.

The move to E3.S is forward-looking but raises compatibility questions. Organizations with existing U.2 drive investments cannot move those drives into the E3.S bays and will need to replace them. However, the density gains are large enough that the transition makes financial sense for new deployments requiring maximum storage per rack unit.

Deployment Considerations

For organizations considering the R670:

Storage density: 20x E3.S bays with 61.44TB drives = ~1.2PB raw per 1U. This changes capacity planning for storage-heavy workloads.

Serviceability: Tool-less risers and front I/O reduce maintenance windows, and the front USB Type-C service port gives technicians local access without going to the rear of the rack.

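Power: The 800W redundant supplies need to be checked against the chosen Xeon 6 processors and drive population. In a 1+1 redundant setup the entire load must fit on a single supply, so high-core-count processors and a full set of drives consume that headroom quickly. The dual-side placement simplifies PDU cabling.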
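Whether 800W supplies leave headroom depends on the configuration. The sketch below estimates headroom in 1+1 redundant mode, where the full load must fit on one supply. Every wattage in it (per-socket TDP, per-drive power, and a lump sum for fans, memory, and NICs) is a hypothetical placeholder, not a Dell specification. Substitute figures from the actual SKU and vendor power-planning data.

```python
from dataclasses import dataclass


@dataclass
class PowerConfig:
    """Rough server power model; every wattage here is a hypothetical placeholder."""
    cpu_watts: float       # per-socket TDP
    sockets: int
    drive_watts: float     # per-drive active power
    drives: int
    platform_watts: float  # lump sum for fans, DIMMs, NICs, BMC, and so on

    def load_watts(self) -> float:
        return (self.cpu_watts * self.sockets
                + self.drive_watts * self.drives
                + self.platform_watts)


def redundant_headroom(cfg: PowerConfig, psu_watts: float = 800, psus: int = 2) -> float:
    """Headroom in N+1 mode: the load must fit on psus - 1 supplies (one for 1+1)."""
    usable_watts = psu_watts * (psus - 1)
    return usable_watts - cfg.load_watts()


# Hypothetical builds; substitute real component figures for an actual SKU.
light = PowerConfig(cpu_watts=150, sockets=2, drive_watts=15, drives=8, platform_watts=150)
heavy = PowerConfig(cpu_watts=350, sockets=2, drive_watts=20, drives=20, platform_watts=150)

for name, cfg in (("light", light), ("heavy", heavy)):
    headroom = redundant_headroom(cfg)
    status = "fits" if headroom >= 0 else "exceeds one PSU"
    print(f"{name}: {cfg.load_watts():.0f} W load, {headroom:+.0f} W headroom ({status})")
```

With these placeholder values, the lighter build leaves 230W of headroom while the heavier one exceeds a single 800W supply by 450W. The chosen processors and drive count therefore matter more than the PSU rating alone.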

Future-proofing: EDSFF adoption means the R670's drive form factor carries forward into PCIe Gen6 platforms, though the timing of that transition depends on the wider industry.

The R670 represents Dell's calculated bet that storage density and serviceability will drive 1U server purchases more than raw compute density alone. For workloads that need both high-performance storage and dual-socket compute in a compact form factor, the R670 delivers a specific value proposition that wasn't available in previous generations.
