DoorDash has deployed an AI safety system called SafeChat that processes millions of daily interactions across chat, images, and voice calls. Through a carefully engineered combination of machine learning models and human oversight, it has contributed to a roughly 50% reduction in low- and medium-severity safety incidents.

DoorDash's implementation of SafeChat is a notable case study in deploying AI for safety-critical infrastructure rather than user engagement. It demonstrates how a layered architecture can balance cost, latency, and accuracy while operating at production scale.

What Changed: From Reactive to Proactive Safety

Traditional safety systems in marketplace platforms typically relied on user reporting and manual review, creating delays between incident occurrence and intervention. DoorDash's SafeChat shifts this paradigm by applying machine learning models to detect and respond to unsafe content in near real-time across three communication channels: text messages, images, and voice calls.

The system's core innovation lies in its enforcement strategy. Rather than simply flagging content for later review, SafeChat enables immediate actions such as allowing Dashers to cancel orders when high-risk messages are detected, terminating calls with inappropriate content, or restricting future communications between parties. This creates a safety net that operates at the speed of conversation rather than the speed of human review.

Architecture: A Three-Phase Evolution

Phase 1: The Three-Layer Foundation

DoorDash engineers initially implemented a three-layered text moderation approach that prioritized cost efficiency and recall:

First Layer (Moderation API): A low-cost, high-recall filter that automatically cleared approximately 90% of messages with minimal latency. This layer served as a coarse filter, catching obvious violations while allowing safe traffic to pass through quickly.

Second Layer (Fast LLM): Messages that weren't cleared by the first layer were processed by a fast, low-cost large language model with higher precision. This model identified 99.8% of messages as safe, providing a second checkpoint before more expensive processing.

Third Layer (Precise LLM): The remaining messages—those flagged by the second layer—were evaluated by a more precise, higher-cost LLM. This model scored messages across multiple dimensions: profanity, threats, and sexual content. These scores enabled specific safety actions, such as allowing Dashers to cancel orders when high-risk messages were detected.

This architecture ensured that the most expensive processing was reserved for the smallest subset of potentially problematic messages, optimizing both cost and response time.
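The cascade logic can be sketched as follows. This is a minimal illustration, not DoorDash's code: the layer names, the keyword stubs standing in for the moderation API and the two LLMs, and the score values are all hypothetical. The point it shows is structural: cheap layers run first and short-circuit, and only messages that none of them clear pay for the multi-dimensional scorer.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional, Tuple

# A cheap layer returns True to clear a message as safe, False to escalate it.
CheapLayer = Callable[[str], bool]
# The precise layer returns per-category risk scores in [0, 1].
PreciseScorer = Callable[[str], Dict[str, float]]

@dataclass
class CascadeResult:
    cleared_by: Optional[str]  # layer that cleared the message; None if it reached the end
    scores: Dict[str, float] = field(default_factory=dict)

def moderate(message: str, cheap_layers: List[Tuple[str, CheapLayer]],
             precise: PreciseScorer) -> CascadeResult:
    # Try the cheapest layers first and stop as soon as one clears the message.
    for name, layer in cheap_layers:
        if layer(message):
            return CascadeResult(cleared_by=name)
    # Only messages that no cheap layer cleared pay for the expensive scorer.
    return CascadeResult(cleared_by=None, scores=precise(message))

# --- Hypothetical keyword stubs standing in for real models ---
SUSPICIOUS = ("damn", "hurt", "kill")
BENIGN_PHRASES = {"this heat is killing me"}

def moderation_api_stub(msg: str) -> bool:
    # High recall: clears only messages that contain no suspicious token at all.
    return not any(tok in msg.lower() for tok in SUSPICIOUS)

def fast_llm_stub(msg: str) -> bool:
    # Higher precision: recognizes some suspicious-looking but benign phrasing.
    return msg.lower() in BENIGN_PHRASES

def precise_llm_stub(msg: str) -> Dict[str, float]:
    # Scores the three dimensions named in the article.
    text = msg.lower()
    return {
        "profanity": 0.9 if "damn" in text else 0.0,
        "threat": 0.95 if "hurt" in text else 0.0,
        "sexual": 0.0,
    }

LAYERS = [("moderation_api", moderation_api_stub), ("fast_llm", fast_llm_stub)]
```

With these stubs, "Your order is at the door" is cleared by the first layer, "This heat is killing me" is escalated once and cleared by the fast LLM, and "I will hurt you" reaches the precise scorer with a high threat score that downstream enforcement could act on.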

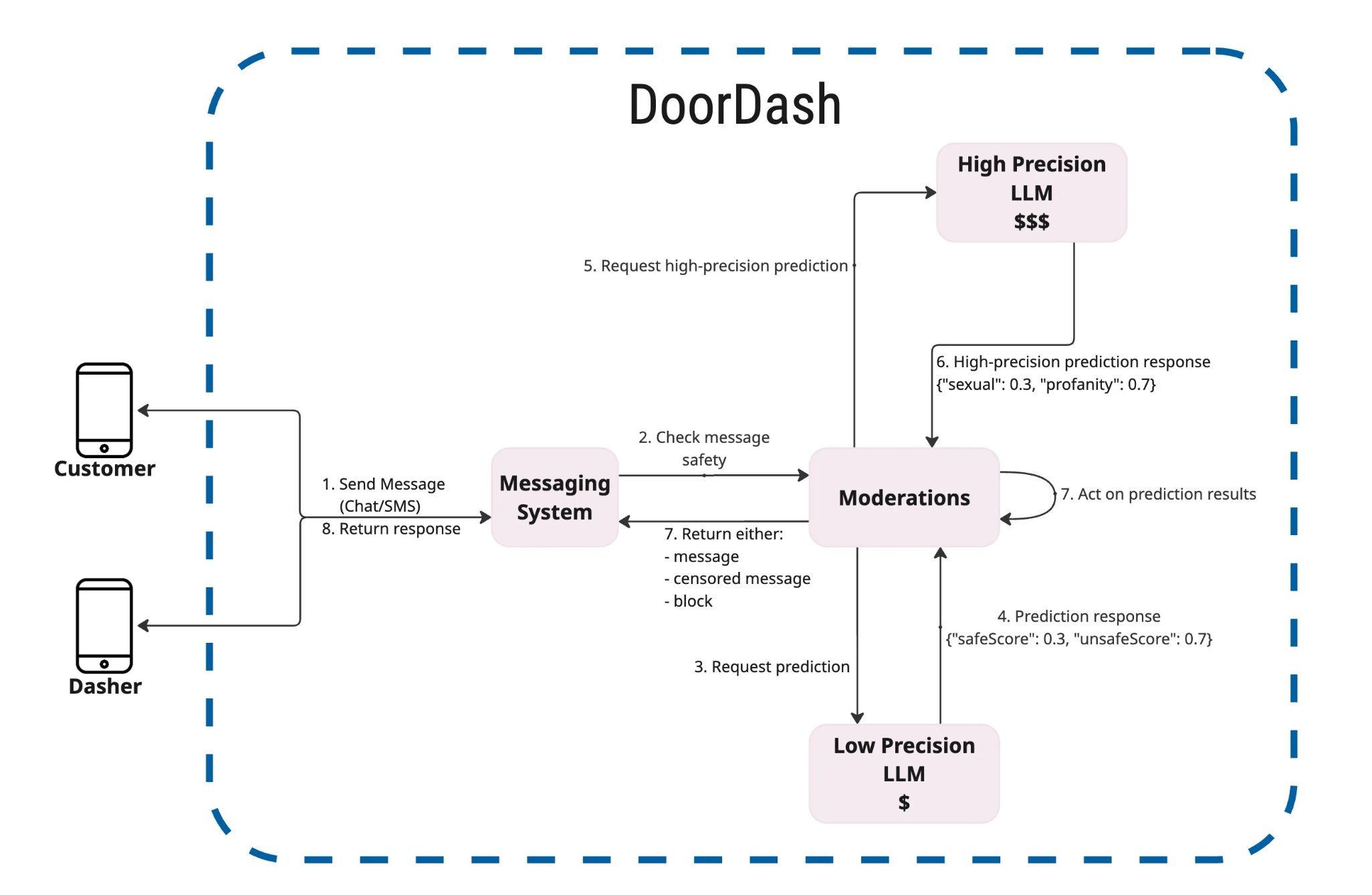

Phase 2: The Two-Layer Optimization

Building on learnings from Phase 1, DoorDash trained an internal model on approximately 10 million messages from the initial deployment. This internal model became the new first layer, automatically clearing most messages with improved accuracy. Only flagged messages advanced to the precise LLM for detailed scoring.

The performance improvements were significant:

- Layer 1 responses occur in under 300 milliseconds

- Flagged messages may take up to three seconds for full processing

- The system handles 99.8% of traffic with the internal model, dramatically improving scalability and reducing costs
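A quick back-of-the-envelope calculation shows why these numbers matter together. Assuming cleared messages finish within 300 ms and flagged ones within 3 s end to end, the mean latency is bounded by a weighted average dominated by the fast path. The daily volume below is a hypothetical figure chosen for illustration, not one DoorDash has published.

```python
def blended_latency_upper_bound(cleared_fraction: float,
                                fast_path_s: float, slow_path_s: float) -> float:
    """Upper bound on mean per-message latency for a two-layer cascade,
    assuming cleared messages finish within fast_path_s and flagged
    messages finish within slow_path_s end to end."""
    return cleared_fraction * fast_path_s + (1.0 - cleared_fraction) * slow_path_s

def messages_escalated(daily_messages: int, cleared_fraction: float) -> int:
    """Expected number of messages per day that reach the precise LLM."""
    return round(daily_messages * (1.0 - cleared_fraction))

# Figures from the article: 99.8% cleared, under 300 ms fast path, up to 3 s when flagged.
MEAN_LATENCY_BOUND_S = blended_latency_upper_bound(0.998, 0.3, 3.0)
# 5 million messages/day is a hypothetical volume, not a DoorDash figure.
ESCALATED_PER_DAY = messages_escalated(5_000_000, 0.998)
```

The bound works out to about 305 ms, and at the hypothetical volume only about 10,000 messages a day (1 in 500) would reach the expensive model. The 3 s path shapes the latency tail rather than the average.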

DoorDash's two-layer text moderation architecture (Source: DoorDash Tech Blog)

Image and Voice Processing

Image Moderation: DoorDash selected computer vision models specifically for throughput and granularity. The engineering team tuned thresholds and confidence scores through iterative human review to reduce both false positives and false negatives. The system processes hundreds of thousands of images daily while maintaining latency compatible with live interactions.
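One common way to turn human review into a threshold is to pick the lowest score cutoff that still meets a target precision on reviewed samples, since recall can only fall as the cutoff rises. The sketch below assumes a labeled sample of (model score, reviewer verdict) pairs; the data and the target values are hypothetical, and the article does not say which criterion DoorDash actually optimized.

```python
from typing import List, Optional, Tuple

def pick_threshold(reviewed: List[Tuple[float, bool]],
                   target_precision: float) -> Optional[float]:
    """Return the lowest score threshold whose precision on human-reviewed
    examples meets target_precision. Lower thresholds flag more content,
    so the first qualifying threshold in ascending order maximizes recall."""
    for t in sorted({score for score, _ in reviewed}):
        flagged = [is_unsafe for score, is_unsafe in reviewed if score >= t]
        if flagged and sum(flagged) / len(flagged) >= target_precision:
            return t
    return None  # no threshold meets the target on this sample

# Hypothetical reviewed sample: (model confidence, reviewer judged it unsafe).
REVIEWED = [(0.95, True), (0.9, True), (0.8, False), (0.7, True), (0.4, False), (0.2, False)]
```

On this sample, a 0.75 precision target yields a threshold of 0.7 (catching all three unsafe images), while a 0.9 target pushes the threshold up to 0.9 and misses one. Re-running the search as new review labels arrive is one way to make the tuning iterative.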

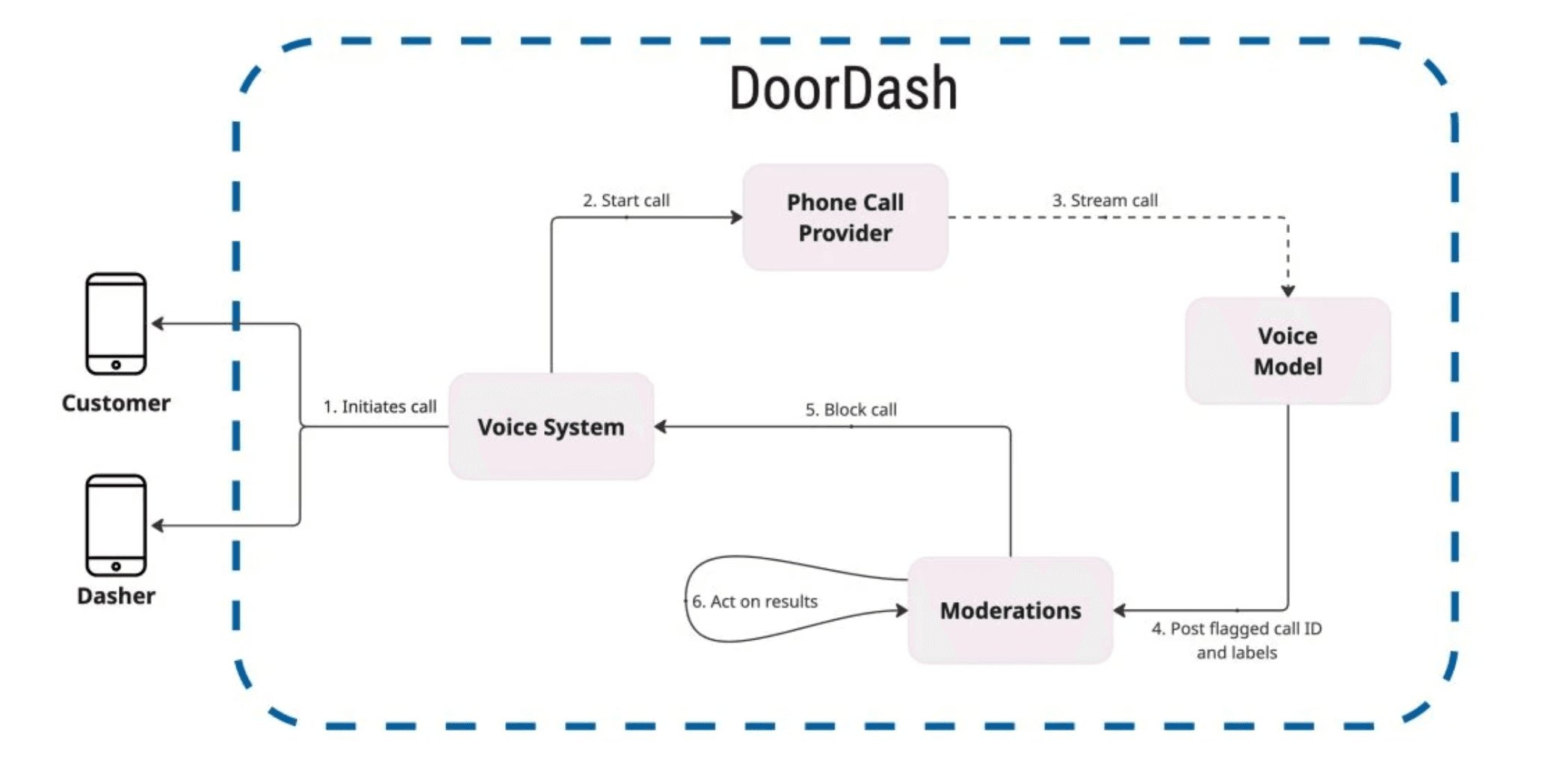

Voice Moderation: This channel presented unique challenges. DoorDash initially deployed voice moderation in observe-only mode to calibrate confidence scores. Once thresholds were validated through this observational period, the system could take automated actions such as interrupting calls or restricting future communications.
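Observe-only (shadow) mode can be reduced to a single flag in front of the enforcement step: the model still scores every call and the would-be decision is logged, but no action is taken until the flag is flipped. This is a hypothetical sketch; the class name, the threshold, and the `interrupt_call` action label are illustrative assumptions.

```python
from dataclasses import dataclass, field
from typing import List, Tuple

@dataclass
class ShadowModeGate:
    threshold: float
    observe_only: bool = True  # start in observe-only (shadow) mode
    # (score, would_have_acted) pairs kept for later threshold calibration.
    decisions: List[Tuple[float, bool]] = field(default_factory=list)

    def handle(self, score: float) -> str:
        would_act = score >= self.threshold
        self.decisions.append((score, would_act))
        if would_act and not self.observe_only:
            return "interrupt_call"  # hypothetical action name
        return "no_action"

# Log decisions in shadow mode, then enable enforcement once thresholds are validated.
gate = ShadowModeGate(threshold=0.8)
shadow_result = gate.handle(0.9)  # logged, but the call is left alone
gate.observe_only = False
live_result = gate.handle(0.9)    # the same score now triggers an action
```

Because the logged decisions record what the system would have done, they can be compared against human judgments before any caller is affected.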

DoorDash voice moderation architecture (Source: DoorDash Tech Blog)

Business Impact: Measurable Safety Improvements

The results speak to the system's effectiveness. According to DoorDash, SafeChat has contributed to a roughly 50% reduction in low- and medium-severity safety incidents since deployment. This represents a substantial improvement in platform safety without requiring proportional increases in human moderation staff.

The enforcement layer applies proportionate actions based on violation severity and recurrence:

- Lower-severity or first-time violations: Block or redact unsafe messages

- Repeated or more serious violations: Terminate calls, restrict communications, or escalate to human safety agents

- Severe or persistent violations: Trigger account reviews or suspensions

This graduated response system ensures appropriate consequences while avoiding overly harsh penalties for minor infractions.
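A graduated policy like this is naturally a small function of severity and prior history. The sketch below is hypothetical: the severity levels, the `review_after` cutoff of three prior violations, and the action labels are illustrative assumptions, not DoorDash's published rules.

```python
from enum import IntEnum

class Severity(IntEnum):
    LOW = 1
    MEDIUM = 2
    HIGH = 3

def enforcement_action(severity: Severity, prior_violations: int,
                       review_after: int = 3) -> str:
    """Map a violation to a proportionate action (hypothetical policy)."""
    # Severe or persistently repeated violations go to account review.
    if severity == Severity.HIGH or prior_violations >= review_after:
        return "account_review"
    # Repeat offenders get channel-level restrictions and human escalation.
    if prior_violations >= 1:
        return "restrict_and_escalate"
    # A first, lower-severity offense is handled at the message level.
    return "block_or_redact"
```

Keeping the policy separate from the models means thresholds and escalation rules can change without retraining anything.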

Technical Considerations for Implementation

Cost Optimization Strategy

DoorDash's layered approach demonstrates a practical cost-optimization strategy for AI moderation:

- High-recall, low-cost filters handle the majority of traffic

- Mid-tier models provide additional precision for ambiguous cases

- High-precision, high-cost models process only the smallest subset of flagged content

This architecture is particularly relevant for platforms processing millions of daily interactions where per-message processing costs would otherwise be prohibitive.
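The savings follow from simple expected-value arithmetic: every message pays for the first layer, but each later layer is paid only by the fraction escalated to it. The per-call costs below are hypothetical relative units (1 : 10 : 100). The 10% escalation rate mirrors the ~90% first-layer clearance, and treating 0.2% as the second layer's escalation rate is an assumption about how the 99.8% figure was measured.

```python
from typing import List, Tuple

def cascade_cost_per_message(layers: List[Tuple[float, float]]) -> float:
    """Expected cost per message for a moderation cascade.

    layers: (cost_per_call, fraction_escalated) in cascade order. Every message
    pays for the first layer; each later layer is paid only by the fraction
    of traffic that the earlier layers escalated.
    """
    total, reaching = 0.0, 1.0
    for cost, escalated in layers:
        total += reaching * cost
        reaching *= escalated
    return total

# Hypothetical relative costs; the last layer escalates nothing further.
THREE_LAYER = [(1.0, 0.10), (10.0, 0.002), (100.0, 0.0)]
CASCADE_COST = cascade_cost_per_message(THREE_LAYER)
PRECISE_ONLY_COST = cascade_cost_per_message([(100.0, 0.0)])
```

Under these assumptions the cascade costs about 2.02 units per message versus 100 for sending everything to the precise model, roughly a 50x difference. Most of the remaining cost comes from the first two layers, which is why replacing them with a cheaper internal model pays off.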

Latency vs. Accuracy Trade-offs

The system's design acknowledges that different communication channels have different latency requirements:

- Text chat: Most messages clear in under 300 ms, and flagged messages can take up to 3 s

- Voice calls: Require real-time or near-real-time processing

- Image uploads: Need processing before content becomes visible
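Per-channel latency requirements can be enforced by wrapping each moderation check in a timeout and deciding in advance what happens when the budget runs out. In this sketch, only the 3 s text figure comes from the article. The voice and image budgets are illustrative, and so is the fallback policy: fail closed for images, which should not become visible unchecked, and fail open with later review for text and voice.

```python
import asyncio
from typing import Awaitable, Callable, Dict

# Hypothetical per-channel budgets in seconds (only the text value echoes the article).
BUDGET_S: Dict[str, float] = {"text": 3.0, "voice": 0.5, "image": 1.0}

# Hypothetical fallback actions when a check overruns its budget.
ON_TIMEOUT: Dict[str, str] = {
    "text": "deliver_and_review_later",
    "voice": "continue_and_review_later",
    "image": "hold_until_reviewed",
}

async def moderate_within_budget(channel: str,
                                 check: Callable[[], Awaitable[str]]) -> str:
    """Run a moderation check, falling back to the channel's policy on timeout."""
    try:
        return await asyncio.wait_for(check(), timeout=BUDGET_S[channel])
    except asyncio.TimeoutError:
        return ON_TIMEOUT[channel]

async def quick_check() -> str:
    return "allow"

async def slow_check() -> str:
    await asyncio.sleep(1.0)  # simulates a model call slower than the voice budget
    return "allow"
```

For example, `asyncio.run(moderate_within_budget("voice", slow_check))` gives up after 0.5 s and returns the voice fallback. Whether to fail open or closed per channel is a product decision the article does not specify.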

DoorDash's observe-only mode for voice moderation shows a pragmatic approach to deploying safety systems where immediate automated action might be premature. By first gathering data on confidence scores and thresholds, the team could validate the system's behavior before enabling automated interventions.

Human-in-the-Loop Design

Despite the automation, SafeChat maintains human oversight through escalation pathways. This is crucial for edge cases where AI models might struggle, such as cultural context, sarcasm, or nuanced threats. The system's effectiveness likely depends on this feedback loop, where human safety agents review escalated cases and provide training data for model improvement.
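A simple way to wire this loop is to auto-decide only at confident scores, queue the uncertain middle band for human agents, and store their verdicts as labeled examples for the next training run. The band edges (0.3 and 0.9), class name, and route labels below are hypothetical; the article confirms the escalation path but not how it is implemented.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Deque, List, Tuple

@dataclass
class ReviewLoop:
    low: float = 0.3   # below this score: allow automatically (hypothetical)
    high: float = 0.9  # at or above this score: enforce automatically (hypothetical)
    queue: Deque[Tuple[str, float]] = field(default_factory=deque)
    training_data: List[Tuple[str, bool]] = field(default_factory=list)

    def route(self, message: str, score: float) -> str:
        if score >= self.high:
            return "auto_enforce"
        if score < self.low:
            return "auto_allow"
        self.queue.append((message, score))  # uncertain: ask a human
        return "escalated"

    def record_review(self, is_unsafe: bool) -> None:
        # Pop the oldest escalated case and keep the verdict as a training label.
        message, _ = self.queue.popleft()
        self.training_data.append((message, is_unsafe))

loop = ReviewLoop()
routes = [loop.route(m, s) for m, s in
          [("on my way", 0.05), ("you'll regret this", 0.6), ("explicit threat", 0.97)]]
loop.record_review(is_unsafe=True)
```

The ambiguous cases that models handle worst, such as sarcasm or veiled threats, are exactly the ones that end up in `training_data`, which is what makes the loop useful for model improvement.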

Broader Implications for Platform Safety

DoorDash's SafeChat implementation offers several lessons for other platforms considering similar systems:

1. Start with High-Recall, Low-Cost Filters: Use inexpensive filters, such as the moderation API DoorDash used as its first layer, to clear obviously safe traffic before investing in complex models.

2. Iterate Based on Real Data: The move from a three-layer to a two-layer architecture came from training an internal model on roughly 10 million production messages rather than on hypothetical examples.

3. Consider Channel-Specific Requirements: Text, image, and voice each need different approaches, thresholds, and latency considerations.

4. Balance Automation with Human Oversight: Even sophisticated AI systems benefit from human review, especially for edge cases and model improvement.

5. Measure What Matters: DoorDash focused on reducing safety incidents rather than just improving model accuracy metrics, aligning technical work with business outcomes.

Challenges and Limitations

While the reported roughly 50% reduction in low- and medium-severity incidents is impressive, the system likely faces ongoing challenges:

- False Positives: Overly aggressive moderation could frustrate users and create unnecessary friction

- Cultural Nuances: Models trained on one demographic may struggle with communication styles from different regions or cultures

- Adversarial Behavior: Bad actors may adapt their language to evade detection

- Privacy Considerations: Processing voice calls and private messages requires careful attention to data handling and user consent

DoorDash's iterative approach suggests they're continuously refining the system, but these challenges remain relevant for any platform deploying AI moderation.

Conclusion

DoorDash's SafeChat demonstrates that AI-driven safety systems can operate effectively at scale when designed with careful attention to cost, latency, and accuracy trade-offs. The multi-layered architecture provides a blueprint for other platforms looking to implement similar systems, showing how to start simple and evolve based on real-world data.

The roughly 50% reduction in low- and medium-severity safety incidents represents not just a technical achievement but a meaningful improvement in user experience and platform trust. As more platforms grapple with safety challenges, DoorDash's approach offers a practical example of how AI can be applied to protect users without sacrificing performance or scalability.

For engineers and architects considering similar implementations, the key takeaway is that successful AI moderation requires more than just good models—it demands thoughtful system design, continuous iteration, and a clear understanding of the specific safety challenges your platform faces.

For more details on DoorDash's technical implementation, see their official tech blog and related engineering publications.
