PJM Interconnection, the grid operator for the Mid-Atlantic and parts of the Midwest, is planning to require large data centers to either build their own power generation or commit to curtailing their electricity use during peak demand periods. The move is a direct response to the unprecedented surge in power consumption from AI data centers, which threatens grid stability and could force the operator to implement rolling blackouts.

The grid operator PJM Interconnection unveiled a plan on Friday designed to manage the surging power demand from data centers that Big Tech companies need to train and run AI models. The proposal, detailed in a press release, would require new large data centers—those requesting 25 megawatts or more of power—to either bring their own dedicated generation (like natural gas turbines or fuel cells) or agree to curtail their electricity use when the grid is under stress.

This isn't a theoretical exercise. PJM, which serves 65 million people across 13 states and the District of Columbia, has seen its projected power demand skyrocket. In its 2022 forecast, it estimated a 2.4% annual load growth through 2032. By 2024, that projection jumped to 4.5% annually, driven almost entirely by data center construction in Northern Virginia and Ohio. PJM's own analysis shows that without intervention, the region could face capacity shortfalls by 2030.
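
To get a feel for how far apart the two forecasts are, the growth rates can be compounded over the same period. The sketch below is illustrative arithmetic only: the starting load is normalized to 100 and the ten-year horizon is an assumption, neither taken from PJM's forecast documents.

```python
def compound_growth(base: float, annual_rate: float, years: int) -> float:
    """Return the load after compounding annual_rate for the given number of years."""
    return base * (1.0 + annual_rate) ** years


BASE_LOAD = 100.0  # normalized starting load (assumption, not a PJM figure)
YEARS = 10         # assumed horizon for comparison

old = compound_growth(BASE_LOAD, 0.024, YEARS)  # 2022 forecast rate
new = compound_growth(BASE_LOAD, 0.045, YEARS)  # 2024 forecast rate

print(f"2.4% per year for {YEARS} years: {old:.1f}")
print(f"4.5% per year for {YEARS} years: {new:.1f}")
```

Under these assumptions, the older rate implies roughly 27% total growth over a decade, while the newer rate implies roughly 55%, about double the added load.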

What's Actually Changing

The core of the proposal is a new category for "non-firm" load. Currently, most electricity customers have a "firm" connection, meaning they are guaranteed power unless there's a catastrophic grid failure. The new rules would create a pathway for data centers to connect as "non-firm" customers, accepting that their power can be cut during emergencies. In exchange, they would pay lower rates and avoid the multi-year wait times for new grid connections.

PJM's plan also includes a "must-run" provision for any new generation built specifically to serve a data center. If that dedicated generator fails, the data center would be required to curtail its load immediately, rather than relying on the broader grid as a backup. This prevents a single point of failure from cascading into a larger outage.
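
The connection categories described above can be read as a simple decision rule. The following is a toy model, not PJM's tariff language: the `Connection` names, the `emergency_cap_mw` parameter, and the idea that a site with dedicated generation is limited to exactly what its generator produces are all simplifying assumptions made for illustration.

```python
from enum import Enum


class Connection(Enum):
    FIRM = "firm"                      # served unless the grid itself fails
    NON_FIRM = "non_firm"              # can be cut during grid emergencies
    OWN_GENERATION = "own_generation"  # backed by a dedicated generator


def allowed_load_mw(
    requested_mw: float,
    connection: Connection,
    grid_emergency: bool,
    onsite_gen_mw: float = 0.0,
    emergency_cap_mw: float = 0.0,
) -> float:
    """Return how much load a site may run under this simplified rule set."""
    if connection is Connection.OWN_GENERATION:
        # Assumed "must-run" reading: the site cannot lean on the grid,
        # so its load is capped by what its own generator is producing.
        return min(requested_mw, onsite_gen_mw)
    if connection is Connection.NON_FIRM and grid_emergency:
        # Hypothetical cap applied to non-firm load during an emergency.
        return min(requested_mw, emergency_cap_mw)
    return requested_mw


print(allowed_load_mw(100.0, Connection.FIRM, grid_emergency=True))
print(allowed_load_mw(100.0, Connection.NON_FIRM, grid_emergency=True))
print(allowed_load_mw(100.0, Connection.OWN_GENERATION, False, onsite_gen_mw=60.0))
```

The point of the sketch is the asymmetry: a firm customer is unaffected by an emergency, a non-firm customer is cut back, and a site with its own generation loses load whenever that generator does, regardless of grid conditions.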

The operator is also proposing a new "Data Center Load Flexibility" program, which would pay data centers to voluntarily reduce their consumption during peak demand events. This is similar to existing programs for industrial customers but tailored to the unique, batch-processing nature of AI training workloads, which can often be paused or shifted in time.
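
The claim that training workloads can be shifted in time can be made concrete with a minimal scheduling sketch. It assumes a pausable job measured in whole hours and a hypothetical evening peak window; real programs would work from actual grid signals and prices, which are not modeled here.

```python
from __future__ import annotations


def schedule_deferrable(
    hours_needed: int, peak_hours: set[int], horizon: int = 24
) -> list[int]:
    """Pick the earliest off-peak hours in which to run a pausable job."""
    off_peak = [h for h in range(horizon) if h not in peak_hours]
    if hours_needed > len(off_peak):
        raise ValueError("not enough off-peak hours in the horizon")
    return off_peak[:hours_needed]


PEAK = set(range(16, 21))  # hypothetical peak window: 16:00 through 20:59

plan = schedule_deferrable(hours_needed=18, peak_hours=PEAK)
print(plan)
```

In this example the job runs from hour 0 through hour 15, pauses for the five peak hours, and resumes at hours 21 and 22. An inference service that must answer users in real time has no equivalent slack, which is why flexibility programs fit training better than serving.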

The Underlying Problem: AI's Insatiable Appetite

The urgency stems from the physical reality of AI model training. Training a large language model like GPT-4 requires tens of thousands of GPUs running continuously for weeks. A single rack of NVIDIA H100 GPUs can draw 30-40 kilowatts, and modern AI data centers contain tens of thousands of racks. The power density is unprecedented; traditional enterprise data centers might use 5-10 kW per rack.
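
The per-rack figures translate into facility-level demand with simple multiplication. In the sketch below the rack count is hypothetical, chosen only to make the comparison easy to read, and the result covers IT load alone, excluding cooling and other overhead.

```python
def it_load_mw(racks: int, kw_per_rack: float) -> float:
    """Total IT load in megawatts for a given rack count and per-rack draw."""
    return racks * kw_per_rack / 1000.0


RACKS = 2_500  # hypothetical facility size, not a figure from the article

for label, kw in [("enterprise, 10 kW per rack", 10.0), ("AI, 40 kW per rack", 40.0)]:
    print(f"{label}: {it_load_mw(RACKS, kw):.0f} MW")
```

With the same number of racks, the enterprise facility lands at 25 MW, which is the threshold in PJM's proposal, while the AI facility reaches 100 MW.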

This isn't just about raw power draw. AI workloads are also hard to forecast. Training runs can be scheduled, but inference (running the model for users) has to run around the clock and rises and falls with user demand. The rise of AI agents and automated systems means power demand is becoming less predictable. PJM's existing planning models, built around more stable industrial and residential loads, are struggling to keep up.

The situation is exacerbated by the slow pace of new power generation. Building a natural gas plant can take 5-7 years, while a data center can be constructed in 18-24 months. This mismatch creates a dangerous lag where demand outstrips supply. PJM's queue for new generation interconnection requests is over 2,000 projects long, with wait times stretching to a decade.

Limitations and Industry Pushback

The proposal has drawn immediate criticism from data center developers and Big Tech companies. The core complaint is that building dedicated on-site generation is prohibitively expensive and logistically complex. A 100 MW data center would need a dedicated power plant with enough capacity to supply tens of thousands of homes, requiring permits, fuel supply contracts, and emissions controls that can take years to secure.

Companies like Amazon, Google, and Microsoft have made public clean-energy and emissions commitments, some with 2030 targets. On-site natural gas turbines would directly contradict those goals. While some companies are exploring fuel cells and battery storage, the technology isn't yet scalable to the multi-gigawatt levels required for hyperscale data centers.

There's also a fairness question. PJM's rules would apply to new data centers, but what about existing ones? The operator is also considering a "load reduction" mandate for all large data centers, which could force existing facilities to invest in expensive retrofits or face disconnection. This has created uncertainty for companies that have already invested billions in the region.

From a grid stability perspective, the plan has merit. Decentralized generation and load flexibility are proven strategies for managing peak demand. However, the scale required for AI data centers is untested. A single 100 MW data center going offline during a curtailment event could create its own voltage instability if not managed carefully.

What Comes Next

PJM's proposal is currently in the stakeholder feedback phase. The operator will hold a series of meetings through early 2026 before submitting a final tariff to the Federal Energy Regulatory Commission (FERC) for approval. If adopted, the rules could take effect in late 2026 or early 2027.

The outcome will have national implications. PJM is the largest grid operator in the U.S., and its rules often become a template for other regions facing similar pressures. California's CAISO and Texas's ERCOT are watching closely, as both are also grappling with data center power demand.

For the AI industry, this represents a new constraint on growth. The cost of compute isn't just about GPU prices and electricity rates anymore; it's also about securing a reliable power connection. Companies may start shifting data center construction to regions with more robust grids or to states with abundant natural gas reserves, even if it means moving away from traditional tech hubs.

The debate also highlights a fundamental tension in the energy transition. The same AI technology that's driving the need for more power is also being used to optimize grid operations and accelerate renewable energy deployment. PJM itself uses AI for load forecasting and grid balancing. The question is whether these tools can scale fast enough to keep the lights on.

In the meantime, data center developers are exploring creative solutions. Some are partnering with utilities to build new transmission lines. Others are looking at nuclear power, with small modular reactors (SMRs) being touted as a potential long-term solution. But most SMR designs are still working through licensing and demonstration, and they are not expected to be available at scale until the 2030s.

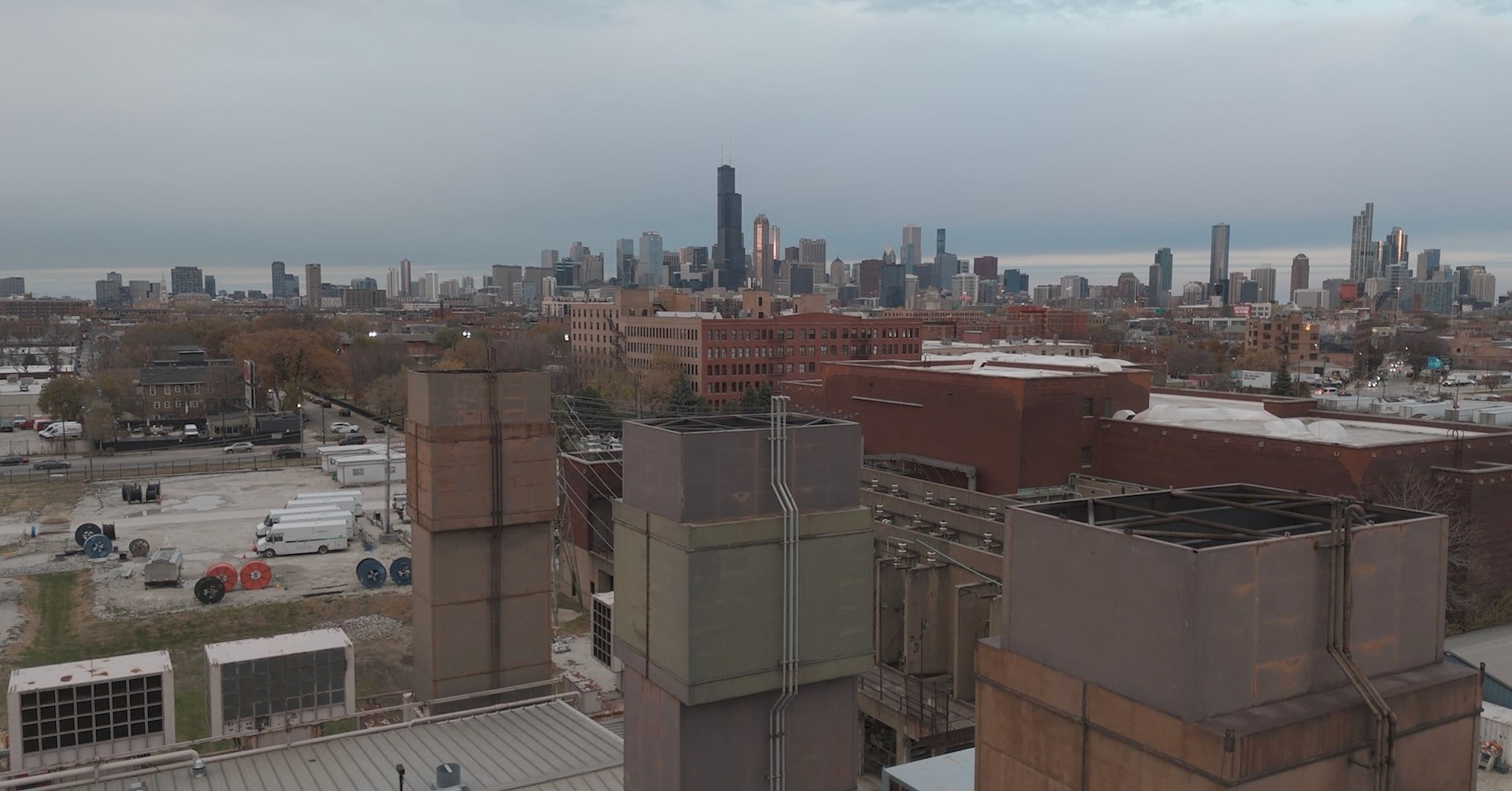

The PJM proposal is a stark reminder that the AI revolution has a physical backbone. The cloud isn't an abstract concept; it's a collection of concrete buildings drawing megawatts of power from a grid that wasn't designed for this level of demand. The next few years will determine whether the grid can adapt, or if the AI boom will hit a hard power limit.
