A new fork of the Tinygrad machine learning framework demonstrates path tracing capabilities, showcasing neural rendering potential in a lightweight codebase.

A new experimental fork of the Tinygrad machine learning framework has emerged, focusing specifically on path tracing and neural rendering capabilities. The gtinygrad repository, created by developer quantbagel, positions itself as a "minimal tinygrad path tracing playground" that explores the intersection of differentiable programming and computer graphics.

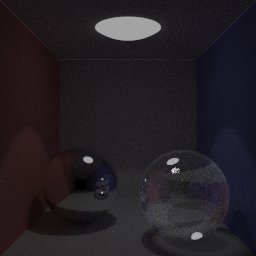

Unlike the parent Tinygrad project, which prioritizes simplicity while implementing core deep learning operations, gtinygrad modifies the framework specifically to support ray tracing operations. The most notable demonstration is a path-traced render of the Cornell Box, a standard test scene in computer graphics for checking how a renderer handles global illumination. The implementation lives in the examples/raytrace_demo.py script and produces images like this:

Cornell Box rendering created with gtinygrad (Source: quantbagel/gtinygrad)
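The article does not reproduce the demo's code, and a full Cornell Box scene is too long to show inline. As a hedged, plain-Python sketch of the core Monte Carlo path tracing loop that this kind of renderer runs (ray intersection, cosine-weighted bounce sampling, throughput tracking, and Russian roulette termination), the example below renders a "furnace" scene instead: a camera inside a closed, emissive, diffuse sphere, where the exact radiance is emission / (1 - albedo). None of this is gtinygrad code, and every function name here is hypothetical.

```python
import math
import random


# Minimal 3-vector helpers on plain tuples.
def add(a, b): return (a[0] + b[0], a[1] + b[1], a[2] + b[2])
def scale(a, s): return (a[0] * s, a[1] * s, a[2] * s)
def dot(a, b): return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]
def cross(a, b): return (a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0])
def normalize(a): return scale(a, 1.0 / math.sqrt(dot(a, a)))


def intersect_sphere(origin, direction, radius):
    """Smallest t > eps where origin + t*direction hits a sphere centered at (0,0,0).

    Assumes `direction` is unit length, so the quadratic's leading coefficient is 1.
    """
    b = dot(origin, direction)
    c = dot(origin, origin) - radius * radius
    disc = b * b - c
    if disc < 0.0:
        return None
    sq = math.sqrt(disc)
    for t in (-b - sq, -b + sq):
        if t > 1e-6:  # skip the self-intersection at the ray's own start point
            return t
    return None


def cosine_sample_hemisphere(normal, rng):
    """Cosine-weighted unit direction around `normal` (Malley's method)."""
    u1, u2 = rng.random(), rng.random()
    r, phi = math.sqrt(u1), 2.0 * math.pi * u2
    x, y, z = r * math.cos(phi), r * math.sin(phi), math.sqrt(1.0 - u1)
    # Orthonormal basis (tangent, bitangent, normal) around the surface normal.
    helper = (1.0, 0.0, 0.0) if abs(normal[0]) < 0.9 else (0.0, 1.0, 0.0)
    tangent = normalize(cross(helper, normal))
    bitangent = cross(normal, tangent)
    return add(add(scale(tangent, x), scale(bitangent, y)), scale(normal, z))


def trace_path(origin, direction, radius, albedo, emission, rng, max_depth=64):
    """One-sample radiance estimate along a ray inside the emissive furnace sphere."""
    radiance, throughput = 0.0, 1.0
    for depth in range(max_depth):
        t = intersect_sphere(origin, direction, radius)
        if t is None:
            break  # only numerically grazing rays can miss the enclosing sphere
        hit = add(origin, scale(direction, t))
        normal = normalize(scale(hit, -1.0))  # inward-facing normal: we are inside
        radiance += throughput * emission  # light emitted at this path vertex
        # Lambertian BRDF (albedo/pi) with a cosine pdf (cos/pi): BRDF*cos/pdf == albedo.
        throughput *= albedo
        if depth >= 3:  # Russian roulette: stop long paths randomly, reweight survivors
            survive = min(throughput, 0.95)
            if rng.random() >= survive:
                break
            throughput /= survive
        origin, direction = hit, cosine_sample_hemisphere(normal, rng)
    return radiance


def render_furnace(n_paths=20_000, radius=1.0, albedo=0.5, emission=1.0, seed=0):
    """Average many path samples for a camera at the sphere's center."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n_paths):
        direction = normalize((rng.gauss(0, 1), rng.gauss(0, 1), rng.gauss(0, 1)))
        total += trace_path((0.0, 0.0, 0.0), direction, radius, albedo, emission, rng)
    return total / n_paths


print(render_furnace())  # should be close to emission / (1 - albedo) = 2.0
```

The furnace scene is a common sanity check for path tracers because any bias shows up as a deviation from the analytic value. A Cornell Box adds walls, an area light, and colored surfaces, but the per-path loop is structurally similar.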


Technically, gtinygrad keeps Tinygrad's core philosophy of minimalism while extending its capabilities into computer graphics. The fork shows how automatic differentiation, typically used for training neural networks, can be repurposed for optimizing light transport simulations. According to the commit history, recent changes include header simplifications and AMD-specific testing flags such as AMD_DISABLE_SDMA, suggesting exploration of hardware acceleration paths.
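The article does not show how gtinygrad wires autodiff into its renderer. As a hedged illustration of the general idea only, the sketch below differentiates a toy one-pixel "renderer" with respect to surface albedo and then fits the albedo to a target pixel by gradient descent. It deliberately swaps in forward-mode dual numbers rather than reverse-mode autograd like Tinygrad's, and `Dual`, `render_pixel`, and `fit_albedo` are hypothetical names, not part of gtinygrad or Tinygrad.

```python
from dataclasses import dataclass


@dataclass
class Dual:
    """Forward-mode AD number val + der*eps, with eps**2 == 0."""
    val: float
    der: float = 0.0

    def _lift(self, other):
        return other if isinstance(other, Dual) else Dual(float(other))

    def __add__(self, other):
        o = self._lift(other)
        return Dual(self.val + o.val, self.der + o.der)

    def __sub__(self, other):
        o = self._lift(other)
        return Dual(self.val - o.val, self.der - o.der)

    def __mul__(self, other):
        o = self._lift(other)  # product rule
        return Dual(self.val * o.val, self.der * o.val + self.val * o.der)

    __rmul__ = __mul__


def render_pixel(albedo, emission=1.0, bounces=32):
    """Toy furnace 'renderer': each bounce adds emitted light, then scales throughput by albedo."""
    radiance, throughput = Dual(0.0), Dual(1.0)
    for _ in range(bounces):
        radiance = radiance + throughput * emission
        throughput = throughput * albedo
    return radiance


def fit_albedo(target, init=0.2, lr=0.01, steps=500):
    """Gradient descent on the squared pixel error, with gradients from dual numbers."""
    albedo = init
    for _ in range(steps):
        out = render_pixel(Dual(albedo, 1.0))  # seed d(albedo)/d(albedo) = 1
        loss = (out - target) * (out - target)
        albedo -= lr * loss.der
    return albedo


target = render_pixel(Dual(0.6)).val  # "ground truth" pixel rendered with albedo 0.6
print(round(fit_albedo(target), 4))  # 0.6
```

Real differentiable renderers also have to handle the Monte Carlo noise and visibility discontinuities that this deterministic toy avoids.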

Several limitations deserve note:

- Experimental Status: The project remains a playground rather than production-ready code

- Divergence Risk: Currently 14 commits ahead of mainline Tinygrad but 43 commits behind it

- Performance Constraints: Path tracing remains computationally intensive, even with GPU acceleration

- Feature Scope: Focuses on rendering rather than Tinygrad's broader ML capabilities
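To put the performance point in perspective, a back-of-envelope count of rays per frame helps. The resolution, sample count, and bounce limit below are hypothetical settings chosen for illustration, not gtinygrad's defaults, and the result is an upper bound because paths can terminate early.

```python
def max_ray_segments(width: int, height: int, samples_per_pixel: int, max_bounces: int) -> int:
    """Upper bound on ray-scene intersection queries for one frame.

    Each pixel traces `samples_per_pixel` paths, and each path casts at most
    `max_bounces` rays before it ends (extra shadow rays are not counted).
    """
    return width * height * samples_per_pixel * max_bounces


# Hypothetical settings, chosen only to illustrate the scale.
segments = max_ray_segments(512, 512, 256, 5)
print(f"{segments:,} ray segments per frame")  # 335,544,320 ray segments per frame
```

Every factor multiplies, so doubling either the resolution along one axis or the sample count doubles the work.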

The codebase keeps Tinygrad's accessible structure (62% Python, 27% C) while adding visualization-specific components. For those interested in differentiable rendering techniques, gtinygrad offers a practical starting point that is more approachable than industrial-scale frameworks such as PyTorch3D.

While not positioned as a competitor to established rendering libraries, gtinygrad provides educational value by demonstrating how minimal autograd systems can extend beyond traditional machine learning applications. The project continues Tinygrad's tradition of keeping foundational concepts accessible, now applied to light transport problems.
