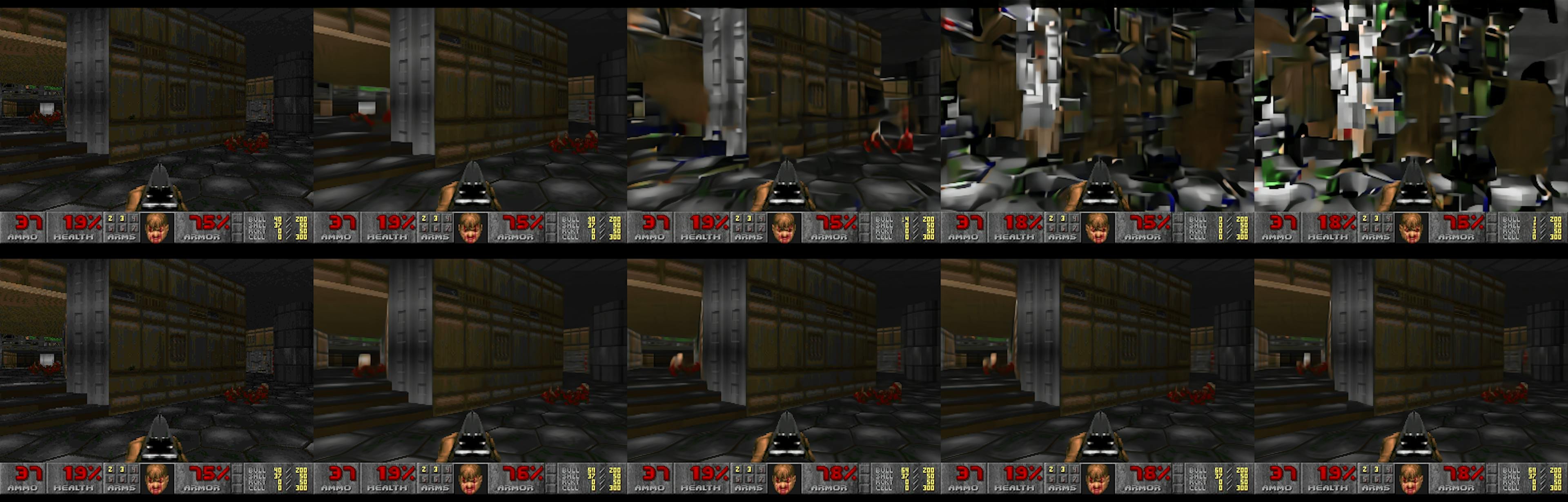

Researchers have developed a system that uses a reinforcement learning agent to generate training data for a diffusion model, enabling a real-time simulation of DOOM that is difficult to distinguish from actual gameplay.

The intersection of reinforcement learning and generative AI has produced a fascinating new approach to game simulation. A team of researchers has developed a system that trains an AI agent to play DOOM, then uses the resulting gameplay data to train a diffusion model that generates new game frames in real time, creating an entirely synthetic gaming experience.

The Core Innovation

The system, called GameNGen, works by first training a reinforcement learning agent to play a game environment. This agent explores the game world, makes decisions, and generates sequences of gameplay that serve as training data. The researchers use Proximal Policy Optimization (PPO) to train the agent, providing it with downscaled frame images and in-game maps at 160x120 resolution, along with the last 32 actions it performed.
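The article names PPO but not the objective it optimizes. As a reference point, here is a minimal Python sketch of the standard clipped surrogate objective that PPO maximizes on each batch of collected experience. The function name and the `clip_eps=0.2` default are assumptions for illustration (0.2 is a common default, not a value reported for GameNGen). Library implementations such as Stable Baselines 3 compute this over tensors and also add value-function and entropy terms.

```python
import math


def ppo_clipped_objective(new_logps, old_logps, advantages, clip_eps=0.2):
    """Mean PPO clipped surrogate objective (to be maximized).

    new_logps / old_logps: log-probabilities of the actions actually taken,
    under the current policy and the policy that collected the data.
    advantages: advantage estimates for those same actions.
    """
    total = 0.0
    for new_lp, old_lp, adv in zip(new_logps, old_logps, advantages):
        ratio = math.exp(new_lp - old_lp)  # pi_new(a|s) / pi_old(a|s)
        clipped = min(max(ratio, 1.0 - clip_eps), 1.0 + clip_eps)
        # Taking the minimum gives a pessimistic bound, so a single update
        # cannot profit from moving the policy far from the old one.
        total += min(ratio * adv, clipped * adv)
    return total / len(advantages)


# A ratio of 1.5 with a positive advantage is capped at 1 + clip_eps.
print(ppo_clipped_objective([math.log(1.5)], [0.0], [1.0]))  # prints 1.2
```

The clipping keeps each policy update small, which is one reason PPO is a common default for agents trained on many parallel game instances.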

Once sufficient gameplay data is collected, a generative diffusion model is trained on it. The model learns to predict the next frame in a game sequence from the preceding frames and the player's recent actions. The result is a system that generates new game content on the fly, without running the underlying game engine.
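Turning recorded gameplay into next-frame prediction examples amounts to sliding a window over each trajectory. The plain-Python sketch below assumes a hypothetical alignment in which `actions[t]` is the input given while `frames[t]` was on screen, so it leads to `frames[t + 1]`. The function name and data layout are illustrative and are not the researchers' code. Real frames would be image arrays (or their latent encodings) rather than strings.

```python
from typing import List, Sequence, Tuple


def make_training_examples(
    frames: Sequence,
    actions: Sequence,
    context_len: int,
) -> List[Tuple[tuple, tuple, object]]:
    """Slice one trajectory into (context_frames, context_actions, target_frame).

    Hypothetical alignment: actions[t] was taken while frames[t] was shown,
    so the last action in each context is the one that produced the target.
    """
    if len(actions) < len(frames) - 1:
        raise ValueError("need one action per frame transition")
    examples = []
    for t in range(context_len, len(frames)):
        context_frames = tuple(frames[t - context_len:t])    # what the model sees
        context_actions = tuple(actions[t - context_len:t])  # inputs leading up to frames[t]
        examples.append((context_frames, context_actions, frames[t]))
    return examples


for ex in make_training_examples(["f0", "f1", "f2", "f3"], [0, 1, 2], context_len=2):
    print(ex)
```

Each example pairs a fixed-length history with the single frame that followed it, which is the supervision signal for next-frame prediction.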

Technical Implementation

The reinforcement learning component uses a simple convolutional neural network as its feature extractor, with two-layer MLPs for the actor and critic heads. The agent is trained on CPU using the Stable Baselines 3 infrastructure, running 8 games in parallel, each with a replay buffer of 512. Training runs for a total of 10 million environment steps, and the resulting gameplay trajectories supply the training data for the diffusion model.
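The rollout numbers in this paragraph translate into a simple budget. Assume the 512-entry buffer is per game. That matches how `n_steps` works in Stable Baselines 3's PPO, where the rollout buffer holds `n_steps * n_envs` transitions. Under that assumption, each policy update consumes 8 × 512 transitions, and the 10-million-step run divides into a fixed number of collect-then-update iterations. The dataclass and its field names are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class AgentTrainingConfig:
    """Hypothetical container for the agent-training numbers in the text."""
    n_parallel_games: int = 8
    buffer_size_per_game: int = 512  # assumption: 512 steps per game per rollout
    total_env_steps: int = 10_000_000

    @property
    def steps_per_rollout(self) -> int:
        # Transitions gathered across all games before each policy update.
        return self.n_parallel_games * self.buffer_size_per_game

    @property
    def num_rollouts(self) -> int:
        # Collect-then-update iterations needed to reach the step budget.
        return math.ceil(self.total_env_steps / self.steps_per_rollout)


cfg = AgentTrainingConfig()
print(cfg.steps_per_rollout, cfg.num_rollouts)  # prints 4096 2442
```

If the 512 were instead a total across all games, each rollout would hold only 512 transitions and the run would need about eight times as many iterations.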

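The generative model uses Stable Diffusion 1.4 as its foundation, with all U-Net parameters unfrozen during training. The researchers use a batch size of 128 and a learning rate of 2e-5 with the Adafactor optimizer. They switch the diffusion loss to the v-prediction parameterization and add noise augmentation. During training, the context frames are corrupted with Gaussian noise of varying strength, up to a maximum level of 0.7. The noise level is discretized into 10 buckets and passed to the model as an embedding. This teaches the model to correct errors in its own earlier predictions, which limits the drift that otherwise builds up when frames are generated autoregressively.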
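Two of these training changes can be made concrete in a short sketch. v-prediction, the parameterization introduced in Salimans and Ho's progressive distillation work, trains the network to output `v = sqrt(alpha_bar) * eps - sqrt(1 - alpha_bar) * x0` instead of the noise `eps`. The clean sample can then be recovered exactly from the noisy sample and `v`. The `noise_augment` function is hypothetical. The article gives only the maximum level (0.7) and the bucket count (10), so uniform sampling of the level, additive Gaussian noise, and the bucketing rule are all assumptions.

```python
import math
import random
from typing import List, Tuple


def noisy_sample(x0: List[float], eps: List[float], alpha_bar: float) -> List[float]:
    """Forward diffusion: z_t = sqrt(abar) * x0 + sqrt(1 - abar) * eps."""
    a, s = math.sqrt(alpha_bar), math.sqrt(1.0 - alpha_bar)
    return [a * x + s * e for x, e in zip(x0, eps)]


def v_target(x0: List[float], eps: List[float], alpha_bar: float) -> List[float]:
    """v-prediction target: v = sqrt(abar) * eps - sqrt(1 - abar) * x0."""
    a, s = math.sqrt(alpha_bar), math.sqrt(1.0 - alpha_bar)
    return [a * e - s * x for x, e in zip(x0, eps)]


def x0_from_v(z_t: List[float], v: List[float], alpha_bar: float) -> List[float]:
    """Recover the clean sample: x0 = sqrt(abar) * z_t - sqrt(1 - abar) * v."""
    a, s = math.sqrt(alpha_bar), math.sqrt(1.0 - alpha_bar)
    return [a * z - s * vi for z, vi in zip(z_t, v)]


def noise_augment(
    context: List[List[float]],
    rng: random.Random,
    max_level: float = 0.7,
    num_buckets: int = 10,
) -> Tuple[List[List[float]], int]:
    """Hypothetical context-frame noise augmentation (frames as flat latents).

    Returns the corrupted context and the bucket index the model would embed.
    """
    if max_level <= 0:
        raise ValueError("max_level must be positive")
    level = rng.uniform(0.0, max_level)
    # Clamp in case uniform() returns exactly max_level.
    bucket = min(int(level / max_level * num_buckets), num_buckets - 1)
    noisy = [[x + level * rng.gauss(0.0, 1.0) for x in frame] for frame in context]
    return noisy, bucket


x0, eps = [0.5, -1.0], [0.1, 0.3]
z = noisy_sample(x0, eps, alpha_bar=0.64)
v = v_target(x0, eps, alpha_bar=0.64)
print(x0_from_v(z, v, alpha_bar=0.64))  # approximately [0.5, -1.0]
```

Giving the model the bucket index tells it how much to distrust its context, so at inference it can be run with a small noise level on its own past outputs.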

Training runs on 128 TPU-v5e devices with data parallelism and processes approximately 900 million frames in total. The context length is set to 64, meaning the model conditions on its own last 64 predictions along with the last 64 actions when generating each new frame.
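At inference time, a context length of 64 behaves like a pair of fixed-size sliding windows. Each new prediction is pushed into the frame window, and the oldest frame falls out. The sketch below uses `collections.deque` with `maxlen` to show this. `model` is a hypothetical stand-in for the diffusion sampler. Seeding the windows with initial frames and actions is an assumption about how a session starts.

```python
from collections import deque
from typing import Callable, List, Sequence

CONTEXT_LEN = 64  # frames and actions kept as context


def rollout(
    model: Callable[[List, List], object],
    initial_frames: Sequence,
    initial_actions: Sequence,
    player_actions: Sequence,
    context_len: int = CONTEXT_LEN,
) -> List:
    """Autoregressively generate one frame per player action.

    `model(frames, actions)` is a hypothetical next-frame predictor.
    """
    frames = deque(initial_frames, maxlen=context_len)    # oldest entries drop off
    actions = deque(initial_actions, maxlen=context_len)
    generated = []
    for action in player_actions:
        actions.append(action)                    # newest player input
        frame = model(list(frames), list(actions))
        frames.append(frame)                      # the prediction becomes context
        generated.append(frame)
    return generated


# Toy "model": the next frame is the last frame's id plus one.
print(rollout(lambda f, a: f[-1] + 1, list(range(100)), [0] * 100, [1, 1, 1]))
```

Because the model's outputs feed back into its own context, errors can compound over long sessions. That compounding is the failure mode noise augmentation during training is meant to counter.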

Results and Implications

The most striking finding is how closely the simulation matches the real game. In the researchers' evaluation, human raters asked to tell short clips of the simulation from clips of real gameplay did only slightly better than random chance. The system generates frames in real time, at over 20 frames per second on a single TPU, and the original game engine does not run at all.

This has potentially significant implications for game development and interactive entertainment. Game studios could use this technology to create vast, procedurally generated game worlds that respond dynamically to player actions. Work currently done by hand-written game engines could shift to AI models running on specialized hardware such as TPUs.

Beyond Gaming

While the research focuses on game simulation, the underlying approach has broader applications. Any system that involves sequential decision-making and visual output could potentially benefit from this combination of reinforcement learning and generative models. This could include virtual training environments, architectural visualization, or even scientific simulations where visual fidelity matters.

The work builds on previous research in autoregressive world models and real-time generative models. As these systems become more sophisticated, entirely new forms of interactive media may emerge: experiences that blend the responsiveness of games with the creative potential of generative AI.

The research represents a significant step toward AI systems that can not only understand and generate content but also interact with and respond to dynamic environments in real time. As the technology matures, it could fundamentally change how we think about interactive entertainment and simulation.
