NVIDIA engineers have released preliminary Linux kernel patches enabling Extended GPU Memory (EGM) virtualization for Grace Hopper and Blackwell architectures. This breakthrough allows GPUs in virtualized environments to access system memory across nodes, potentially revolutionizing high-performance computing workloads in cloud and cluster deployments.

NVIDIA has taken a significant step toward transforming virtualized high-performance computing with its newly proposed Linux kernel support for Extended GPU Memory (EGM) virtualization. Engineer Ankit Agrawal unveiled the RFC patch series targeting systems powered by NVIDIA's Grace Hopper and Blackwell architectures—platforms designed for massive AI and scientific workloads.

Breaking Memory Barriers

EGM shatters traditional GPU memory limitations by enabling accelerators to access system memory across complex infrastructures. As Agrawal explains:

"The GPU can utilize system memory located on the same socket, different sockets, or even different nodes in a multi-node system through the path: GPU ↔️ NVSwitch ↔️ GPU ↔️ CPU."

This capability is critical for memory-intensive workloads like large language model training and complex simulations, where datasets frequently exceed local GPU memory capacity.

Virtualization Architecture Unveiled

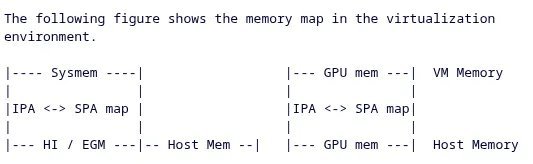

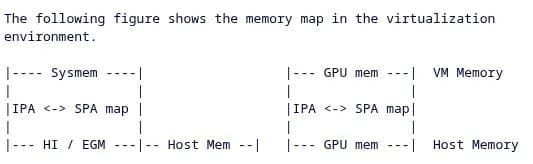

{{IMAGE:2}}

The virtualization implementation partitions host memory into two distinct regions:

- Hypervisor region: Reserved for host OS operations

- Hypervisor-Invisible (HI) region: Dedicated to virtual machines and EGM allocations

The HI segment—managed entirely by NVIDIA's stack—is excluded from the host's EFI map during boot. Its base address and size are exposed via ACPI DSDT properties, allowing guest VMs to recognize and leverage the expanded memory pool through a new nvgrace-egm VFIO driver.

Current Status and Future Roadmap

Tested on Grace Blackwell platforms running Linux kernel v6.17-rc4 and QEMU, the patches enable basic VM operations with NVIDIA drivers recognizing EGM capabilities. However, full CUDA workload support awaits companion "Nested Page Table" patches still in development. This dependency highlights the complexity of coordinating memory management across physical and virtualized layers.

Why This Matters for Tech Leaders

This development signals NVIDIA's strategic push toward virtualization-friendly infrastructure for AI factories and hyperscale environments:

- Cloud Providers: Enables memory-elastic GPU instances without physical hardware changes

- HPC Clusters: Facilitates memory sharing across nodes for distributed workloads

- AI Developers: Reduces manual sharding for massive datasets during training

As these patches mature, they could fundamentally shift how enterprises deploy GPU resources—treating pooled system memory as a seamless extension of accelerator capacity in virtualized and containerized environments. The open collaboration with the Linux kernel community also underscores NVIDIA's growing commitment to open infrastructure amidst increasing data center dominance.

Source: Phoronix (Michael Larabel, September 2025)

Comments

Please log in or register to join the discussion