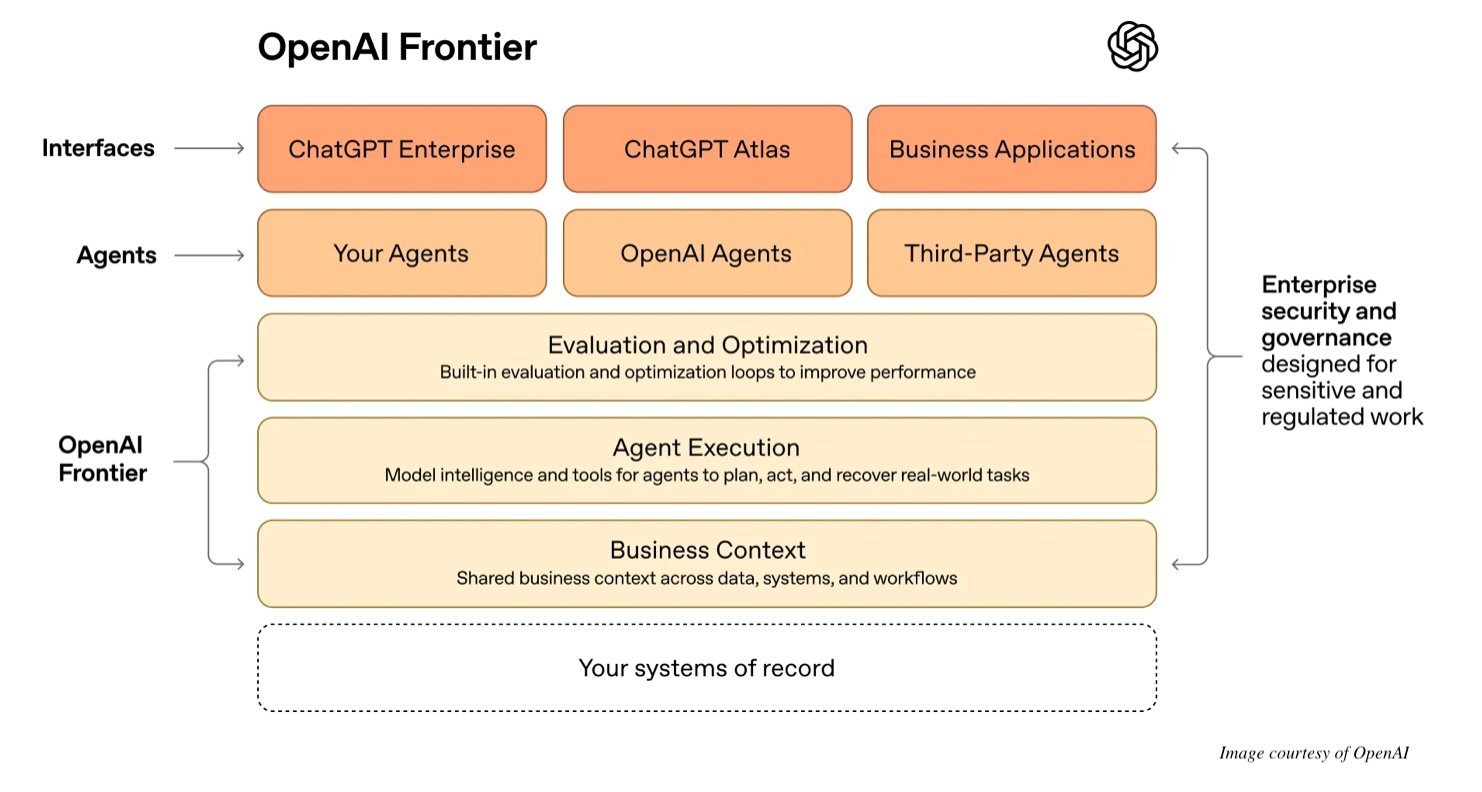

OpenAI launches Frontier, an enterprise platform enabling unified deployment of AI agents across business systems with built-in governance, addressing fragmentation risks through shared context and integration standards.

OpenAI has launched Frontier, a comprehensive platform designed to help enterprises build, deploy, and manage AI agents at scale. Unlike isolated agent deployments that create operational silos, Frontier establishes a unified framework where agents share business context through existing systems like CRMs and data warehouses. This architectural approach directly tackles fragmentation—a critical pain point where disconnected agents increase complexity instead of reducing it.

The Fragmentation Challenge

When AI agents operate independently, they lack awareness of broader organizational workflows. This limits their effectiveness and can lead to redundant or contradictory actions. Frontier addresses this through three core capabilities:

Shared Business Context: Agents integrate with existing tools via open standards, accessing real-time data from sources like Salesforce or Snowflake without migration. For example, an HR onboarding agent can reference CRM data to personalize new-hire documentation while simultaneously updating payroll systems.

Institutional Knowledge Onboarding: Agents ingest company-specific terminology, compliance rules, and historical decision patterns through customizable training pipelines. This transforms generic LLMs into domain-specialized collaborators.

Identity and Governance: Role-based permissions, audit logs, and boundary controls enforce regulatory compliance. A financial agent might be restricted to read-only market data access while requiring human approval for trade executions.
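To make the governance idea concrete, here is a minimal Python sketch of the financial-agent example: read-only market data is allowed outright, trade execution waits for human sign-off, and every decision lands in an audit log. This is not Frontier's API. The names `Policy`, `Governor`, `authorize`, the role and action strings, and the approver ID are all hypothetical, chosen only to illustrate how role-based permissions, approval gates, and audit logging might fit together.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass(frozen=True)
class Policy:
    """Hypothetical per-role policy: what is allowed and what needs sign-off."""
    allowed_actions: frozenset
    needs_approval: frozenset = frozenset()


@dataclass
class Governor:
    """Hypothetical gatekeeper that checks policies and records every decision."""
    policies: dict  # role name -> Policy
    audit_log: list = field(default_factory=list)

    def authorize(self, agent, role, action, approved_by=None):
        """Return True if the action may proceed; always append an audit entry."""
        policy = self.policies.get(role)
        if policy is None or action not in policy.allowed_actions:
            decision = "denied"
        elif action in policy.needs_approval and approved_by is None:
            decision = "pending_approval"
        else:
            decision = "allowed"
        self.audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "agent": agent,
            "role": role,
            "action": action,
            "approved_by": approved_by,
            "decision": decision,
        })
        return decision == "allowed"


# Assumed policy mirroring the article's example: market data is readable,
# trades are permitted only with a human approver on record.
governor = Governor(policies={
    "financial_agent": Policy(
        allowed_actions=frozenset({"read_market_data", "execute_trade"}),
        needs_approval=frozenset({"execute_trade"}),
    ),
})

governor.authorize("agent-7", "financial_agent", "read_market_data")
governor.authorize("agent-7", "financial_agent", "execute_trade")
governor.authorize("agent-7", "financial_agent", "execute_trade", approved_by="j.doe")
governor.authorize("agent-7", "financial_agent", "delete_ledger")
```

In this sketch the first and third calls return `True`, while the unapproved trade and the unlisted action return `False`. Logging denied and pending requests alongside allowed ones is a deliberate choice: an audit trail is most useful when it also shows what an agent tried and was not permitted to do.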

Integration Without Disruption

Frontier’s emphasis on interoperability is meant to avoid vendor lock-in through open standards. Enterprises retain existing agents and applications, whether custom-built or third-party, while layering Frontier’s orchestration on top. As OpenAI states: "You can bring your existing data and AI together where it lives." This contrasts with platforms requiring full ecosystem replacement.

Enterprise Adoption Concerns

Community reactions highlight valid considerations:

- Vendor Lock-in Fears: Critics like Hacker News user louiereederson note that binding workflow automation to an LLM vendor risks obsolescence given rapid model evolution. Frontier mitigates this through API-driven integrations but retains OpenAI as the control plane.

- Shift Toward Enterprise: Individual users express frustration as OpenAI prioritizes business use cases. However, Frontier’s architecture inherently serves complex organizations where cross-system coordination delivers maximum ROI.

Operational Support Model

OpenAI deploys Forward Deployed Engineers (FDEs) to assist with implementation. These teams design agent workflows while creating feedback loops between enterprise deployments and OpenAI Research—accelerating iterative improvements based on real-world usage.

Strategic Implications

By treating agents as "coworkers" rather than tools, Frontier shifts focus from task automation to systemic intelligence. Its success hinges on balancing flexibility with control: Can organizations maintain agent autonomy while ensuring governance? As LLM capabilities evolve, Frontier’s open integration layer provides adaptability—but enterprises must still evaluate long-term dependency risks.

Sergio De Simone is a software engineer with 25+ years of experience across mobile platforms and enterprise systems.

