The rise of autonomous AI agents introduces a fundamental shift in security posture management, moving beyond traditional cloud resource protection to address the unique risks of reasoning, decision-making systems. Microsoft Defender's new AI Security Posture Management capabilities provide multi-cloud visibility and contextual risk analysis for these complex agent ecosystems.

The autonomous agent revolution represents a pivotal moment in cloud security strategy. Unlike traditional applications or static cloud resources, AI agents actively reason, make decisions, invoke tools, and interact with other systems on behalf of users. This autonomy creates powerful business capabilities but fundamentally alters the security equation, introducing risks that traditional security tools weren't designed to address.

The Agent Security Challenge: Beyond Traditional Cloud Protection

Traditional cloud security posture management focuses on protecting resources like virtual machines, databases, and storage accounts. These resources are largely passive—they wait for commands and execute predefined functions. AI agents, by contrast, are active participants in the digital ecosystem. They can chain multiple tools, access sensitive data sources, and make autonomous decisions based on dynamic inputs.

This architectural difference creates several unique security challenges:

- Expanded Attack Surface: Each agent connects to models, platforms, tools, knowledge sources, and identity systems. Every connection point represents a potential vulnerability.

- Dynamic Behavior: Unlike static applications, agents adapt their behavior based on inputs, making traditional signature-based detection insufficient.

- Indirect Attack Vectors: Threats like indirect prompt injection, also called cross-domain prompt injection attacks (XPIA), exploit the agent's trust in external data sources rather than targeting the agent directly.

- Cascading Compromise: In multi-agent architectures, compromising a coordinator agent can provide control over multiple subordinate agents.

Microsoft Defender's approach to AI Security Posture Management (AI-SPM) addresses these challenges by providing comprehensive visibility across the entire agent stack—from the underlying models to the tools they invoke and the data they access.

Scenario Analysis: Understanding Agent-Specific Risks

Scenario 1: Data-Connected Agents and Sensitive Information Exposure

Many agents are designed to process organizational data, from customer transaction records to financial documents. While these agents deliver significant business value, they also create critical exposure points. The risk differs fundamentally from direct database access: data exfiltration through an agent can blend with normal agent activity, making detection exceptionally difficult.

Consider an agent connected to a blob container that holds customer PII. If an attacker reaches the agent through an internet-exposed API, they could extract sensitive information through the agent's normal operations. Traditional security monitoring might miss this activity because it looks like legitimate agent queries.

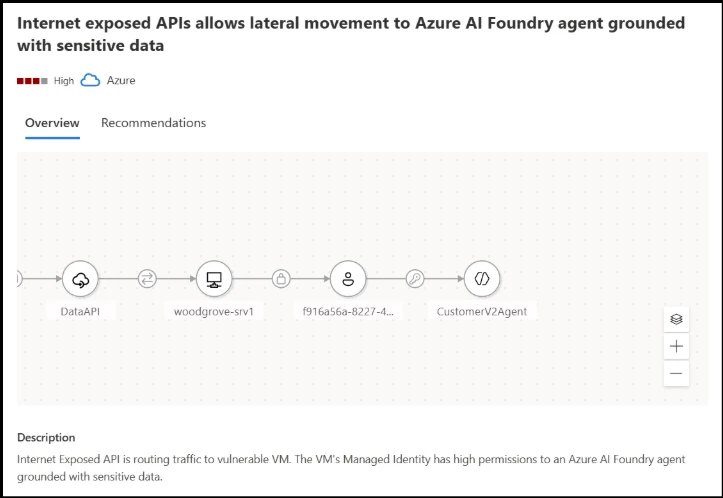

Microsoft Defender provides attack path analysis that visualizes these risks. For example, the platform can map how an attacker might leverage an internet-exposed API to access an AI agent grounded with sensitive data, showing the complete attack chain from the initial entry point to the sensitive data source.

Figure 1: Attack path analysis showing how an internet-exposed API could provide access to an AI agent connected to sensitive data, with remediation steps highlighted.
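To make the idea of an attack path concrete, the following is a minimal sketch of how such a path could be found in an asset graph. It is not how Defender computes attack paths; the graph, the asset names (`public-api`, `support-agent`, `customer-pii-container`), and the `find_attack_paths` function are all hypothetical and chosen to mirror the scenario above.

```python
from collections import deque

# Hypothetical asset graph: each key can reach the assets in its list.
EDGES = {
    "internet": ["public-api"],
    "public-api": ["support-agent"],
    "support-agent": ["customer-pii-container"],
    "internal-batch-job": ["customer-pii-container"],
}
# Hypothetical set of assets classified as sensitive.
SENSITIVE = {"customer-pii-container"}


def find_attack_paths(edges, entry, sensitive):
    """Return every cycle-free path from `entry` to a sensitive asset (BFS)."""
    paths = []
    queue = deque([[entry]])
    while queue:
        path = queue.popleft()
        node = path[-1]
        if node in sensitive:
            paths.append(path)
            continue
        for nxt in edges.get(node, []):
            if nxt not in path:  # skip nodes already on this path to avoid cycles
                queue.append(path + [nxt])
    return paths


paths = find_attack_paths(EDGES, "internet", SENSITIVE)
for p in paths:
    print(" -> ".join(p))
```

In this toy graph, only the chain that starts at the internet is reported; the internal batch job also reaches the container but is not reachable from the entry point, so it does not appear as an attack path.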

Scenario 2: Indirect Prompt Injection (XPIA) in Autonomous Agents

Indirect prompt injection represents one of the most sophisticated threats to AI agents. Unlike direct prompt injection, where attackers issue harmful instructions directly to the model, XPIA hides malicious commands in external data sources that agents process. The agent unknowingly ingests this crafted content and executes hidden commands because it trusts the source.

This attack vector is particularly dangerous for agents performing high-privilege operations. A single manipulated data source—such as a webpage fetched through a browser tool or an email being summarized—can silently influence agent behavior, resulting in unauthorized access, data exfiltration, or system compromise.

Microsoft Defender identifies agents susceptible to XPIA by analyzing their tool combinations and configurations. The platform assigns risk factors based on both the functionality of connected tools and the potential impact of misuse. For agents with both high autonomy and high XPIA risk, Defender recommends implementing human-in-the-loop controls to reduce potential impact.

{{IMAGE:3}}

Figure 2: Security recommendation generated for an agent with indirect prompt injection risk, highlighting the need for proper guardrails.

{{IMAGE:4}}

Figure 3: Recommendation for an agent with both high autonomy and high indirect prompt injection risk, suggesting human-in-the-loop controls.
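The tool-combination reasoning described in this scenario can be illustrated with a small sketch. The tool categories, the `Agent` class, and the finding labels below are assumptions made for illustration; they are not Defender's actual taxonomy or scoring logic. The idea is that an agent that both reads untrusted content and can take high-impact actions is a stronger XPIA candidate than one that does only one of the two.

```python
from dataclasses import dataclass, field

# Hypothetical tool categories; not Defender's actual taxonomy.
UNTRUSTED_INPUT_TOOLS = {"web_browser", "email_reader", "file_search"}
HIGH_IMPACT_TOOLS = {"send_email", "code_interpreter", "database_write"}


@dataclass
class Agent:
    name: str
    tools: set = field(default_factory=set)
    requires_approval: bool = False  # True when a human confirms actions


def assess(agent):
    """Return illustrative risk factors and recommendations for one agent."""
    findings = []
    reads_untrusted = bool(agent.tools & UNTRUSTED_INPUT_TOOLS)
    can_act = bool(agent.tools & HIGH_IMPACT_TOOLS)
    if reads_untrusted:
        findings.append("xpia-exposure")
    if reads_untrusted and can_act:
        findings.append("xpia-high-impact")
        if not agent.requires_approval:
            findings.append("recommend-human-in-the-loop")
    return findings


mail_agent = Agent("mail-assistant", {"email_reader", "send_email"})
print(assess(mail_agent))
```

Setting `requires_approval=True` on the same agent drops the human-in-the-loop recommendation while keeping the two risk factors, which reflects that the control reduces impact rather than removing the exposure.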

Scenario 3: Coordinator Agents in Multi-Agent Architectures

In complex multi-agent systems, not all agents carry equal risk. Coordinator agents—those responsible for managing and directing multiple sub-agents—act as command centers within the architecture. A compromise of a coordinator doesn't affect just a single workflow; it cascades to every sub-agent under its control.

These coordinators often have customer-facing interfaces, further amplifying their risk profile. Their broad authority makes them attractive targets, requiring dedicated security controls and comprehensive visibility.

Microsoft Defender accounts for agent roles within multi-agent architectures, providing specific visibility into coordinator agents. The platform uses attack path analysis to identify how agent-related risks combine into exploitable paths and to show each weak link in context. This visualization helps security teams take preventive action before breaches occur.

{{IMAGE:5}}

Figure 4: Attack path demonstrating how an internet-exposed API could provide access to a coordinator agent in Azure AI Foundry.
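The cascading effect of a coordinator compromise can be sketched as a reachability question over the delegation hierarchy. The delegation map and agent names below are hypothetical, and `blast_radius` is an illustrative helper rather than a Defender feature.

```python
# Hypothetical delegation map: each agent -> the sub-agents it directs.
DELEGATES = {
    "coordinator": ["billing-agent", "support-agent"],
    "support-agent": ["kb-search-agent"],
}


def blast_radius(delegates, compromised):
    """Return every agent reachable from `compromised` through delegation."""
    reached = set()
    stack = [compromised]
    while stack:
        agent = stack.pop()
        for sub in delegates.get(agent, []):
            if sub not in reached:
                reached.add(sub)
                stack.append(sub)
    reached.discard(compromised)  # the starting agent is not its own sub-agent
    return reached


print(sorted(blast_radius(DELEGATES, "coordinator")))
print(sorted(blast_radius(DELEGATES, "billing-agent")))
```

Compromising the coordinator reaches all three downstream agents, including the one it controls only indirectly, while compromising a leaf agent reaches none. That asymmetry is why coordinators warrant dedicated controls.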

Foundational Hardening: Reducing the Overall Attack Surface

Beyond addressing specific risk scenarios, Microsoft Defender provides broad hardening guidance designed to reduce the overall attack surface of any AI agent. This includes:

- Identity and Access Controls: Implementing least-privilege access for agents, ensuring they only have permissions necessary for their function.

- Network Segmentation: Isolating agent environments and controlling inbound/outbound communications.

- Data Governance: Ensuring agents only access appropriate data sources with proper encryption and access logging.

- Tool Validation: Verifying that tools invoked by agents are secure and properly authenticated.

- Monitoring and Logging: Implementing comprehensive audit trails for agent activities, particularly those involving sensitive data or privileged operations.

The new Risk Factors feature helps teams prioritize which weaknesses to mitigate first, ensuring the right issues receive the right level of attention. This prioritization is critical in complex environments where multiple agents may have overlapping vulnerabilities.
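As a rough illustration of risk-factor-based prioritization, the sketch below ranks findings by the combined weight of the risk factors attached to the affected agent. The weights, factor names, and findings are invented for the example; the article does not describe how Defender scores or orders its recommendations.

```python
# Hypothetical risk-factor weights; real products use their own scoring.
WEIGHTS = {
    "internet_exposed": 3,
    "sensitive_data": 3,
    "high_autonomy": 2,
    "coordinator": 2,
}

# Hypothetical findings, each tagged with the affected agent's risk factors.
findings = [
    {"agent": "faq-bot", "issue": "audit logging disabled", "risk_factors": []},
    {"agent": "finance-agent", "issue": "over-privileged identity",
     "risk_factors": ["sensitive_data", "internet_exposed"]},
    {"agent": "router", "issue": "missing guardrails",
     "risk_factors": ["coordinator", "high_autonomy", "internet_exposed"]},
]


def priority(finding):
    """Sum the weights of a finding's risk factors; unknown factors count as 1."""
    return sum(WEIGHTS.get(f, 1) for f in finding["risk_factors"])


# Highest combined weight first.
ranked = sorted(findings, key=priority, reverse=True)
for f in ranked:
    print(priority(f), f["agent"], "-", f["issue"])
```

A simple additive score is only one possible design. It keeps the ranking easy to explain, at the cost of ignoring interactions between factors.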

Multi-Cloud Strategy for AI Security Posture

Modern enterprises rarely operate in a single cloud environment. AI workloads are increasingly distributed across multiple platforms, each with its own security model and tooling. Microsoft Defender's AI Security Posture Management addresses this reality by providing unified visibility across:

- Microsoft Azure AI Foundry: Microsoft's integrated AI development platform

- Amazon Bedrock: AWS's managed service for foundation models

- Google Cloud Vertex AI: Google's unified AI platform

This multi-cloud approach ensures that critical vulnerabilities and potential attack paths are identified and mitigated consistently, regardless of where agents are deployed. Security teams get one consolidated view of agent security posture across their entire infrastructure.

Strategic Implications for Cloud Security Teams

The shift to autonomous agents requires a fundamental evolution in security strategy. Traditional approaches focused on protecting static resources must expand to include:

- Agent Lifecycle Security: From development through deployment and retirement

- Behavioral Monitoring: Detecting anomalous agent behavior rather than just signature-based threats

- Supply Chain Security: Ensuring the models, tools, and data sources agents connect to are secure

- Incident Response: Developing playbooks specifically for agent-related security incidents

Security teams should begin by establishing comprehensive visibility into all AI assets. This inventory should include not just the agents themselves, but also the models they use, the tools they invoke, the data they access, and the identities they operate under.

Next, teams should implement contextual risk analysis. Understanding what each agent can do and what it's connected to enables informed decisions about security controls. For example, an agent with access to sensitive financial data and internet connectivity requires different controls than an internal-only agent processing non-sensitive information.
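The example in the preceding paragraph can be expressed as a small decision function. The control names and the `required_controls` helper are illustrative assumptions, not a prescribed baseline; the point is only that the control set is derived from what the agent can reach and what can reach it.

```python
def required_controls(sensitive_data: bool, internet_reachable: bool) -> list[str]:
    """Map an agent's context to an illustrative, non-exhaustive control list."""
    # Baseline applied to every agent in this sketch.
    controls = ["least-privilege identity", "audit logging"]
    if sensitive_data:
        controls.append("encryption and data access logging")
    if internet_reachable:
        controls.append("restricted inbound and outbound network access")
    if sensitive_data and internet_reachable:
        controls.append("human approval for high-impact actions")
    return controls


# Internal-only agent processing non-sensitive information.
print(required_controls(sensitive_data=False, internet_reachable=False))
# Agent with sensitive financial data and internet connectivity.
print(required_controls(sensitive_data=True, internet_reachable=True))
```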

Finally, organizations should adopt a "security by design" approach for AI agents. Rather than bolting security onto existing agents, new agents should be designed with security controls from the ground up. This includes implementing proper guardrails, access controls, and monitoring from the initial design phase.

The Path Forward

The autonomous agent era is here, and it's transforming how businesses operate. The security challenges are significant, but they're not insurmountable. With comprehensive visibility, contextual risk analysis, and proper hardening controls, organizations can build and deploy AI agents securely.

Microsoft Defender's AI Security Posture Management provides the tools necessary for this transformation. By addressing the unique challenges of agent security, from data-connected agents to indirect prompt injection risks to coordinator agent vulnerabilities, Defender enables organizations to embrace the agent revolution while maintaining a robust security posture.

For organizations beginning their AI agent journey, the recommendation is clear: start with visibility, implement contextual risk analysis, and build security controls into your agent architecture from the beginning. The agents that will define the next decade of business operations should be powerful, efficient, and secure by design.

To learn more about Security for AI with Defender for Cloud, visit the Microsoft Defender for Cloud documentation and explore the AI Security Posture Management capabilities.
