A comprehensive benchmark of seven leading Google Search API providers reveals significant performance disparities, with SerpApi achieving both the lowest average response time and the lowest p99 latency. The tests, which sent the same search query more than 100 times to each provider, highlight how speed optimizations affect real-world developer workflows and application responsiveness.

The landscape of Google Search API providers has undergone significant churn in recent years, with new entrants displacing legacy players and driving overall performance improvements. This volatility makes independent performance validation crucial for developers building search-dependent applications. To provide clarity, a recent benchmark rigorously tested seven prominent services: SerpApi, Bright Data, DataForSEO, ScraperAPI, Scrapingdog, SearchApi.io, and Serper.dev.

Using the same search query, "google search api", and executing over 100 requests per provider (more where free tiers allowed), the tests focused on two critical metrics: average response time and p99 latency. The latter is particularly important: it is the threshold below which 99% of responses fall, which makes it a key indicator of worst-case performance and real-world reliability for user-facing applications.
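
The article does not say how its percentiles were computed. As a minimal sketch, assuming the common nearest-rank method, the two reported metrics can be derived from a list of per-request latencies in Python like this (the function names are illustrative, not taken from the benchmark):

```python
import math
from statistics import mean


def nearest_rank_percentile(samples: list[float], pct: float) -> float:
    """Return the pct-th percentile of samples using the nearest-rank method."""
    if not samples:
        raise ValueError("samples must be non-empty")
    if not 0 < pct <= 100:
        raise ValueError("pct must be in (0, 100]")
    ordered = sorted(samples)
    # Nearest rank: the smallest value such that at least pct% of samples are <= it.
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[rank - 1]


def summarize(latencies_s: list[float]) -> dict[str, float]:
    """Average and p99 latency (in seconds), the two metrics the benchmark reports."""
    return {
        "avg": mean(latencies_s),
        "p99": nearest_rank_percentile(latencies_s, 99),
    }
```

With about 100 samples, the nearest-rank p99 is the 99th-fastest request, so only the single slowest response lies above it. Two unusually slow requests out of 100 are therefore enough to set a provider's p99.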

The Speed Standings

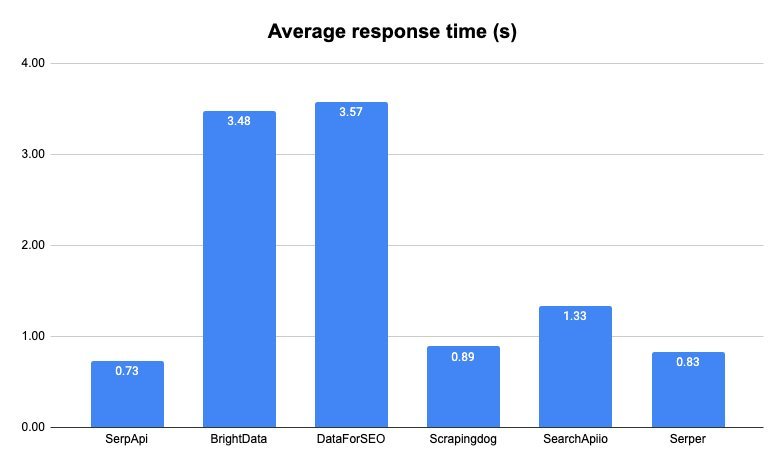

SerpApi posted the lowest average response time at 0.73 seconds, ahead of Serper.dev (0.83 seconds) and well ahead of the remaining providers. At the other end of the field, ScraperAPI averaged 14.84 seconds, roughly 20 times slower than SerpApi. However, average speed only tells part of the story.

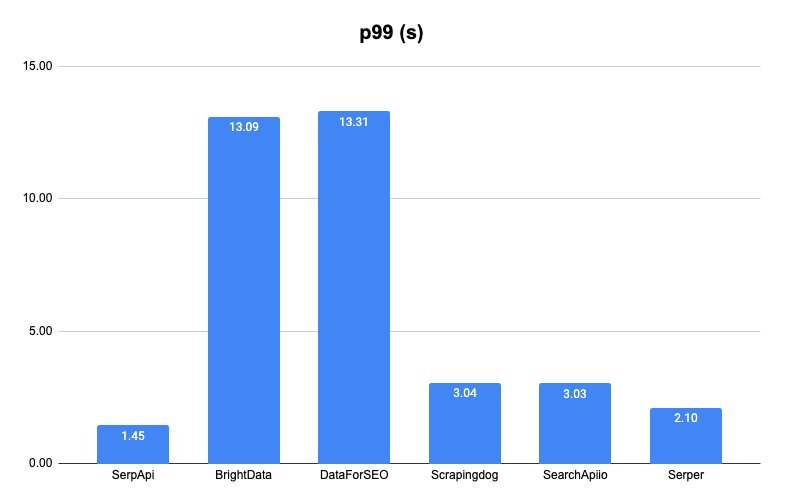

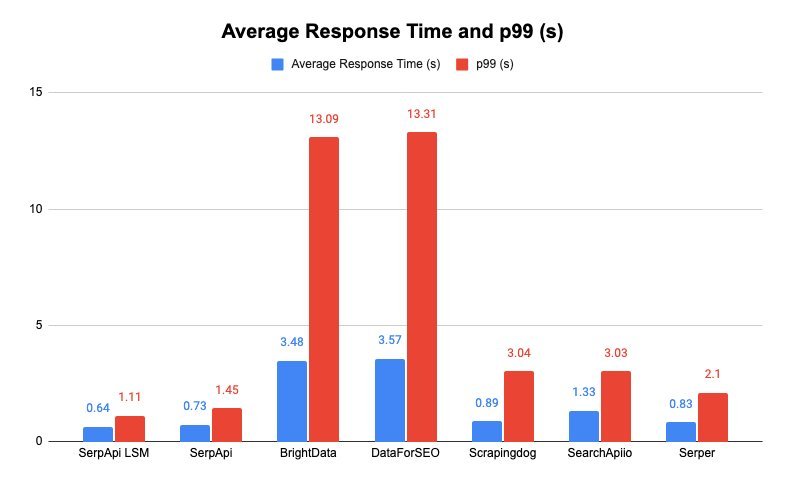

For p99 latency, where consistency matters most, SerpApi again led at 1.45 seconds. Serper.dev's p99 of 2.10 seconds was 44.8% higher (equivalently, SerpApi's was about 31% lower), while ScraperAPI (61.42 seconds) and Bright Data (13.09 seconds) recorded p99 values roughly 42 and 9 times SerpApi's. High p99 values can cripple applications that require predictable latency, making this metric critical for production systems.
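
Percentage comparisons depend on which value is treated as the baseline. A quick Python check using the p99 figures reported above shows both framings of the SerpApi and Serper.dev gap, plus the ratios for two of the slower providers (the helper names are illustrative):

```python
def pct_higher(slower: float, faster: float) -> float:
    """How much larger `slower` is, as a percentage of `faster`."""
    return (slower / faster - 1) * 100


def pct_lower(faster: float, slower: float) -> float:
    """How much smaller `faster` is, as a percentage of `slower`."""
    return (1 - faster / slower) * 100


serpapi_p99, serper_p99 = 1.45, 2.10  # seconds, as reported in the benchmark
print(f"Serper.dev p99 is {pct_higher(serper_p99, serpapi_p99):.1f}% higher")  # 44.8
print(f"SerpApi p99 is {pct_lower(serpapi_p99, serper_p99):.1f}% lower")  # 31.0

for name, p99 in [("Bright Data", 13.09), ("ScraperAPI", 61.42)]:
    # Ratio of each provider's p99 to SerpApi's p99
    print(f"{name} p99 is {p99 / serpapi_p99:.1f}x SerpApi's")  # 9.0, then 42.4
```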

Pushing the Envelope: Ludicrous Speed

SerpApi also showcased its premium "Ludicrous Speed Max" tier, which adds further optimizations. Enabling this option lowered its average response time to 0.64 seconds and its p99 to 1.11 seconds, compared with 0.73 and 1.45 seconds on the standard tier.

Methodology and Transparency

The benchmark author (a SerpApi employee) emphasized transparency, urging readers to validate results using free trials: "Benchmarks were run in a way you can run them entirely yourself to validate these numbers." This reproducibility is essential, given the inherent challenges in accurately measuring distributed API performance. Tests used each provider's fastest Google Search API option, with identical queries executed sequentially to minimize external variables.
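
The sequential method described above can be sketched as a small timing loop. This is a minimal Python sketch, not the author's published harness: `fetch` is a hypothetical stand-in for whatever client call sends the query to a given provider, and timing uses the standard library's `time.perf_counter`.

```python
import time
from typing import Callable


def time_sequential(fetch: Callable[[str], object], query: str, n: int) -> list[float]:
    """Run fetch(query) n times, one after another, returning seconds per call.

    Each request starts only after the previous one finishes, so requests never
    compete with each other for bandwidth or rate limits.
    """
    if n <= 0:
        raise ValueError("n must be positive")
    latencies = []
    for _ in range(n):
        start = time.perf_counter()
        fetch(query)  # hypothetical: replace with a real call to a provider's API
        latencies.append(time.perf_counter() - start)
    return latencies


if __name__ == "__main__":
    # Offline demo: a fake fetch that just sleeps, standing in for a real API call.
    def fake_fetch(query: str) -> str:
        time.sleep(0.01)
        return query

    samples = time_sequential(fake_fetch, "google search api", 5)
    print([round(s, 3) for s in samples])
```

The returned list can then be fed into any average or percentile calculation. Running the same loop from your own network is one way to act on the author's invitation to validate the numbers.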

Why Speed Isn't Just a Number

For developers, these latency figures translate directly to user experience and system efficiency. Applications relying on real-time search data – think competitive monitoring, dynamic pricing engines, or research tools – demand sub-second responses. High p99 times introduce unpredictable delays, potentially causing timeouts, poor UX, and increased infrastructure costs from retries. SerpApi's dominance in both average and p99 metrics suggests architectural efficiencies that minimize variability, a significant advantage for latency-sensitive workloads.
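
To make the timeout and retry concern concrete, here is a rough Python sketch with hypothetical helper names. It estimates how often a given client timeout would fire on observed latencies, and how a small per-request tail rate compounds when one task makes several calls. The compounding step assumes requests are independent, which real traffic may not be.

```python
def timeout_rate(latencies_s: list[float], timeout_s: float) -> float:
    """Fraction of observed requests that would have exceeded a client timeout."""
    if not latencies_s:
        raise ValueError("latencies_s must be non-empty")
    return sum(1 for t in latencies_s if t > timeout_s) / len(latencies_s)


def p_any_slow(per_call_rate: float, calls: int) -> float:
    """Chance that at least one of `calls` independent requests is slow."""
    return 1 - (1 - per_call_rate) ** calls


# If 1% of requests land in the slow tail, a task making 10 calls
# hits at least one slow response almost 10% of the time.
print(f"{p_any_slow(0.01, 10):.3f}")  # 0.096
```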

While raw speed is paramount for many use cases, developers should also consider factors like cost, result richness, anti-bot evasion success rates, and geographic coverage when choosing a provider. Nevertheless, this benchmark underscores that in the race for search API supremacy, milliseconds matter, and the gap between the leaders and the rest of the field is substantial.

Source: SerpApi Blog - Who Has the Fastest Google Search API?
