At the Chaos Communication Congress, Signal President Meredith Whittaker and VP Udbhav Tiwari presented a stark assessment of agentic AI systems, arguing their current implementation creates unacceptable vulnerabilities. They detailed how OS-level integration exposes sensitive data to malware, how probabilistic reasoning makes multi-step tasks unreliable, and how the industry's rush to deploy these systems ignores fundamental security trade-offs.

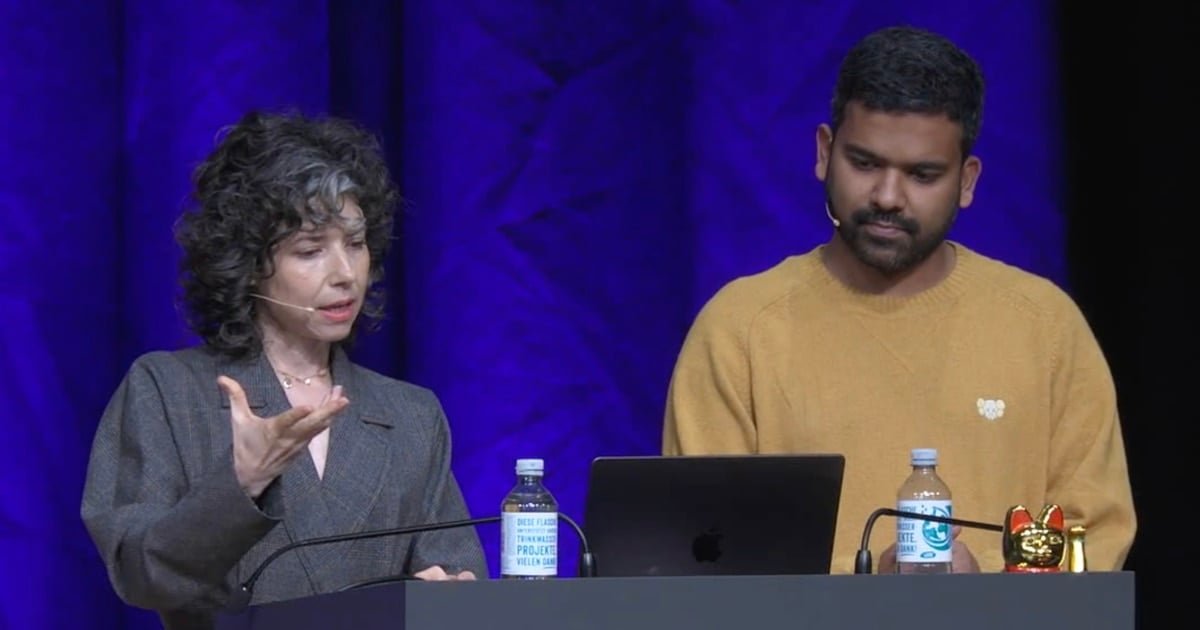

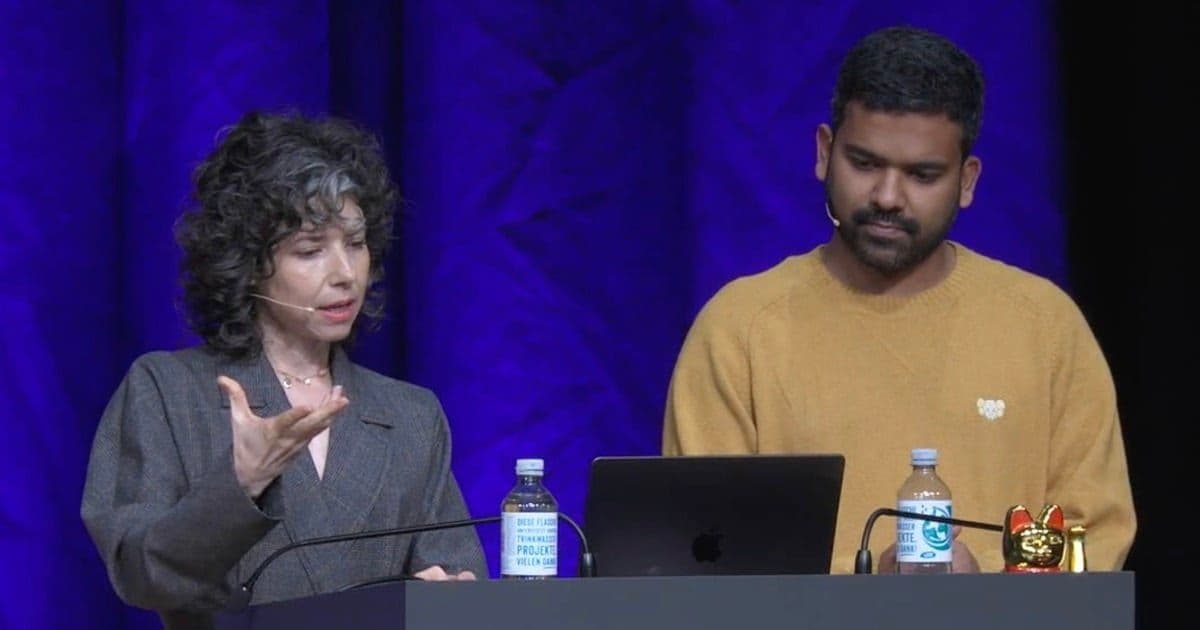

At the 39th Chaos Communication Congress in Hamburg, Signal President Meredith Whittaker and Vice President of Strategy and Global Affairs Udbhav Tiwari delivered a presentation that challenged the prevailing narrative around agentic AI. Their talk, titled "AI Agent, AI Spy," argued that the industry is building systems that are simultaneously insecure, unreliable, and incompatible with privacy expectations.

The core of their critique centers on how these AI agents are being integrated into operating systems and applications. Unlike traditional software, agentic AI requires deep access to user data and system functions to operate autonomously. This creates a fundamental tension: the more capable the agent needs to be, the more dangerous its access becomes if compromised.

The Database Problem

Microsoft's Recall feature for Windows 11 exemplifies the security vulnerability. Recall continuously captures screenshots, performs OCR on text, and conducts semantic analysis to build what amounts to a forensic database of every user action. It creates a timeline of applications used, text viewed, dwell times, and topics of interest.

Tiwari explained that this approach creates a single, high-value target for attackers. Malware can access this database directly, and indirect prompt injection attacks can manipulate the agent's behavior. This completely undermines end-to-end encryption protections. Signal has responded by adding flags to prevent screen recording, but Tiwari noted this is only a temporary workaround, not a solution.

The problem extends beyond individual applications. When agentic AI operates at the OS level, it has access to entire digital lives. A compromised agent becomes a master key to everything: communications, financial data, personal documents, and system controls.

The Reliability Math

Whittaker presented a mathematical argument about why agentic AI cannot currently be trusted with important tasks. These systems are probabilistic, meaning they make educated guesses rather than following deterministic rules. Each step in a multi-step task introduces error, and these errors compound.

She walked through the numbers: if an agent performs each step with 95% accuracy (which exceeds current capabilities), a 10-step task would succeed only 59.9% of the time. A 30-step task would drop to 21.4% success. Using more realistic accuracy rates makes the situation worse. At 90% per-step accuracy, that 30-step task succeeds only 4.2% of the time.

The reality is even more stark. Whittaker noted that the best current agent models fail about 70% of the time on complex tasks. This means users cannot rely on agents to complete anything important without human oversight, which defeats much of the automation promise.

The Consent Problem

Beyond technical issues, Whittaker and Tiwari highlighted that users are being opted into these systems without meaningful consent. The default is activation, and users must actively disable features they never explicitly requested. This pattern mirrors the privacy violations of earlier tech industry mistakes, where convenience was prioritized over user agency.

A Path Forward

The Signal leadership didn't propose abandoning AI agents entirely, but they called for immediate changes to how the industry deploys them:

Stop reckless deployment: Companies should halt the rush to integrate agentic AI at OS levels until security vulnerabilities are addressed. Plain-text database access that malware can exploit is unacceptable.

Default to opt-out: Users should not be automatically enrolled. Opt-in should be mandatory, with clear explanations of what data the agent will access and how it will be used.

Radical transparency: AI companies must provide granular, auditable explanations of how their systems work. This includes what data is collected, how it's processed, and how decisions are made. The current black-box approach prevents independent security analysis.

Why This Matters

Whittaker and Tiwari's warning carries weight because of their backgrounds. Whittaker previously worked at Google, where she led a team that exposed the company's controversial AI ethics practices before leaving to lead Signal. Tiwari brings experience from Mozilla and policy work on privacy and competition issues.

Their concern is that the industry is repeating past mistakes at a massive scale. The rush to deploy agentic AI is driven by competitive pressure and investor expectations, not by proven security or reliability. If high-profile failures occur—such as a compromised agent leaking sensitive corporate data or causing financial losses—public trust could collapse.

This would not only harm the AI industry but also set back legitimate uses of the technology. The presentation suggests that without fundamental changes to deployment practices and security architecture, agentic AI may be solving problems that don't exist while creating ones that do.

The complete presentation is available through the Chaos Communication Congress archives. For more on Signal's privacy-first approach, see their official documentation.

Comments

Please log in or register to join the discussion