The latest release of simdjson introduces advanced SIMD string escaping and batch integer formatting, accelerating Twitter JSON parsing by 30% and demonstrating significant untapped potential for CPU vectorization.

The simdjson project, renowned for leveraging CPU vector instructions to parse JSON at gigabytes-per-second speeds, has released version 4.3 with substantial performance improvements. This update proves that even after years of optimization, significant headroom remains for SIMD (Single Instruction, Multiple Data) acceleration in modern processors.

Core Technical Enhancements

At the heart of simdjson 4.3 are two critical optimizations:

- SIMD-Accelerated String Escaping: By processing multiple escape sequences concurrently using vector registers, the library dramatically reduces branch mispredictions during string handling.

- Batch Integer Formatting: Numeric values are converted to strings in parallel chunks rather than sequentially, minimizing pipeline stalls.

These techniques leverage:

- ARM64 NEON instructions for ARM-based systems

- SSE2 intrinsics for x86 architectures

- Runtime dispatching for LoongArch CPUs using LSX/LASX extensions

The architectural impact extends beyond raw throughput. Microsoft's Visual C++ contributions improved build times by 30%, accelerating developer iteration cycles.

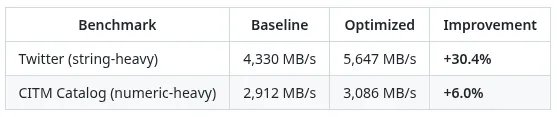

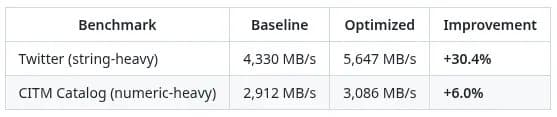

Benchmark Analysis

Performance gains vary based on JSON structure, demonstrating how data characteristics influence SIMD effectiveness:

| Benchmark Dataset | Profile | Improvement |

|---|---|---|

| Twitter API JSON | String-heavy (80%+ text) | 30% faster |

| CITM Catalog | Numeric-dominant | 6% faster |

The Twitter benchmark improvement is particularly significant because:

- String operations traditionally bottleneck parsing

- Escape sequence handling (e.g.,

\n,\uXXXX) is computationally expensive - SIMD parallelizes these operations across 128-bit (SSE) or 512-bit (AVX-512) registers

Twitter benchmark visualization showing reduced processing latency

Twitter benchmark visualization showing reduced processing latency

Hardware Implications

These optimizations reveal important hardware considerations:

- Core Utilization: Wider vector units (like AVX-512) show greater gains, but even SSE2 delivers substantial improvements

- Power Efficiency: SIMD reduces instructions-per-operation, potentially lowering energy consumption during heavy JSON workloads

- Memory Subsystem: Faster parsing decreases memory residency time, easing pressure on caches

Compatibility spans x86, ARM, and LoongArch architectures, with runtime detection ensuring optimal instruction selection. The library remains header-only C++ for easy integration.

Practical Applications

For developers and system architects:

- API Servers: Reduce JSON serialization/deserialization overhead

- Data Pipelines: Accelerate ETL processes handling JSON logs

- Embedded Systems: ARM NEON optimizations benefit IoT devices

The project provides comprehensive documentation and v4.3 release details. Independent benchmarks across CPU generations are available via Phoronix tests.

This release underscores that despite JSON's reputation as a 'slow' text format, aggressive vectorization can rival binary protocols. As datasets grow, such optimizations become critical infrastructure investments.

Comments

Please log in or register to join the discussion