Tidepool Heavy Industries argues that while LLMs enable natural language interfaces, their high latency necessitates hybrid approaches combining structured GUI elements with conditional logic to accelerate interactions and reduce cognitive friction.

Natural language interfaces are a major shift in human-computer interaction, but they carry performance tradeoffs that call for rethinking interface architecture. As Tidepool Heavy Industries argues, the latency of large language model inference, often tens of seconds per interaction, creates usability constraints that graphical user interfaces solved decades ago. The resulting design challenge is how to preserve the semantic flexibility of natural language while avoiding the time cost of conversational back-and-forth.

The gap is stark next to traditional computing operations. Modern interfaces respond to clicks within milliseconds, while LLM interactions take seconds to tens of seconds, a difference of at least a thousandfold in responsiveness. It hurts most in multi-turn dialogues, where the waits accumulate until they rival the time an entire workflow would take in conventional software. Streaming responses ease this friction but do not eliminate it, particularly when exploring a decision tree takes several rounds of refinement.

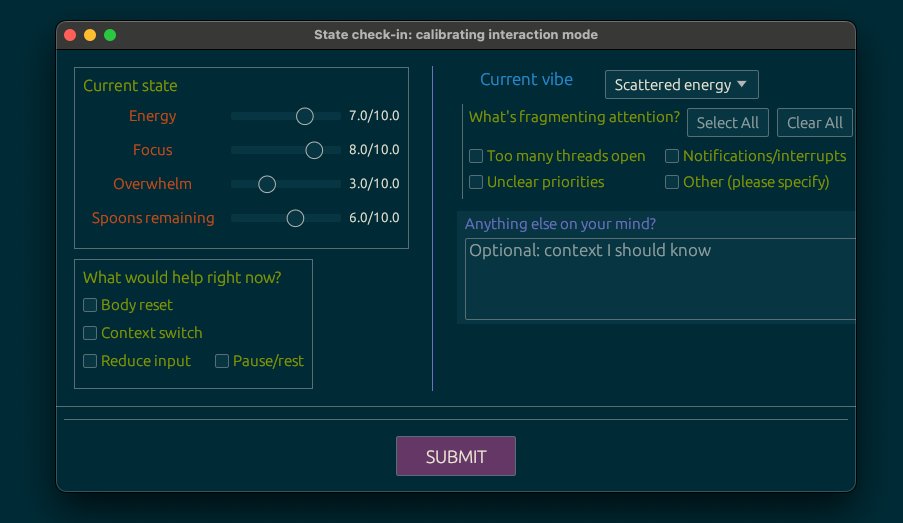

Tidepool's answer is popup-mcp, an open-source framework that lets LLMs generate context-aware GUI elements on demand. The Rust-based implementation goes beyond a conventional form builder in three ways:

- Dynamic Conditional Rendering: Elements appear or change state in response to real-time user input, driven by logical expressions (e.g., revealing additional questions when a slider exceeds a threshold)

- Anticipatory Interface Design: LLMs generate potential conversation branches upfront, exposing their internal reasoning about probable dialogue trajectories

- Universal Escape Hatches: Every selection field includes an 'Other' option that instantly reveals a text input—preserving natural language flexibility without round-trip latency
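As a rough illustration of the first and third capabilities, the sketch below models a form whose elements carry visibility predicates and whose choice fields automatically gain an 'Other' option. The `Element` class and the `with_other` and `visible` helpers are hypothetical names invented for this example; they are not popup-mcp's actual schema or API, and Python is used here only for brevity.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


# Hypothetical element model for illustration; not popup-mcp's real schema.
@dataclass
class Element:
    id: str
    label: str
    kind: str  # "slider", "choice", or "text"
    options: list = field(default_factory=list)
    # Predicate over the current answers; None means "always visible".
    when: Optional[Callable[[dict], bool]] = None


def with_other(element):
    """Add an 'Other' option and a text input that appears when it is chosen."""
    if element.kind != "choice":
        return [element]

    def other_chosen(answers):
        # The text box is shown only while its parent is visible.
        parent_visible = element.when is None or element.when(answers)
        return parent_visible and answers.get(element.id) == "Other"

    choice = Element(element.id, element.label, "choice",
                     element.options + ["Other"], element.when)
    free_text = Element(element.id + "_other", "Please specify", "text",
                        when=other_chosen)
    return [choice, free_text]


def visible(elements, answers):
    """Return the ids of elements to render for the current answers."""
    return [e.id for e in elements if e.when is None or e.when(answers)]


form = [
    Element("team_size", "Team size", "slider"),
    *with_other(Element(
        "bottleneck", "What slows the team down most?", "choice",
        ["CI", "Code review"],
        when=lambda a: a.get("team_size", 0) > 10,  # slider threshold
    )),
]

print(visible(form, {"team_size": 5}))
# ['team_size']
print(visible(form, {"team_size": 20, "bottleneck": "Other"}))
# ['team_size', 'bottleneck', 'bottleneck_other']
```

Because `visible` runs locally on every input change, revealing the follow-up question or the free-text box costs no model round trip; only the initial form generation does.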

This approach changes the latency economics. By replacing sequential conversational turns with a single form that users explore locally, the high cost of one LLM inference is amortized across many near-instant interactions. Selecting an option in a pre-rendered popup takes milliseconds rather than the seconds an equivalent natural language exchange would need, so the savings add up quickly, especially in specification-heavy workflows like technical configuration or creative worldbuilding.
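The amortization argument can be made concrete with a back-of-the-envelope model. The figures below (five questions, 10 seconds per inference, 50 milliseconds per click) are assumptions chosen for illustration, not measurements from popup-mcp, and the model ignores the time users spend reading and thinking, which is the same in both cases.

```python
def conversational_latency(questions: int, inference_s: float) -> float:
    """Each question is a separate turn, so each pays a full model round trip."""
    return questions * inference_s


def popup_latency(questions: int, inference_s: float, click_s: float) -> float:
    """One inference generates the whole form; each answer is then a local click."""
    return inference_s + questions * click_s


# Assumed numbers for illustration only.
QUESTIONS = 5
INFERENCE_S = 10.0
CLICK_S = 0.05

chat = conversational_latency(QUESTIONS, INFERENCE_S)
popup = popup_latency(QUESTIONS, INFERENCE_S, CLICK_S)
print(f"chat: {chat:.2f} s, popup: {popup:.2f} s")
# chat: 50.00 s, popup: 10.25 s
```

Under these assumptions the waiting time falls from 50 seconds to about 10, and the gap widens with every additional question. In practice, generating a larger form may lengthen the single inference somewhat, which this sketch does not account for.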

Conditional rendering also offers a useful window into what the model expects. Because the LLM must lay out hypothetical dialogue branches before the user has answered anything, the generated form shows where the model thinks the conversation might diverge. This often exposes flawed assumptions or knowledge gaps that would otherwise take several conversational turns to surface. Reading the form thus becomes a way to inspect the model's expectations, not just a way to answer its questions.
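One way to use this diagnostic view is to flatten a generated form into a list of its branches and read them before answering anything. The sketch below assumes a hypothetical nested-dictionary form spec with `when`, `label`, and `children` keys; this layout is invented for the example and is not popup-mcp's actual format.

```python
def list_branches(elements, path=()):
    """Yield (conditions, label) for every element that is conditionally revealed."""
    for element in elements:
        conditions = path
        if "when" in element:
            conditions = path + (element["when"],)
        if conditions:
            yield conditions, element["label"]
        # Children inherit every condition that gates their parent.
        yield from list_branches(element.get("children", []), conditions)


# Hypothetical form spec, as an LLM might generate it.
spec = [
    {"label": "Deployment target", "options": ["Cloud", "On-prem"], "children": [
        {"label": "Which cloud provider?", "when": "target == 'Cloud'"},
        {"label": "Air-gapped network?", "when": "target == 'On-prem'", "children": [
            {"label": "Update mechanism", "when": "air_gapped"},
        ]},
    ]},
]

for conditions, label in list_branches(spec):
    print(" and ".join(conditions), "->", label)
# target == 'Cloud' -> Which cloud provider?
# target == 'On-prem' -> Air-gapped network?
# target == 'On-prem' and air_gapped -> Update mechanism
```

A reviewer scanning this output can spot a missing branch (for example, no hybrid deployment option) before spending any conversational turns on it.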

Comparisons with Claude's AskUser tool highlight popup-mcp's distinctive value. While both implement structured interaction, Claude's terminal-based implementation lacks conditional rendering capabilities and operates as a closed system. Tidepool's vision extends further: an open, scriptable interface architecture where both LLMs and local code can manipulate UI elements, supported by pre/post-execution hooks for deeper workflow integration.

This hybrid approach doesn't eliminate natural language interaction; it repositions it as a fallback rather than the primary interface modality. The 'Other' fields preserve natural language's strengths for edge cases while the common path runs through structured affordances. Such architectures acknowledge that interface design must respect the limits of human attention: delays of more than about a second tend to break a user's train of thought, so a multi-second wait on every turn disrupts cognitive flow.

The implications extend beyond current implementations. As computing moves toward agentic systems that require complex human oversight, structured interfaces with conditional logic could become essential scaffolding for manageable delegation. The pattern is not tied to one platform: native desktop popups, terminal interfaces, and web components could all implement this latency-reduction strategy. What begins as a solution to LLM delays might ultimately reshape how we design complex decision-support systems more broadly.

Critically, this approach counters the anthropomorphic temptation to model all interactions on human conversation. Just as spreadsheets didn't replicate accountants' verbal exchanges but invented superior tabular abstraction, we must develop interaction paradigms optimized for computational realities rather than biological precedents. The most humane interfaces might ultimately be those that respect our time more than our speech patterns—a design philosophy whose time has decisively arrived.
