The Trump administration's new AI policy directive mandates that federal AI systems reflect 'historical accuracy' while banning 'woke' content, raising constitutional concerns about government interference in model training. As major AI firms pursue lucrative government contracts, critics warn this could compromise algorithmic neutrality and set dangerous precedents for ideological control over foundational AI models.

The Thin Line Between Guardrails and Censorship in AI Policy

At a 2022 Google AI event focused on responsible development, a troubling duality emerged: The same techniques used to reduce algorithmic bias could be weaponized to enforce ideological conformity. This concern materialized dramatically last week when the Trump administration unveiled its AI Action Plan, which directs federal agencies to procure only AI systems that "objectively reflect truth" while prohibiting models influenced by "social engineering agendas"—specifically targeting DEI initiatives and climate science.

Decoding the 'Anti-Woke' AI Mandate

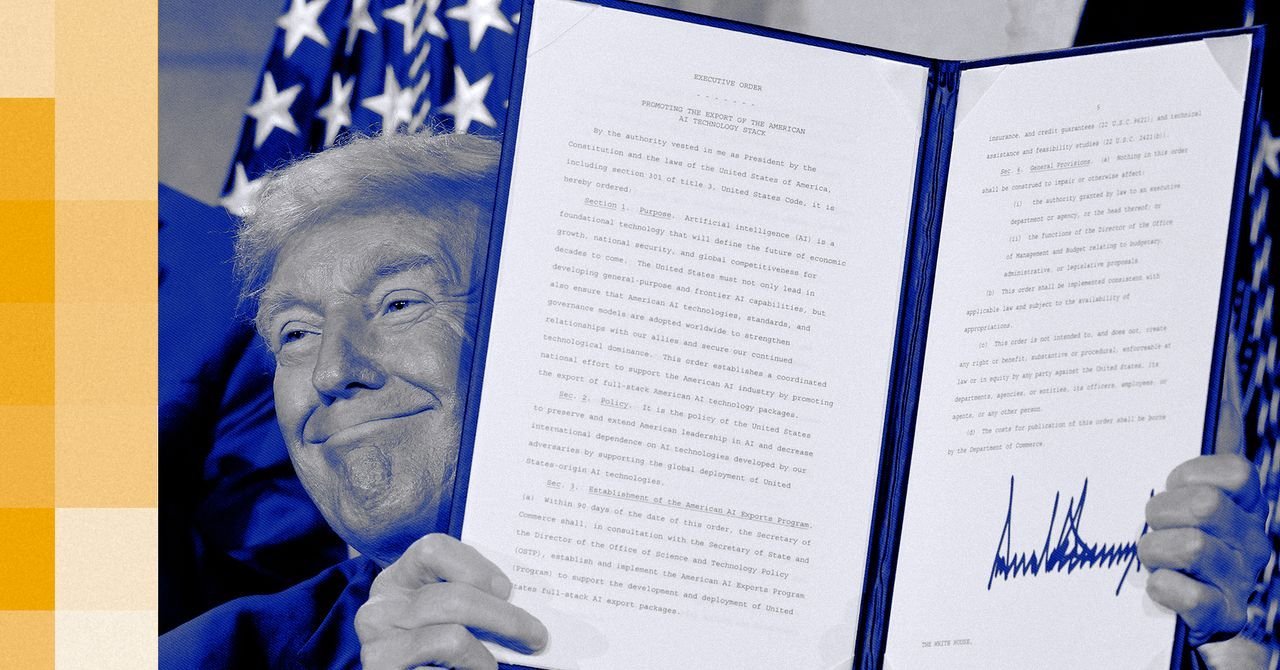

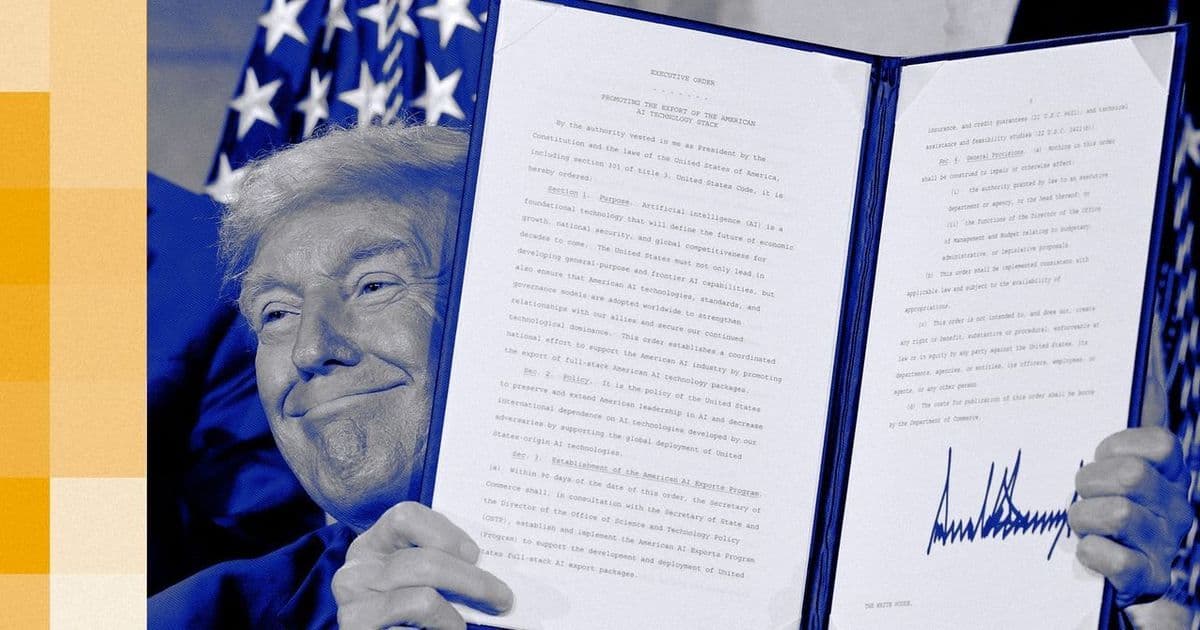

The 28-page policy framework contains seemingly benign language about preserving free speech, but its implementation guidelines reveal a pointed agenda. Commerce Department officials are instructed to eliminate references to "misinformation, Diversity, Equity, and Inclusion, and climate change" in AI governance rules. The accompanying executive order—"Preventing Woke AI in the Federal Government"—explicitly condemns AI that acknowledges systemic racism or gender inequality.

"The American people do not want woke Marxist lunacy in the AI models," Trump declared during the plan's unveiling, signing the order that bars federal contracts for models deemed ideologically non-compliant.

This creates a dangerous paradox: While the policy claims to protect free expression, it penalizes private companies for constitutionally protected speech decisions about model behavior, such as whether to highlight racial bias or climate impacts.

Constitutional Fault Lines and Industry Silence

Legally, the mandate treads precarious ground. As Steven Levy argues in Wired, the First Amendment should protect AI firms' right to design models that reflect the scientific consensus on climate change or address systemic bias. Yet major players like OpenAI, Google, and Anthropic have remained conspicuously silent despite the policy's implications.

An OpenAI engineer privately acknowledged the technical feasibility of "anti-woke" adjustments, but emphasized: "This isn't a technical dispute—it's a constitutional one." The industry's reluctance to challenge the order appears tied to the plan's broader perks: streamlined data center approvals, research funding, and restrictions on state-level AI regulation.

The China Parallel and Information Integrity

Ironically, the policy singles out China for manipulating AI to align with Communist Party narratives while potentially replicating the same dynamic at home. Senator Edward Markey warns:

"Republicans want to use the power of government to make ChatGPT sound like Fox & Friends."

With AI systems becoming primary conduits of information, government-defined "truth" could reshape how people perceive reality. The Commerce Department must now audit Chinese models for political alignment, a precedent that might soon apply to U.S. systems.

Sovereignty at the Prompt

This confrontation exposes AI's vulnerability to political capture. Models trained on humanity's knowledge now risk being filtered through partisan lenses. As federal procurement becomes enforcement leverage, the independence of our digital knowledge infrastructure hangs in the balance, threatened not by crude censorship but by calibrated reinforcement of approved narratives. The algorithms shaping our understanding of history, science, and society may soon answer to a new authority: state-sanctioned truth.
