The shift from hand-coding to AI-assisted development isn't just another tool update—it's a fundamental change in how we think about software creation. While previous paradigm shifts took years to mature, LLM tooling has gone from experimental to production-ready in months, forcing a reevaluation of what 'writing code' even means.

The Speed of Change

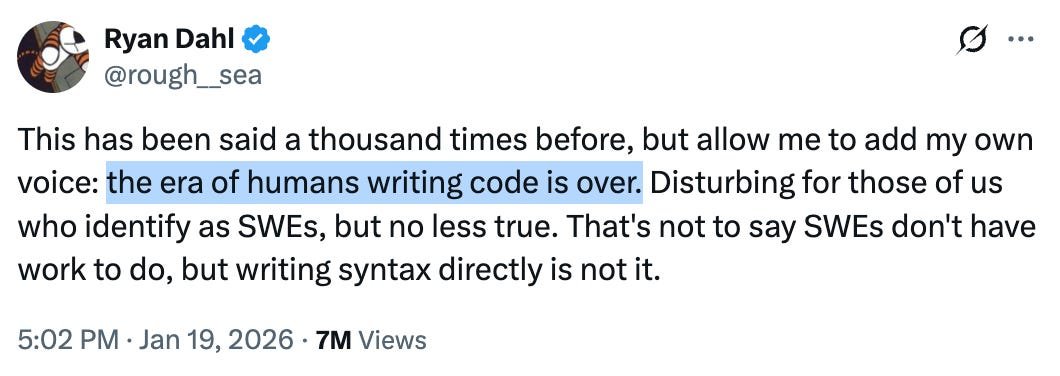

The claim that "writing code by hand is almost dead" feels dramatic because a shift of this size should take years to materialize. Historically, major transitions in computing followed a predictable pattern: the move from assembly to high-level languages took a decade, monoliths to microservices spanned years, and even the move from physical servers to cloud infrastructure unfolded gradually. These were multi-year journeys with clear migration paths, tooling ecosystems, and established best practices.

LLM tooling has compressed this timeline to months. In 2023, AI code assistants were novelties—useful for boilerplate but unreliable for complex logic. By mid-2024, they could handle substantial portions of typical application code. Today, they're not just generating snippets but architecting entire systems, debugging their own output, and iterating toward working solutions.

This acceleration creates a cognitive dissonance. We're experiencing the velocity of a paradigm shift without the accompanying maturity of tooling, standards, or even shared vocabulary. The "why" and "how" of AI-assisted development are still being figured out, even as the "what" becomes increasingly capable.

Staff+ Engineers and VPs: The Unexpected Power Users

Microsoft's internal AI usage dashboard reveals a surprising pattern: senior engineers and executives are adopting AI tools at higher rates than their junior counterparts. This contradicts the common assumption that AI would primarily replace entry-level coding tasks.

Several factors explain this trend:

1. Context Over Syntax: Senior engineers spend more time on architecture, system design, and cross-team coordination than writing code. AI tools excel at translating high-level requirements into implementation details, which is exactly the gap between strategic thinking and tactical execution that senior folks navigate daily.

2. Exploration and Prototyping: Staff+ engineers often need to quickly validate architectural approaches or explore multiple solutions. AI can generate working prototypes in minutes, allowing for rapid iteration before committing to a direction. This is far more efficient than building proof-of-concepts manually.

3. Documentation and Communication: The same AI that writes code can generate design documents, API specifications, and technical explanations. For VPs and directors who need to communicate complex technical decisions to non-technical stakeholders, this is invaluable.

4. Code Review at Scale: Senior engineers review more code than they write. AI tools can pre-screen pull requests, flag potential issues, and even suggest improvements, allowing humans to focus on architectural concerns rather than syntax errors.

The pattern suggests that AI isn't replacing junior developers—it's augmenting senior engineers' ability to execute at scale. The junior developer's role may shift from writing code to curating, reviewing, and integrating AI-generated solutions.

The 20-Minute SaaS Replacement

The author replaced a $120/year micro-SaaS with LLM-generated code in 20 minutes. The SaaS in question hadn't added features in four years and had a broken billing system for three years—a classic example of "write once, don't update later" software that still generates revenue through inertia.

Here's how the replacement worked:

1. Feature Extraction: The LLM was prompted with: "Analyze this SaaS and list all its features." The model identified core functionality: user authentication, data storage, basic reporting, and export capabilities.

2. Architecture Design: Using the feature list, the LLM designed a modern stack: React frontend, Node.js backend, PostgreSQL database, and AWS deployment. It generated the complete project structure, including Dockerfiles and CI/CD configurations.

3. Implementation: The LLM wrote the code in four iterative passes.

- First pass: Core data models and API endpoints

- Second pass: Frontend components and state management

- Third pass: Authentication and authorization

- Fourth pass: Deployment scripts and monitoring

4. Testing and Refinement: The author used the LLM to generate test cases, identify edge cases, and fix bugs. The entire process took 20 minutes of human time, though the LLM's processing time was longer.
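The four implementation passes above can be sketched as a simple loop in which each pass sees the output of the ones before it. This is a minimal illustration, not the author's actual workflow: `run_passes`, `fake_generate`, and the prompt wording are hypothetical, and `fake_generate` stands in for whatever model call you would really use.

```python
from typing import Callable, List

# Pass goals taken from the list above; the prompt format is an assumption.
PASSES = [
    "Core data models and API endpoints",
    "Frontend components and state management",
    "Authentication and authorization",
    "Deployment scripts and monitoring",
]


def run_passes(features: List[str], generate: Callable[[str], str]) -> List[str]:
    """Run each pass in order, feeding earlier output back in as context."""
    context = "Features: " + ", ".join(features)
    outputs = []
    for number, goal in enumerate(PASSES, start=1):
        prompt = f"{context}\n\nPass {number}: {goal}"
        result = generate(prompt)
        outputs.append(result)
        # Later passes see what earlier passes produced.
        context += f"\n\n[pass {number} output]\n{result}"
    return outputs


def fake_generate(prompt: str) -> str:
    """Hypothetical stand-in for a model call: echoes the prompt's last line."""
    return "generated: " + prompt.splitlines()[-1]


if __name__ == "__main__":
    for output in run_passes(["user authentication", "data storage"], fake_generate):
        print(output)
```

Passing the generator in as a plain callable keeps the loop independent of any particular vendor SDK, and makes it easy to test with a stub like the one here.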

The Implications

This experiment highlights several critical points:

SaaS Vulnerability: Many micro-SaaS products survive on inertia rather than innovation. If a single developer can replicate a product's core functionality in an afternoon, the business model becomes fragile.

The "Good Enough" Threshold: The replacement wasn't perfect. It lacked polish and handled fewer edge cases than the original. But it was functional. For many use cases, "good enough" is sufficient, especially when the alternative is paying for stagnant software.

Maintenance Burden Shift: The original SaaS had a maintenance burden (bug fixes, security updates, scaling). The LLM-generated code shifts this burden to the developer, but with modern tooling (automated testing, cloud services), the ongoing cost may be lower.
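Whether the ongoing cost really is lower comes down to simple arithmetic. The sketch below compares the $120/year subscription against the cost of your own upkeep time. The hourly rate, maintenance hours, and hosting cost in the example are illustrative assumptions, not figures from the experiment, and both function names are hypothetical.

```python
def break_even_hours(subscription_per_year: float, hourly_rate: float) -> float:
    """Hours of upkeep per year at which self-maintenance costs as much as the subscription."""
    if hourly_rate <= 0:
        raise ValueError("hourly_rate must be positive")
    return subscription_per_year / hourly_rate


def yearly_saving(
    subscription_per_year: float,
    hourly_rate: float,
    maintenance_hours: float,
    hosting_per_year: float = 0.0,
) -> float:
    """Positive result: the replacement is cheaper; negative: the subscription is."""
    own_cost = hourly_rate * maintenance_hours + hosting_per_year
    return subscription_per_year - own_cost


if __name__ == "__main__":
    # Assumed numbers: $60/hour of developer time, 1 hour of upkeep, $24 hosting.
    print(break_even_hours(120, 60))      # 2.0
    print(yearly_saving(120, 60, 1, 24))  # 36.0
```

At an assumed $60 per hour, the replacement only pays off if it needs less than two hours of attention a year, which is a useful check before cancelling a cheap subscription.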

Industry Pulse

Claude Code's IDE Evolution: Anthropic's Claude Code has added a diff view feature, making it more like a traditional IDE. Users can now see suggested changes side-by-side with existing code, accept or reject individual modifications, and iterate more precisely. This addresses a key limitation of AI coding assistants: the "black box" problem where users couldn't understand or control the changes being made.

ChatGPT's Ad Experiment: OpenAI is testing ads in ChatGPT, while Google's Gemini remains ad-free. This reflects different business models: OpenAI needs to monetize its massive user base, while Google can afford to keep Gemini ad-free as part of its broader ecosystem strategy. For developers, this means evaluating tools based not just on capability but on business model sustainability.

Anthropic's Interview Process: Anthropic uses take-home exercises for technical interviews, but with a twist: candidates are expected to use AI tools during the exercise. This acknowledges the reality that developers will use AI in their daily work and tests candidates' ability to effectively collaborate with these tools rather than pretending they don't exist.

Cloudflare Acquires Astro: Cloudflare's acquisition of the Astro framework signals a strategic move toward edge computing and modern web development. Astro's island architecture and focus on performance align with Cloudflare's edge network capabilities. For developers, this means Astro's future is now tied to Cloudflare's platform, which could affect adoption decisions.

The Broader Pattern

What we're witnessing isn't just a new tool category—it's a fundamental rethinking of the software development lifecycle. The traditional model:

1. Requirements → 2. Design → 3. Implementation → 4. Testing → 5. Deployment

is being replaced by:

1. Intent → 2. AI Generation → 3. Human Curation → 4. Validation → 5. Deployment

The human role shifts from creator to curator, from writing every line to guiding the process, from implementation to quality assurance and architectural oversight.

This transition creates both opportunities and challenges:

Opportunities

- Faster iteration cycles

- Lower barrier to entry for prototyping

- Ability to explore more solutions

- Reduced time on boilerplate

Challenges

- New skills required (prompt engineering, AI debugging)

- Quality control at scale

- Understanding AI-generated code

- Maintaining architectural coherence

Practical Takeaways

For teams adopting AI-assisted development:

Start with Code Review: Use AI to review pull requests before having it write code. This builds trust and understanding.

Establish Guidelines: Create team standards for AI usage—when to use it, how to review AI-generated code, and what to avoid.

Invest in Testing: AI can generate code faster than humans can review it. Comprehensive automated testing becomes essential.

Focus on Architecture: As implementation becomes easier, architectural decisions become more critical. Senior engineers should double down on system design.

Embrace the Shift: Fighting the trend is futile. The teams that learn to work effectively with AI will outperform those that resist.
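As one way to act on the "Invest in Testing" point above, generated code can be treated as untrusted until tests pin down its behavior. The sketch below is illustrative only: `export_rows` is a hypothetical stand-in for an AI-generated export helper, and the tests are written as plain assert functions that a runner such as pytest would collect.

```python
import csv
import io
from typing import Dict, List


def export_rows(rows: List[Dict[str, str]]) -> str:
    """Hypothetical generated helper: serialize a list of dicts to CSV text."""
    if not rows:
        return ""
    buffer = io.StringIO()
    writer = csv.DictWriter(
        buffer, fieldnames=list(rows[0].keys()), lineterminator="\n"
    )
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


def test_empty_input_yields_empty_string():
    assert export_rows([]) == ""


def test_header_and_rows_are_written_in_order():
    assert export_rows([{"id": "1", "name": "a"}]) == "id,name\n1,a\n"


def test_commas_and_quotes_survive_a_round_trip():
    # Edge case that naive string joining gets wrong.
    rows = [{"name": 'Acme, "Inc."', "total": "10"}]
    parsed = list(csv.DictReader(io.StringIO(export_rows(rows))))
    assert parsed == rows
```

The round-trip test is the valuable one: it does not care how the function is implemented, so it keeps holding even if the code is regenerated from scratch.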

The claim that "writing code by hand is almost dead" may be dramatic, but it captures a real shift. The question isn't whether this change is coming—it's already here. The real question is how we adapt our skills, processes, and mindset to thrive in this new reality.

