Modern servers rival early supercomputers, packing up to 128 cores and 8TB of RAM at a fraction of historical costs. This analysis challenges cloud-native dogma, showing how vertical scaling on a single robust server, paired with strategic backups, often beats distributed systems on cost, simplicity, and efficiency for most workloads. It also explains why the 'cloud premium' and the complexity that comes with it are rarely justified for applications under 10k QPS.

The Unspoken Power of One Big Server: Rethinking Scalability in the Cloud Era

Amidst endless debates over microservices versus monoliths, a fundamental question lingers: are distributed systems worth their overhead for most applications? The answer, increasingly, is no. Modern hardware has evolved so dramatically that a single server now offers capabilities once reserved for entire data centers—making vertical scaling not just viable, but often superior.

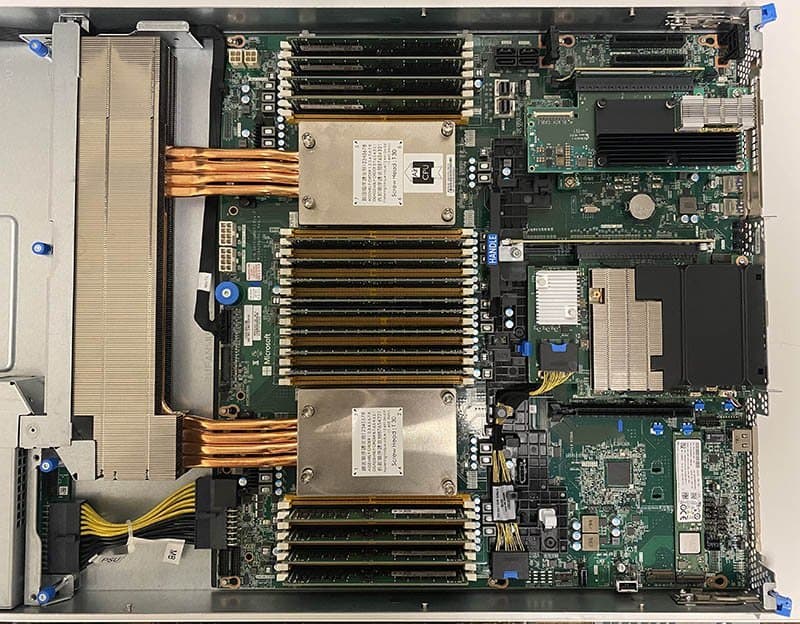

The Azure server pictured above, with 128 cores, 256 threads, and up to 8TB of RAM, exemplifies today's powerhouse hardware. (Source: ServeTheHome)

Meet the Titan: What One Server Can Do

Today's servers, such as those built on AMD's third-generation EPYC CPUs, redefine performance boundaries:

- Compute: 128 cores delivering about 4 TFLOPS, comparable to a TOP500 supercomputer in 2000.

- Memory: Up to 8TB of RAM with about 200 GB/s of memory bandwidth per socket.

- I/O: 128 PCIe lanes supporting 30+ NVMe SSDs and 100Gbps networking.

Benchmarks reveal staggering real-world potential:

- 500k requests/sec via nginx

- 1M+ IOPS on NoSQL databases

- Linux kernel compiled in 20 seconds

- 4K video rendered at 75 FPS

This isn't theoretical. For under $1,400/month (e.g., at Hetzner), you can rent a 32-core server with 128GB of RAM. Even buying a 128-core machine outright (~$40k) breaks even against cloud alternatives within months.
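The break-even claim is simple arithmetic, and a rough sketch makes it easy to test against your own numbers. The example below uses the ~$40k purchase price from this paragraph and the ~$6,055/month m6a.metal price quoted later in this article. The `hosting_monthly` parameter and its $1,000 value are assumptions added for illustration, since the article gives no figure for colocation or power, and the two machines compared are not identical in core count.

```python
def break_even_months(purchase_price, cloud_monthly, hosting_monthly=0.0):
    """Return months until owning is cheaper than renting, or None if never.

    hosting_monthly is a hypothetical parameter covering colocation, power,
    and bandwidth; the article does not quote a figure for it.
    """
    monthly_saving = cloud_monthly - hosting_monthly
    if monthly_saving <= 0:
        return None  # owning never pays off under these inputs
    return purchase_price / monthly_saving


# Figures quoted in this article: ~$40k purchase, ~$6,055/month cloud rental.
no_overhead = break_even_months(40_000, 6_055)

# Assumed (not from the article): $1,000/month for colocation and power.
with_colo = break_even_months(40_000, 6_055, hosting_monthly=1_000)

print(f"Break-even, no hosting cost: {no_overhead:.1f} months")
print(f"Break-even, assumed colo cost: {with_colo:.1f} months")
```

Under these inputs the purchase pays for itself in roughly 6.6 months with no hosting cost and roughly 7.9 months with the assumed colocation fee. Staff time and hardware replacement are left out, so treat the result as a lower bound.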

The Cloud Premium: When Does It Make Sense?

Cloud providers push distributed architectures, but costs reveal a stark reality. Renting AWS’s m6a.metal (96 cores, 768GB RAM) costs ~$6,055/month—over 4x OVHCloud’s similar offering. Serverless markup is worse: Lambda runs 5-25x pricier than reserved instances for sustained workloads.

"Cloud salespeople push microservices and serverless because it benefits them—not necessarily you. The complexity tax is real," the source article notes. Bursty workloads (e.g., sporadic simulations) do justify cloud elasticity, because you pay only for the peaks. For steady traffic, vertical scaling wins.

Debunking Myths: Availability and Workload Realities

Objection: "One server risks downtime!"

Solution: Run primary/backup pairs in separate data centers. A 2x2 setup (two servers per DC) mitigates most failures. Correlated hardware flaws? Diversify server models—Backblaze-style.
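To see why a few redundant servers go a long way, here is a minimal sketch of the usual availability arithmetic. It assumes each server is up 99% of the time, which is a hypothetical figure and not one from the article, and it assumes failures are independent. That second assumption is optimistic: servers in the same data center or of the same model can fail together, which is exactly why the advice above is to use separate data centers and mixed hardware.

```python
def combined_availability(per_server, replicas):
    """Probability that at least one of `replicas` servers is up,
    assuming failures are independent (an optimistic simplification)."""
    return 1.0 - (1.0 - per_server) ** replicas


def downtime_minutes_per_year(availability):
    """Expected minutes of downtime in a 365-day year."""
    return (1.0 - availability) * 365 * 24 * 60


ASSUMED_AVAILABILITY = 0.99  # hypothetical per-server figure, for illustration

setups = [("single server", 1), ("primary/backup pair", 2), ("2x2 setup", 4)]
for label, n in setups:
    a = combined_availability(ASSUMED_AVAILABILITY, n)
    print(f"{label}: {a:.8f} available, "
          f"~{downtime_minutes_per_year(a):.2f} min/year down")
```

The model ignores failover time and the chance that the failover mechanism itself breaks, so real-world numbers will be worse than what it prints.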

Objection: "Cloud-native speeds up development!"

True initially—but technical debt compounds. As one engineer quips: "Cloud Ops replace sysadmins but cost 5x more." Simpler architectures reduce long-term overhead.

The Strategic Shift: Less Complexity, More Value

Distributed systems add coordination overhead that grows with the number of nodes. One server eliminates that tax. Even microservices can run in containers on a single machine, but ask whether the complexity pays off. For CDNs or global low-latency needs, third-party services beat in-house builds.

Ultimately, today's hardware lets you scale tall, not wide. As servers grow cheaper and denser, the cloud’s auto-scaling allure dims for all but the spikiest workloads. Embrace simplicity: start with one big server, add backups, and scale vertically until metrics scream for distribution. Your budget—and ops team—will thank you.

Source analysis based on: specbranch.com
