TranscribeX leverages OpenAI Whisper and NVIDIA Parakeet to deliver lightning‑fast, highly accurate transcriptions and translations—all locally on the Mac. With speaker diarization, AI‑driven summarization, and a full suite of export options, it redefines how developers and content creators handle audio and video on the desktop.

TranscribeX: The Privacy‑First, AI‑Powered Transcription Tool that Turns macOS into a Language Hub

In an age where cloud‑based transcription services dominate, TranscribeX offers a compelling alternative: a fully local, macOS‑native application that turns your machine into a high‑performance language engine. Built on top of OpenAI’s Whisper and NVIDIA’s Parakeet models, it promises both speed and accuracy while keeping your data on the device.

Why Local Matters

"The biggest concern with most transcription services is data privacy. By keeping everything on‑device, TranscribeX eliminates the risk of leaking confidential conversations or proprietary content." – Ethan Oawlly, creator

Local execution means transcription makes no network calls, does not rely on external APIs, and avoids the data‑leak vectors common to SaaS offerings. For developers working with sensitive interview footage, legal depositions, or confidential corporate meetings, that removes a whole class of risk.

Core Features at a Glance

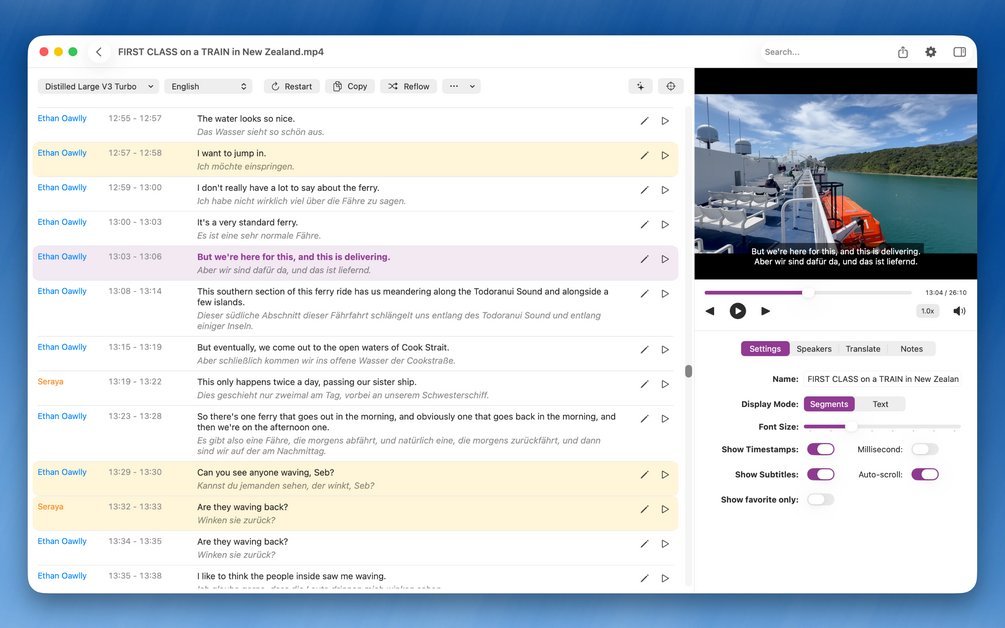

- Massive language support: Transcribe and translate into 100+ languages using Apple Translate or DeepL.

- Automatic Speaker Diarization: Segments audio by speaker, labeling each portion for clarity.

- Batch processing: Import thousands of files and let the engine transcribe them in parallel.

- GPU acceleration: Leverages the M1/M2 GPU to run Parakeet up to 20× faster than Whisper.

- AI‑driven summarization: Plug in ChatGPT, Gemini, or your own API key to auto‑summarize.

- Export versatility: TXT, PDF, SRT, VTT, and professional formats with custom line‑length controls.

- Edit‑friendly workflow: Word‑level timestamps, manual segment creation, and time‑range adjustments.
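To make the export and diarization features concrete, here is a minimal sketch of how speaker‑labeled segments map onto the SRT subtitle format. The `Segment` class and the function names are hypothetical and chosen for illustration; this is not TranscribeX's internal format or code, only the standard SRT cue layout (cue number, `HH:MM:SS,mmm --> HH:MM:SS,mmm`, text, blank line).

```python
from dataclasses import dataclass


@dataclass
class Segment:
    """One diarized transcript segment (hypothetical shape, not TranscribeX's own)."""
    start: float  # seconds from the start of the media
    end: float    # seconds from the start of the media
    speaker: str
    text: str


def srt_timestamp(seconds: float) -> str:
    """Format seconds as the HH:MM:SS,mmm timestamp used by SRT."""
    total_ms = round(seconds * 1000)
    hours, rem = divmod(total_ms, 3_600_000)
    minutes, rem = divmod(rem, 60_000)
    secs, ms = divmod(rem, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d},{ms:03d}"


def to_srt(segments: list[Segment]) -> str:
    """Render segments as numbered SRT cues, prefixing each with its speaker label."""
    cues = []
    for index, seg in enumerate(segments, start=1):
        cues.append(
            f"{index}\n"
            f"{srt_timestamp(seg.start)} --> {srt_timestamp(seg.end)}\n"
            f"{seg.speaker}: {seg.text}\n"
        )
    # Cues are separated by a blank line.
    return "\n".join(cues)


segments = [
    Segment(0.0, 2.5, "Speaker 1", "Welcome to the show."),
    Segment(2.5, 5.04, "Speaker 2", "Thanks for having me."),
]
print(to_srt(segments))
```

VTT follows the same idea with a `WEBVTT` header line and a period instead of a comma before the milliseconds.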

Whisper vs. Parakeet: The Performance Edge

Whisper is renowned for its accuracy, but it can be slow on large files. NVIDIA’s Parakeet is not a Whisper derivative: it is a separate family of speech recognition models built on NVIDIA’s FastConformer architecture, and it offers comparable accuracy in a fraction of the compute time. On an M1‑based Mac, users report transcription speeds of 20–30× real time for 30‑minute videos.
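To put the reported speed‑up in wall‑clock terms, the short calculation below converts a real‑time multiple into processing time. It is plain arithmetic on the user‑reported figures above, not a benchmark; the function name is made up for illustration.

```python
def processing_seconds(media_minutes: float, speed_factor: float) -> float:
    """Wall-clock seconds needed to transcribe media at a given multiple of real time."""
    if speed_factor <= 0:
        raise ValueError("speed_factor must be positive")
    return media_minutes * 60 / speed_factor


# A 30-minute video at the reported 20x and 30x real-time speeds.
for factor in (20, 30):
    print(f"{factor}x real time: {processing_seconds(30, factor):.0f} s")
# prints:
# 20x real time: 90 s
# 30x real time: 60 s
```

In other words, at the reported speeds a 30‑minute recording would take roughly 60 to 90 seconds to transcribe.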

```bash
# Example command-line usage (via CLI wrapper)
transcribex --model parakeet --input video.mp4 --output transcript.srt
```

The CLI is optional; the GUI remains the primary way to use the app.
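For batch jobs, the same command could be driven from a script. The sketch below is an assumption‑heavy illustration: it presumes a `transcribex` binary is on the `PATH` and accepts exactly the `--model`, `--input`, and `--output` flags shown in the example above. The helper names, the `recordings` folder, and the worker count are hypothetical.

```python
import subprocess
from concurrent.futures import ThreadPoolExecutor
from pathlib import Path


def build_command(media: Path, model: str = "parakeet") -> list[str]:
    """Build one CLI invocation that writes the .srt next to the input file."""
    return [
        "transcribex",  # assumed to be installed and on PATH
        "--model", model,
        "--input", str(media),
        "--output", str(media.with_suffix(".srt")),
    ]


def transcribe_all(folder: Path, pattern: str = "*.mp4", workers: int = 2) -> list[int]:
    """Run the CLI over every matching file; return exit codes in sorted file order."""
    files = sorted(folder.glob(pattern))
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # Each worker thread blocks on one external process at a time.
        return list(pool.map(lambda f: subprocess.run(build_command(f)).returncode, files))


# Show the command that would be run for a single file, without executing it.
print(build_command(Path("video.mp4")))
```

Calling `transcribe_all(Path("recordings"))` would then process every `.mp4` in that folder, two at a time. A small worker count is a cautious default, since each process competes for the same GPU and memory.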

Beyond Transcription: A Full‑Featured Toolchain

TranscribeX isn’t just a transcription engine. Its integration with Apple’s native services and third‑party APIs creates a seamless pipeline:

- Capture – Record from any macOS application or import a local file.

- Transcribe – Run Whisper/Parakeet locally.

- Translate – Offload to Apple Translate or DeepL.

- Summarize – Invoke ChatGPT/Gemini via API.

- Export – Choose the format that fits your workflow.

This end‑to‑end flow eliminates context switching and reduces the friction that typically accompanies multi‑tool pipelines.

Privacy‑First Design Choices

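- No audio sent to the cloud: Whisper and Parakeet run on‑device, so transcription generates no network traffic. DeepL translation and ChatGPT/Gemini summarization are cloud services, so transcript text leaves the Mac only if you opt into them.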

- Local GPU utilization: Keeps processing inside the Mac’s hardware boundaries.

- Optional API keys: Users must explicitly provide keys for ChatGPT/Gemini; without them, the app still transcribes with its local models.

The result is a tool that respects user confidentiality while still harnessing the power of modern AI.

Who Should Use TranscribeX?

- Developers working with audio‑heavy documentation or code‑review videos.

- Content creators needing quick, accurate captions for YouTube or podcasts.

- Legal professionals handling depositions that must remain confidential.

- Researchers requiring high‑fidelity transcripts for linguistic analysis.

The one‑time purchase model, coupled with a 7‑day refund guarantee, lowers the barrier to entry for experimentation.

Final Thoughts

TranscribeX demonstrates that powerful AI can live entirely on the edge. By marrying Whisper’s accuracy with Parakeet’s speed—and wrapping it in a privacy‑centric macOS app—it offers a compelling alternative to cloud‑centric services. For anyone who values data sovereignty without sacrificing performance, TranscribeX is worth a test run.
