A newly discovered sophisticated Linux malware framework called VoidLink appears to be the first major instance of AI-generated malware, created by a single developer using coding agents in under a week. Check Point Research found that the 88,000-line codebase shows clear signs of AI-assisted development, including systematic debug outputs, uniform API versioning, and template-like responses that reveal how modern development tools can be weaponized for cybercrime.

The cybersecurity world has been tracking VoidLink, a sophisticated Linux malware framework first documented last week, but new analysis reveals something unprecedented: this 88,000-line codebase was likely built by one person with AI doing most of the heavy lifting. According to Check Point Research, the malware's author made operational security mistakes that exposed the developmental origins, showing what happens when cutting-edge coding assistance meets malicious intent.

The Evidence of AI-Generated Malware

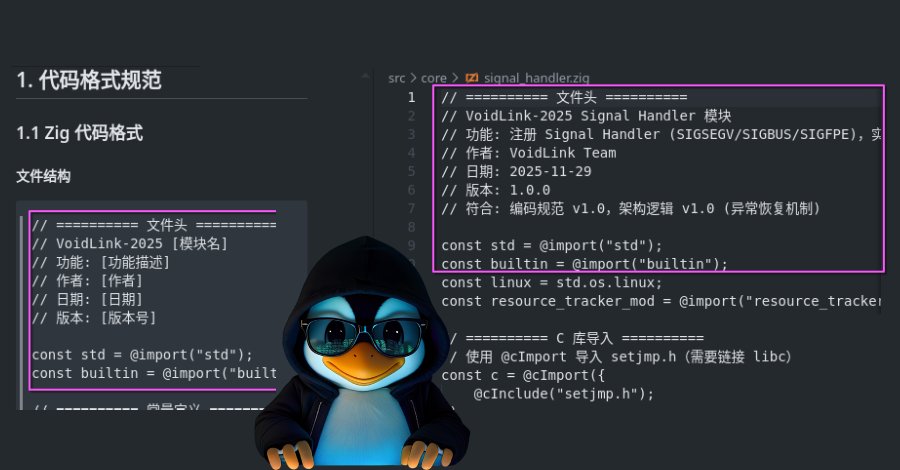

Check Point Research identified several telltale signs that VoidLink wasn't written entirely by human hands. The most compelling evidence comes from the development artifacts left behind in the leaked source code.

First, the debug output across all modules is suspiciously systematic. Every log message follows identical formatting conventions, which is tedious for humans to maintain across thousands of lines but trivial for an AI model. Second, the code contains placeholder data like "John Doe" in decoy response templates, exactly the kind of sample content that appears in LLM training examples.

Perhaps most revealing is the uniform API versioning. Components throughout the codebase carry the same _v3 suffix: BeaconAPI_v3, docker_escape_v3, timestomp_v3. Human developers rarely maintain such rigid consistency across an entire project, but AI models tend to apply a pattern uniformly once it is established.
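To make the "uniform versioning" signal concrete, here is a minimal sketch of how an analyst might quantify it, assuming a list of identifiers has already been extracted from a codebase. This is not Check Point's method; the function name suffix_uniformity and the `_vN` regex are hypothetical choices for illustration.

```python
import re
from collections import Counter

# Hypothetical heuristic: match a trailing version suffix such as "_v3".
VERSION_SUFFIX = re.compile(r"_v(\d+)$")


def suffix_uniformity(identifiers):
    """Return the most common version suffix and the fraction of identifiers using it.

    Identifiers without a version suffix are counted under None.
    """
    if not identifiers:
        return None, 0.0
    suffixes = Counter()
    for name in identifiers:
        match = VERSION_SUFFIX.search(name)
        suffixes[match.group(0) if match else None] += 1
    suffix, count = suffixes.most_common(1)[0]
    return suffix, count / len(identifiers)


if __name__ == "__main__":
    # Names taken from the examples above.
    print(suffix_uniformity(["BeaconAPI_v3", "docker_escape_v3", "timestomp_v3"]))
```

A share close to 1.0 across a large codebase is at most one weak signal. Human teams can enforce the same consistency with linters or naming policies, so a metric like this only supports attribution alongside other evidence.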

The JSON responses also tell a story. They're template-like, covering every possible field with meticulous completeness. Sysdig's initial analysis had already pointed in the same direction: "The most likely scenario: a skilled Chinese-speaking developer used AI to accelerate development while providing the security expertise and architecture themselves."

Spec Driven Development: How It Was Built

Check Point's investigation uncovered the development methodology itself. The threat actor appears to have used a workflow known as "Spec Driven Development (SDD)": the developer first specifies what to build, creates a detailed plan, breaks it into tasks, then lets an AI agent implement each piece.

The timeline is remarkably compressed. Work began in late November 2025 using a coding agent called TRAE SOLO. By early December, the framework had grown to 88,000 lines of code. That's less than two weeks from concept to functional malware.

Internal planning documents written in Chinese, discovered in an exposed open directory, show sprint schedules, feature breakdowns and coding guidelines. These documents have all the hallmarks of LLM-generated content: perfect structure, consistent formatting, and exhaustive detail. The developer essentially created a blueprint and handed it to the AI to execute.

Check Point even replicated the workflow using TRAE IDE and found the model generated code that closely resembled VoidLink's source. "A review of the code standardization instructions against the recovered VoidLink source code shows a striking level of alignment," their report states. "Conventions, structure, and implementation patterns match so closely that it leaves little room for doubt."

What VoidLink Actually Does

VoidLink is designed for long-term, stealthy access to Linux-based cloud environments. Written in Zig, a systems programming language gaining popularity for its performance and safety features, it is a feature-rich framework tailored specifically to cloud infrastructure attacks.

The malware appears to originate from a Chinese-affiliated development environment, though its exact purpose remains unclear. No real-world infections have been observed yet, suggesting this might be a proof-of-concept or a tool still under active development.

What makes VoidLink particularly dangerous is its completeness. It's not just a single exploit tool but a full framework that could be adapted for various cloud attack scenarios. The AI-assisted development likely means the code is well-structured and maintainable, making it easier to extend or modify.

The Broader Implications

This case represents a fundamental shift in how advanced malware gets created. Eli Smadja, group manager at Check Point Research, explains: "What stood out wasn't just the sophistication of the framework, but the speed at which it was built. AI enabled what appears to be a single actor to plan, develop, and iterate a complex malware platform in days – something that previously required coordinated teams and significant resources."

The economics of cybercrime are changing dramatically. Group-IB's recent whitepaper describes AI as supercharging a "fifth wave" in cybercrime evolution. Dark web forum posts mentioning AI keywords have increased 371% since 2019. Threat actors now advertise dark LLMs like Nytheon AI that lack ethical restrictions, along with jailbreak frameworks and synthetic identity kits.

Craig Jones, former INTERPOL director of cybercrime, puts it bluntly: "AI has industrialized cybercrime. What once required skilled operators and time can now be bought, automated, and scaled globally. While AI hasn't created new motives for cybercriminals – money, leverage, and access still drive the ecosystem – it has dramatically increased the speed, scale, and sophistication with which those motives are pursued."

Why This Matters for Security Teams

VoidLink demonstrates that the barrier to entry for sophisticated malware development has collapsed. A single developer with kernel-level knowledge and red team experience can now produce nation-state-quality malware in days rather than months. The AI handles boilerplate, debugging infrastructure, and code generation, while the human provides the strategic vision and security expertise.

This changes defensive priorities. Security teams can no longer count on malware being given away by sloppy code or obvious mistakes, because AI-generated code is likely to be clean, well-structured, and consistent with best practices. Detection must focus on behavioral analysis and intent rather than code quality.

More importantly, organizations need to understand that the threat landscape now includes actors who previously lacked the resources or skills to execute complex attacks but can now do so with AI assistance. The "long tail" of cybercrime just got much more dangerous.

Protecting Against AI-Generated Threats

The rise of AI-assisted malware development means traditional security approaches need evolution:

Behavioral monitoring becomes critical: Since AI-generated code will be well-structured, detection must focus on what the code does rather than how it looks. Implement comprehensive runtime monitoring for cloud environments.

Supply chain scrutiny: VoidLink was built with the TRAE SOLO coding agent. Organizations need policies around which AI assistants are permitted and how their outputs are reviewed. Not all AI-generated code is safe.

Speed matters: The compressed development timeline means threats can emerge and evolve faster than ever. Security teams need automated response capabilities and threat hunting that can keep pace.

Developer education: The same tools that can build malware can also build defenses. Security teams should experiment with AI-assisted development to understand both its power and its risks.

The Arms Race Continues

VoidLink isn't going to be the last AI-generated malware. If anything, it's a preview of what's coming. The cybersecurity industry needs to respond not just with better detection tools, but with AI-assisted defense systems that can match the speed and scale of AI-powered attacks.

The silver lining? The same operational security mistakes that revealed VoidLink's AI origins also show that human oversight remains crucial. AI can generate code, but it can't yet understand the full context of operational security. The gap between AI's coding speed and human operational judgment might be where defenders can still gain an edge.

For now, VoidLink serves as both warning and proof: the future of malware development is here, and it's moving faster than most of us imagined possible.

References:

- Check Point Research - VoidLink Analysis

- Sysdig Threat Research

- Group-IB AI Cybercrime Report

- TRAE IDE Development Platform
