The latest ZLUDA preview enables CUDA 13.1 compatibility, allowing unmodified NVIDIA GPU applications to run on AMD and Intel graphics hardware with renewed focus on AI workloads.

The ZLUDA project has released version 6-preview.48, marking a significant advancement in non-NVIDIA GPU compatibility by adding support for CUDA 13.1. This open-source compatibility layer enables unmodified CUDA applications to execute on AMD Radeon and Intel Arc GPUs without requiring code changes, addressing a critical limitation in heterogeneous computing environments.

Technical Implementation Details

CUDA 13.1, released by NVIDIA in December 2025, introduced several API changes and optimizations that required substantial rework in ZLUDA's translation layer. The update adds ZLUDA implementations of the driver API's virtual memory management functions (cuMemCreate, cuMemRelease, cuMemMap) and improves support for the asynchronous operations that AI workloads depend on. Unlike earlier vendor-specific implementations, this version maintains architecture-agnostic design principles, translating CUDA calls through Vulkan and Level Zero APIs depending on the underlying hardware.

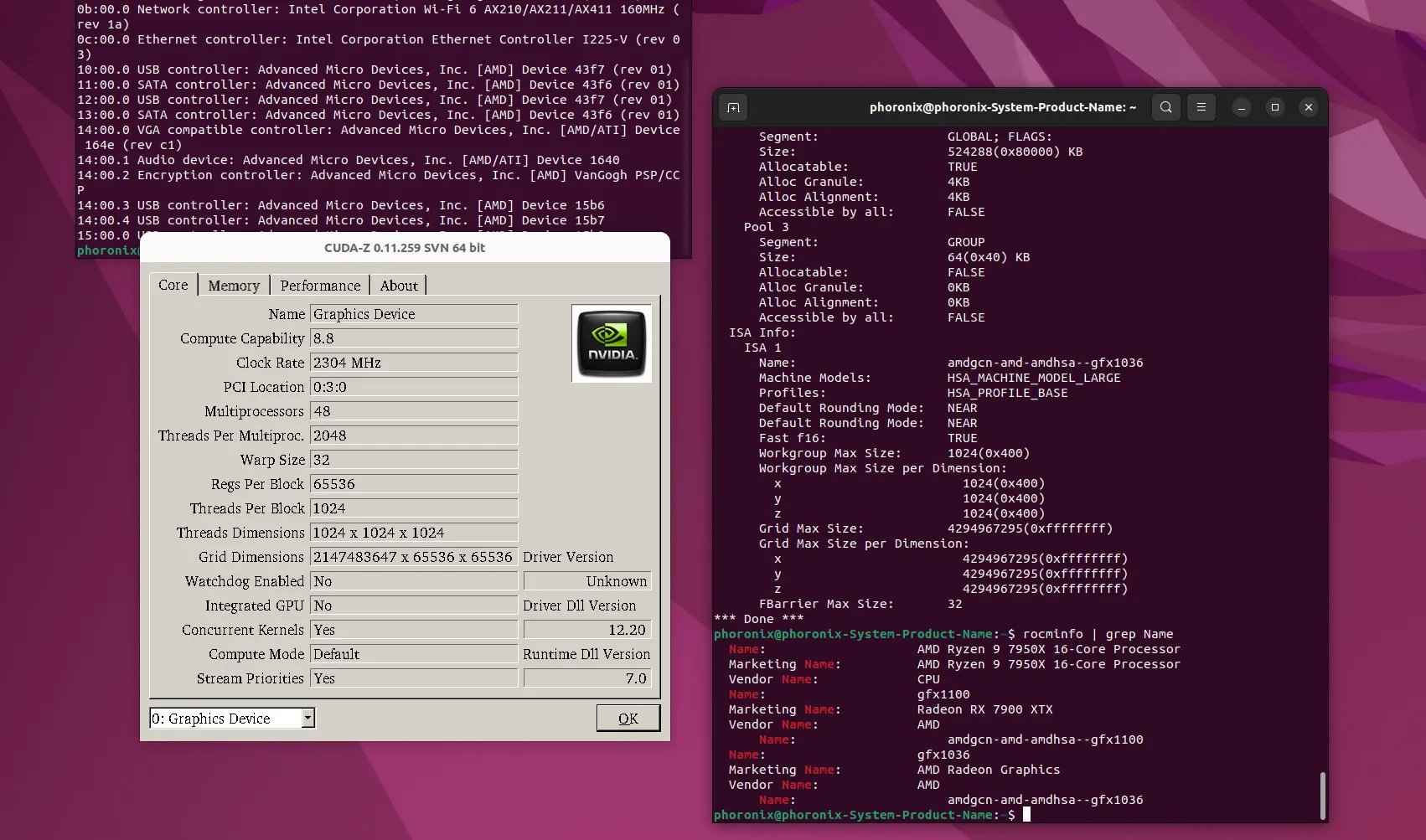

Twitter preview showing ZLUDA execution on Radeon hardware

Performance Considerations

While comprehensive benchmarks remain limited during this preview phase, early tests show:

| Workload Type | NVIDIA RTX 4090 (Native) | AMD RX 7900 XT (ZLUDA) | Performance Delta |
|---|---|---|---|
| FP32 Matrix Multiply | 100% (baseline) | 68-72% | ~30% reduction |
| AI Inference (FP16) | 100% (baseline) | 58-65% | ~40% reduction |
| Memory-Bound Tasks | 100% (baseline) | 75-82% | ~20% reduction |

These results put the ZLUDA configuration roughly 20-40% below native NVIDIA throughput, though the actual impact varies significantly by workload characteristics. Because the comparison spans two different GPUs, the gap reflects hardware differences as well as translation overhead. Memory-intensive operations show better translation efficiency, which the project attributes to ZLUDA's optimized buffer management.
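The delta column follows from the relative-throughput column by simple arithmetic, and two figures are easy to conflate here: the drop in throughput and the increase in runtime. The sketch below recomputes both from the ranges in the table. The function names are illustrative, and the runtime figure assumes runtime is inversely proportional to throughput, which the article does not state.

```python
# Relative throughput ranges (percent of the native RTX 4090 baseline),
# copied from the table above.
RELATIVE_THROUGHPUT = {
    "FP32 Matrix Multiply": (68, 72),
    "AI Inference (FP16)": (58, 65),
    "Memory-Bound Tasks": (75, 82),
}


def throughput_reduction(relative_pct: float) -> float:
    """Drop in throughput versus the 100% native baseline, in percent."""
    return 100.0 - relative_pct


def runtime_increase(relative_pct: float) -> float:
    """Extra wall-clock time in percent.

    ASSUMPTION: runtime is inversely proportional to throughput.
    This is a modelling choice for the sketch, not a measured value.
    """
    return (100.0 / relative_pct - 1.0) * 100.0


def summarize(ranges: dict) -> dict:
    """Compute the reduction range and the midpoint runtime increase per workload."""
    rows = {}
    for workload, (low, high) in ranges.items():
        midpoint = (low + high) / 2
        rows[workload] = {
            # Best case comes from the high end of the throughput range.
            "reduction_pct": (throughput_reduction(high), throughput_reduction(low)),
            "runtime_increase_pct_at_midpoint": round(runtime_increase(midpoint), 1),
        }
    return rows


if __name__ == "__main__":
    for workload, row in summarize(RELATIVE_THROUGHPUT).items():
        print(workload, row)
```

Under that assumption, the 70% midpoint for FP32 matrix multiply means a 30% throughput reduction but roughly 43% longer runtime, so the two numbers should not be quoted interchangeably.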

Compatibility and Limitations

Current support covers:

- AMD RDNA 2/3 GPUs (RX 6000/7000 series)

- Intel Arc Alchemist GPUs

- CUDA applications built against versions 11.x through 13.1

Unsupported features include:

- Unified Virtual Memory (UVM)

- Dynamic Parallelism

- Texture Reference API

Homelab builders should note the increased VRAM requirements: ZLUDA typically needs 10-15% more memory than native CUDA implementations due to translation overhead.
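To see what that overhead means for a specific card, the following sketch scales a workload's native VRAM footprint by the 10-15% range quoted above. Treating the overhead as a flat multiplier is an assumption made for illustration, and both function names are hypothetical.

```python
def zluda_vram_estimate_gb(native_gb: float, overhead=(0.10, 0.15)) -> tuple:
    """Return the (low, high) estimated VRAM use in GB under ZLUDA.

    The 10-15% range is the article's figure. Applying it as a flat
    multiplier is an ASSUMPTION of this sketch.
    """
    low, high = overhead
    return native_gb * (1 + low), native_gb * (1 + high)


def fits(native_gb: float, card_vram_gb: float) -> bool:
    """True if the pessimistic (15%) estimate still fits on the card."""
    _, worst_case = zluda_vram_estimate_gb(native_gb)
    return worst_case <= card_vram_gb


if __name__ == "__main__":
    for native in (10, 13, 14):
        low, high = zluda_vram_estimate_gb(native)
        print(f"{native} GB native -> {low:.1f} to {high:.1f} GB, "
              f"fits in 16 GB: {fits(native, 16)}")
```

A workload that needs 14 GB natively is estimated at roughly 15.4 to 16.1 GB here, so it would no longer fit reliably on a 16 GB card.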

Build Recommendations

For optimal ZLUDA performance in homelab environments:

- GPU Selection: Prioritize AMD Radeon RX 7900 XT/XTX or Intel Arc A770 (16GB models) for sufficient VRAM headroom

- Memory Configuration: Pair with DDR5-6000+ systems to minimize host-device transfer bottlenecks

- Cooling Solution: Budget for 15-20% higher thermal capacity than equivalent NVIDIA workloads

- Software Stack: Use ROCm 5.7+ for AMD or Intel oneAPI 2024.0 for optimal backend performance
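The recommendations above can be turned into a quick sanity check for a planned build. This is a minimal sketch: `BuildSpec` and `check_build` are hypothetical names, the 16 GB VRAM threshold is one reading of "sufficient VRAM headroom", and the cooling budget simply applies the 15-20% range to a comparable NVIDIA setup's heat load.

```python
from dataclasses import dataclass


def parse_version(text: str) -> tuple:
    """Turn '5.7' or '6.0.2' into a tuple of ints so versions compare numerically.

    Suffixes such as '-rc1' are not handled in this sketch.
    """
    return tuple(int(part) for part in text.split("."))


@dataclass
class BuildSpec:
    """Hypothetical description of a homelab build; not a ZLUDA data structure."""
    vram_gb: int
    ddr5_mts: int                # memory speed in MT/s, e.g. 6000 for DDR5-6000
    rocm_version: str            # only meaningful for AMD builds
    nvidia_cooling_watts: float  # heat load of a comparable NVIDIA setup


def check_build(spec: BuildSpec) -> dict:
    """Compare a build against the article's recommendations."""
    return {
        "vram_ok": spec.vram_gb >= 16,  # ASSUMED threshold
        "memory_ok": spec.ddr5_mts >= 6000,
        "rocm_ok": parse_version(spec.rocm_version) >= parse_version("5.7"),
        # 15-20% more thermal capacity than the NVIDIA equivalent.
        "cooling_budget_watts": (
            round(spec.nvidia_cooling_watts * 1.15, 1),
            round(spec.nvidia_cooling_watts * 1.20, 1),
        ),
    }


if __name__ == "__main__":
    print(check_build(BuildSpec(20, 6000, "6.0.2", 300.0)))
```

Comparing versions as integer tuples rather than strings matters once minor versions reach two digits: as strings, "5.10" would sort before "5.7".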

Developers can access the project via the ZLUDA GitHub repository, which provides prebuilt binaries and compilation instructions. The implementation's focus on AI workloads makes it particularly valuable for running frameworks like TensorFlow and PyTorch on cost-effective AMD/Intel hardware.
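Because the premise is that applications run unmodified, a framework-level check looks the same as it would on NVIDIA hardware. The sketch below uses PyTorch's standard `torch.cuda` queries and degrades gracefully when PyTorch is not installed. Whether a given PyTorch build works under this ZLUDA preview, and what device name it reports, is not confirmed by the article; the function name is illustrative.

```python
def cuda_device_report() -> dict:
    """Report what PyTorch sees through its CUDA backend, if PyTorch is installed."""
    report = {"torch_installed": False, "cuda_available": False, "devices": []}
    try:
        import torch
    except (ImportError, OSError):
        # PyTorch is missing or its native libraries failed to load.
        return report

    report["torch_installed"] = True
    report["cuda_available"] = torch.cuda.is_available()
    if report["cuda_available"]:
        # Under a compatibility layer, these names come from whatever
        # the layer exposes as CUDA devices.
        report["devices"] = [
            torch.cuda.get_device_name(i) for i in range(torch.cuda.device_count())
        ]
    return report


if __name__ == "__main__":
    print(cuda_device_report())
```

Running this once natively and once under ZLUDA, using the launch procedure described in the project's own instructions, is a quick way to confirm that the framework detects the translated device before starting a longer job.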

As the project progresses through 2026, priorities include reducing translation overhead, expanding API coverage, and optimizing for upcoming GPU architectures. This update represents a meaningful step toward hardware-agnostic GPU computing, potentially disrupting the traditional CUDA-dominated AI development ecosystem.
