Major AI labs are turning to Pokémon Blue as an unconventional benchmark to test their models' long-term planning, risk assessment, and strategic decision-making capabilities, moving beyond traditional performance metrics to evaluate how AI handles complex, multi-step challenges.

The competitive landscape of artificial intelligence benchmarking has taken an unexpected turn toward the Kanto region. Google, OpenAI, and Anthropic are now using Pokémon Blue as a testing ground for their frontier models, creating a novel evaluation framework that measures sustained strategic reasoning rather than performance on short, isolated tasks.

This initiative began in 2025 when Anthropic applied AI lead David Hershey launched "Claude Plays Pokémon" on Twitch, streaming the company's Claude model as it navigated the classic Game Boy RPG. The project has since evolved into a competitive arena where Gemini and GPT models compete against each other and against human players, with official recognition from the respective AI labs.

Why Pokémon Blue Represents a Complex Benchmark

Unlike more tightly constrained games such as Pong or chess, Pokémon presents a multi-layered challenge that tests several AI capabilities simultaneously. The game requires:

Strategic Resource Management: Players must balance limited resources (Poké Balls, healing items, money) against long-term goals. An AI must decide whether to invest in current team members or capture new Pokémon, a decision that affects the entire game's trajectory.

Risk Assessment: The game presents constant risk-reward scenarios. Should the AI challenge a high-level trainer for a larger payout of experience and prize money, or grind against weaker opponents for safer experience points? These decisions mirror real-world scenarios where AI systems must weigh potential gains against probable losses.

Long-term Planning: Completing Pokémon Blue takes approximately 50-60 hours of gameplay and demands consistent decision-making across thousands of discrete choices. The AI must maintain strategic coherence over that entire span.

Adaptive Learning: The game's difficulty curve requires the AI to adjust its strategy as it encounters new Pokémon types, gym leaders with specialized teams, and evolving challenges.
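
To make the risk-assessment trade-off above concrete, here is a minimal sketch of an expected-value comparison between a risky option and a safe one. The function names, the option labels, and every number are hypothetical and chosen only for illustration; nothing here is taken from the game's actual mechanics or from any lab's harness.

```python
from typing import Dict, Tuple

def expected_value(win_probability: float, gain_if_win: float, loss_if_lose: float) -> float:
    """Probability-weighted gain minus probability-weighted loss."""
    return win_probability * gain_if_win - (1 - win_probability) * loss_if_lose

def best_option(options: Dict[str, Tuple[float, float, float]]) -> str:
    """Return the name of the option with the highest expected value.

    Each option maps to (win_probability, gain_if_win, loss_if_lose).
    """
    return max(options, key=lambda name: expected_value(*options[name]))

# Made-up numbers: a coin-flip fight with a large payout versus
# a near-certain fight with a small payout. Losing costs the same in both.
options = {
    "challenge strong trainer": (0.5, 1000.0, 400.0),
    "grind weak opponents": (0.875, 200.0, 400.0),
}

choice = best_option(options)
```

With these particular numbers the risky fight has the higher expected value, but a small change in the win probability or the cost of losing flips the answer, which is why the decision has to be re-evaluated as the game state changes.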

As Hershey explained to the Wall Street Journal, "The thing that has made Pokémon fun and that has captured the [machine learning] community's interest is that it's a lot less constrained than Pong or some of the other games that people have historically done this on. It's a pretty hard problem for a computer program to be able to do."

Current State of Competition

The competitive results reveal interesting patterns in AI capabilities:

Gemini and GPT: Both Google's Gemini and OpenAI's GPT models have successfully completed Pokémon Blue. Their respective labs have actively supported these efforts, occasionally tweaking model parameters during gameplay to optimize performance. Having conquered the original game, both models have progressed to sequels like Pokémon Gold and Silver, which introduce additional complexity through day/night cycles, breeding mechanics, and expanded type matchups.

Claude's Challenge: Anthropic's Claude model, despite being the pioneer of this benchmarking approach, has not yet completed the original game. The latest Claude Opus 4.5 model continues streaming its progress, providing a real-time view of its decision-making process. This slower progress isn't necessarily a negative indicator; it may reflect different architectural choices or optimization priorities in Anthropic's model design.

Practical Applications for AI Development

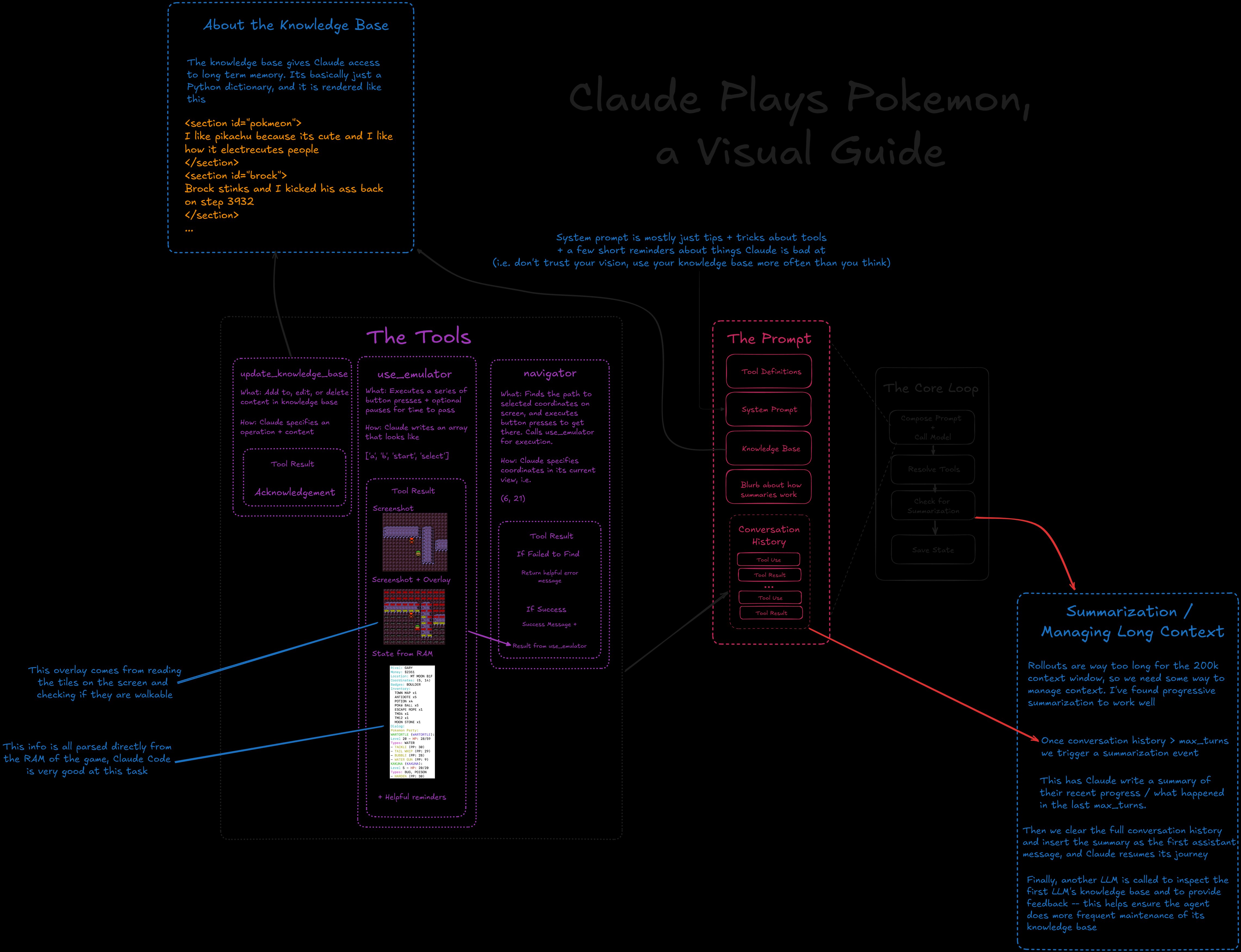

Hershey applies insights from these Pokémon streams to improve AI performance for enterprise clients. The key concept is the "harness": the software framework that surrounds a model and directs its computational resources toward specific tasks.
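
As a rough illustration of what a harness does, the sketch below wraps a decision-making function in a loop that observes the environment, passes the observation plus a bounded slice of recent history to the policy, and applies the chosen action. Everything in it is an assumption made for illustration: `ToyEnvironment` is a one-dimensional stand-in for an emulator, `scripted_policy` is a placeholder where a real harness would call a model, and none of the names reflect Anthropic's actual code.

```python
from dataclasses import dataclass
from typing import Callable, List

@dataclass
class GameState:
    position: int = 0   # toy stand-in for the player's location
    goal: int = 5       # toy stand-in for an objective such as a gym
    steps: int = 0      # number of actions applied so far

class ToyEnvironment:
    """Hypothetical one-dimensional world standing in for a game emulator."""

    def __init__(self) -> None:
        self.state = GameState()

    def observe(self) -> str:
        # Serialize the state as text, the way a harness might describe a screen.
        return f"position={self.state.position} goal={self.state.goal}"

    def apply(self, action: str) -> None:
        if action == "right":
            self.state.position += 1
        elif action == "left":
            self.state.position -= 1
        self.state.steps += 1

    def done(self) -> bool:
        return self.state.position == self.state.goal

def scripted_policy(observation: str, history: List[str]) -> str:
    """Placeholder for a model call: parse the observation and head for the goal."""
    fields = dict(part.split("=") for part in observation.split())
    return "right" if int(fields["position"]) < int(fields["goal"]) else "left"

def run_harness(
    env: ToyEnvironment,
    policy: Callable[[str, List[str]], str],
    max_steps: int = 100,
    history_limit: int = 10,
) -> List[str]:
    """Observe, decide, act, and record, until the goal or the step budget is reached."""
    history: List[str] = []
    actions: List[str] = []
    for _ in range(max_steps):
        if env.done():
            break
        observation = env.observe()
        # Only the most recent entries are passed on, mimicking a bounded context.
        action = policy(observation, history[-history_limit:])
        env.apply(action)
        history.append(f"{observation} -> {action}")
        actions.append(action)
    return actions

env = ToyEnvironment()
actions = run_harness(env, scripted_policy)
```

The design choices that matter in a real harness live in the parts this sketch keeps trivial: how the game state is described to the model, how much history it sees, and how many steps it is allowed before the run is cut off.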

By observing how Claude navigates Pokémon's decision trees, Hershey identifies patterns in how the model allocates attention, processes game state information, and makes sequential choices. These observations translate directly to improving compute efficiency for real-world applications:

- Customer Service Bots: Better long-term conversation management

- Research Assistants: Improved multi-step problem solving

- Business Planning Tools: Enhanced risk assessment capabilities

The Pokémon benchmark provides quantitative metrics for these improvements: completion time, resource efficiency, and strategic success rate.
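
The article does not say how these three metrics are defined, so the sketch below uses assumed definitions purely to show how they could be computed from a run log: completion time as hours played on a finished run, resource efficiency as milestones reached per 1,000 actions, and strategic success rate as the share of battles won. The `RunLog` fields, the `summarize` function, and the sample numbers are all hypothetical.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class RunLog:
    completed: bool          # did the run finish the game?
    hours_played: float
    actions_taken: int       # button presses or tool calls issued
    milestones_reached: int  # e.g. badges earned (assumed proxy for progress)
    battles_won: int
    battles_fought: int

@dataclass
class RunSummary:
    completion_hours: Optional[float]  # None if the run did not finish
    milestones_per_1k_actions: float   # assumed proxy for resource efficiency
    battle_win_rate: float             # assumed proxy for strategic success rate

def summarize(run: RunLog) -> RunSummary:
    """Compute the three assumed metrics, guarding against division by zero."""
    completion_hours = run.hours_played if run.completed else None
    if run.actions_taken:
        efficiency = 1000 * run.milestones_reached / run.actions_taken
    else:
        efficiency = 0.0
    win_rate = run.battles_won / run.battles_fought if run.battles_fought else 0.0
    return RunSummary(completion_hours, efficiency, win_rate)

# Made-up sample run, not real benchmark data.
sample = RunLog(
    completed=True,
    hours_played=40.0,
    actions_taken=20000,
    milestones_reached=8,
    battles_won=90,
    battles_fought=120,
)
summary = summarize(sample)
```

Comparing summaries across models is only meaningful when the runs share the same harness and step budget, since those choices affect all three numbers.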

Broader Implications for AI Evaluation

This trend represents a shift in how AI capabilities are measured. Traditional benchmarks like GLUE or SuperGLUE test specific linguistic tasks, while game-based evaluation tests integrated reasoning.

Quantifiable Progress: Unlike subjective assessments of AI responses, Pokémon completion provides clear success/failure criteria and measurable progress metrics.

Transferable Skills: The strategic planning tested in Pokémon resembles the planning required in business, research methodology, and complex project management, areas where AI systems are increasingly deployed.

AGI Pathway: As the industry moves toward artificial general intelligence, systems must handle long-running, sequential tasks. Pokémon provides a controlled environment to test these capabilities before deployment in critical applications.

Comparison with Other AI Benchmarks

This approach contrasts with simpler programming challenges. In a previous exercise where models were asked to clone Minesweeper, OpenAI's Codex succeeded while Google's Gemini failed to produce a playable game. Pokémon represents a significant step up in complexity, requiring not just code generation but continuous strategic execution.

The gaming benchmark also differs from traditional AI evaluation in its emphasis on emergent behavior. Unlike supervised learning tasks with clear right/wrong answers, Pokémon requires models to develop their own strategies based on incomplete information and changing conditions.

Future Directions

As AI models continue to evolve, expect this gaming benchmark to expand:

- Multiplayer Scenarios: Models competing against each other in real-time strategy games

- Procedural Challenges: Unseen game variants to test adaptability

- Cross-domain Transfer: Evaluating whether strategies developed in Pokémon generalize to other complex tasks

The Pokémon benchmarking trend demonstrates the AI industry's creative approach to evaluation. By using a beloved childhood game, researchers have created an engaging, quantifiable, and surprisingly comprehensive test of artificial intelligence capabilities that goes far beyond traditional metrics.

For those interested in following these benchmarks live, the "Claude Plays Pokémon" stream continues on Twitch, providing a real-time view of AI strategic reasoning in action.
