AMD unveils Epyc Venice CPUs and Instinct MI400-series GPUs, teases the MI500 series, and details rack-scale Helios systems built on UALink, positioning itself as a competitive alternative to Nvidia's proprietary AI infrastructure.

At CES 2026, AMD declared war on Nvidia's AI supremacy, detailing its Epyc Venice CPUs and Instinct MI400-series GPUs while teasing a future MI500 series that it says will deliver dramatic performance gains. The move targets enterprises and hyperscalers seeking alternatives to proprietary AI infrastructure.

Questionable performance metrics

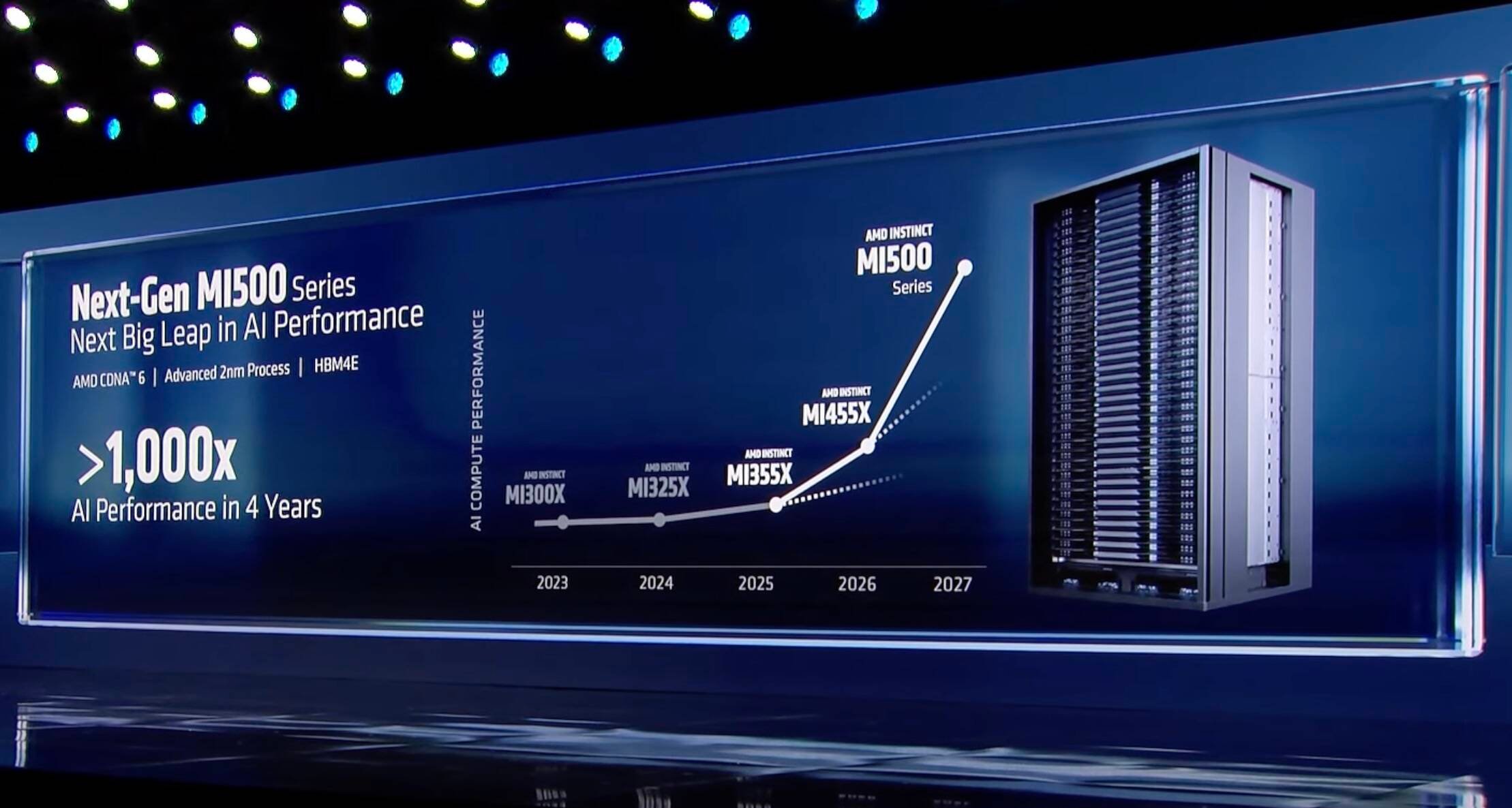

AMD CEO Lisa Su claimed the upcoming MI500-series GPUs would deliver a 1000x performance boost over 2024's MI300X GPUs. However, the comparison appears disingenuous: AMD later clarified that it sets an eight-GPU MI300X node against an entire MI500 rack containing an unspecified number of GPUs.
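To see why the GPU count matters, here is a minimal Python sketch that converts a node-versus-rack ratio into an implied per-GPU ratio. The rack sizes in it are hypothetical placeholders, since AMD has not said how many GPUs an MI500 rack holds, and the function name is ours, not AMD's.

```python
def implied_per_gpu_speedup(claimed_ratio: float, baseline_gpus: int, rack_gpus: int) -> float:
    """Per-GPU speedup implied when `baseline_gpus` are compared against `rack_gpus`."""
    if baseline_gpus <= 0 or rack_gpus <= 0:
        raise ValueError("GPU counts must be positive")
    # The rack has rack_gpus / baseline_gpus times as many devices,
    # so that factor has to be divided out of the headline ratio.
    return claimed_ratio * baseline_gpus / rack_gpus


CLAIMED_RATIO = 1000.0   # headline figure from the keynote
MI300X_NODE_GPUS = 8     # baseline: one eight-GPU MI300X node

# Hypothetical rack sizes for illustration only; AMD has not disclosed the real count.
HYPOTHETICAL_RACK_GPU_COUNTS = [72, 144, 256]

for rack_gpus in HYPOTHETICAL_RACK_GPU_COUNTS:
    per_gpu = implied_per_gpu_speedup(CLAIMED_RATIO, MI300X_NODE_GPUS, rack_gpus)
    print(f"{rack_gpus:>4} GPUs per rack -> about {per_gpu:.0f}x per GPU")
```

Under these assumed rack sizes, the headline 1000x shrinks by roughly one order of magnitude once it is expressed per GPU, which is why the undisclosed configuration matters.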

"These astronomical performance claims require scrutiny," notes hardware analyst Elena Rodriguez. "AI workload efficiency depends on interconnect bandwidth, memory architecture, and software optimization – factors AMD hasn't fully detailed. Without knowing MI500's configuration, the 1000x claim remains marketing theater."

MI400-series: Three-tiered assault

AMD revealed concrete specifications for its 2026-bound MI400-series GPUs:

- MI455X: AI-optimized for Helios rack systems (40 petaFLOPS FP4 inference)

- MI440X: Traditional eight-GPU configuration for enterprise deployment

- MI430X: Hybrid AI/HPC design for supercomputing applications

The MI455X combines twelve chiplets built on 3nm and 2nm process nodes with twelve 36GB HBM4 stacks, for 432GB of memory and 19.6TB/s of bandwidth. Its packaging confirms AMD's commitment to chiplet architecture, though exact performance across other precision formats (FP8, FP16) remains undisclosed.

Helios: AMD's rack-scale answer to NVL72

AMD's Helios compute blade integrates four MI455X GPUs with one Venice CPU per node. A full rack contains 72 GPUs and 18 CPUs, delivering:

- 2.9 exaFLOPS FP4 performance

- 31TB HBM4 memory

- 1.4PB/s memory bandwidth
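The rack totals follow directly from the per-GPU MI455X figures quoted earlier. The short Python sketch below reproduces that arithmetic as a sanity check. It assumes simple linear scaling across 72 GPUs with decimal units, and the constant and function names are illustrative.

```python
# Per-GPU and per-rack figures as quoted in the article.
GPUS_PER_BLADE = 4
GPUS_PER_RACK = 72
HBM_STACKS_PER_GPU = 12
GB_PER_STACK = 36
BANDWIDTH_TBPS_PER_GPU = 19.6
FP4_PFLOPS_PER_GPU = 40


def helios_rack_totals() -> dict:
    """Scale per-GPU figures linearly to a full rack (decimal units throughout)."""
    gpu_memory_gb = HBM_STACKS_PER_GPU * GB_PER_STACK
    return {
        "blades": GPUS_PER_RACK // GPUS_PER_BLADE,
        "gpu_memory_gb": gpu_memory_gb,
        "rack_memory_tb": GPUS_PER_RACK * gpu_memory_gb / 1000,
        "rack_bandwidth_pbps": GPUS_PER_RACK * BANDWIDTH_TBPS_PER_GPU / 1000,
        "rack_fp4_exaflops": GPUS_PER_RACK * FP4_PFLOPS_PER_GPU / 1000,
    }


for name, value in helios_rack_totals().items():
    print(f"{name}: {value:.2f}")
```

The results round to the quoted 2.9 exaFLOPS, 31TB, and 1.4PB/s, and the 18 blades match the one-CPU-per-blade count of 18 Venice processors.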

Crucially, Helios uses Ultra Accelerator Link (UALink), an open interconnect standard that AMD backs as an alternative to Nvidia's NVLink, tunneled over Ethernet. "UALink could reshape AI infrastructure economics," observes data center strategist Marcus Tan. "Enterprises gain negotiation leverage when proprietary technologies aren't mandatory."

Venice CPU: Architectural evolution

The Venice CPU marks AMD's highest-core-count processor yet, featuring:

- Up to 256 Zen 6 cores

- 128 PCIe 6.0 lanes

- 16-channel DDR5 8800 memory

- Dual I/O dies with eight 32-core CCDs
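These figures can be cross-checked with a little arithmetic. The Python sketch below derives the core count from the CCD layout and estimates a theoretical peak memory bandwidth. The 8-byte (64-bit) data width per channel is our assumption, not something AMD has confirmed for Venice, and the result is an upper bound rather than an AMD-published number.

```python
CCDS = 8
CORES_PER_CCD = 32
MEMORY_CHANNELS = 16
TRANSFERS_PER_SECOND = 8800e6   # DDR5-8800 means 8800 mega-transfers per second
BYTES_PER_TRANSFER = 8          # ASSUMPTION: 64-bit data path per channel


def venice_totals() -> tuple[int, float]:
    """Return (total cores, theoretical peak memory bandwidth in GB/s)."""
    cores = CCDS * CORES_PER_CCD
    peak_gbps = MEMORY_CHANNELS * TRANSFERS_PER_SECOND * BYTES_PER_TRANSFER / 1e9
    return cores, peak_gbps


cores, peak_gbps = venice_totals()
print(f"cores: {cores}")
print(f"theoretical peak memory bandwidth: {peak_gbps:.1f} GB/s")
print(f"per-core share: {peak_gbps / cores:.2f} GB/s")
```

Under that assumption the socket tops out at roughly 1.1TB/s, or about 4.4GB/s per core when all 256 cores are active. Sustained bandwidth in practice would be lower.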

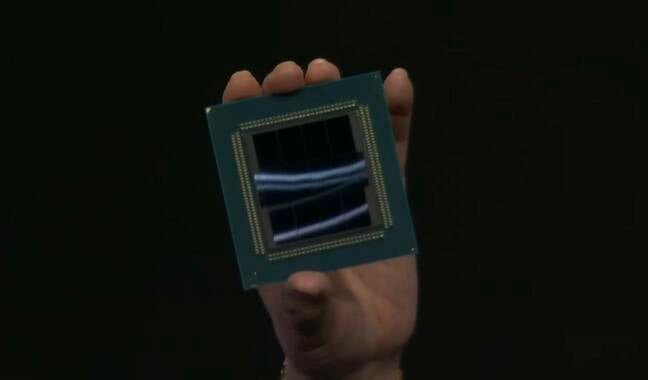

Die shots reveal eight auxiliary chiplets of unknown function flanking the I/O dies. While AMD hasn't confirmed whether Venice uses high-frequency or dense cores, its 3D-stacked cache variants will likely target HPC workloads that benefit from gigabyte-scale L3 caches.

Competitive implications

By combining Venice CPUs with UALink-based Helios racks, AMD positions itself as the primary challenger to Nvidia's AI hegemony:

| System | FP8 Performance | Memory Bandwidth | Interconnect |
|---|---|---|---|
| AMD Helios | 1.4 exaFLOPS | 1.4 PB/s | UALink (open standard) |
| Nvidia NVL72 | 5 exaFLOPS | 4.6 PB/s | NVLink (proprietary) |

"AMD's open approach could disrupt Nvidia's pricing power," suggests tech economist Dr. Leah Simmons. "When hyperscalers like Meta and xAI deploy Helios, it pressures Nvidia to justify premium margins."

Environmental considerations

The Helios rack's 600kW power draw mirrors Nvidia's Rubin systems, raising sustainability concerns. AMD hasn't disclosed power efficiency metrics for MI455X or Venice, leaving unanswered questions about operational costs at scale.
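Until AMD publishes efficiency data, the rack-level figures quoted in this article allow only a rough estimate. The Python sketch below computes it. It assumes the 600kW covers the whole rack (GPUs, CPUs, networking, and cooling overhead) and that the rack runs at full load all year, so these are crude bounds, not GPU efficiency ratings.

```python
RACK_POWER_KW = 600        # quoted rack power draw
GPUS_PER_RACK = 72
RACK_FP4_EXAFLOPS = 2.9    # quoted peak FP4 throughput
HOURS_PER_YEAR = 24 * 365  # ASSUMPTION: continuous full-load operation


def rack_power_metrics() -> dict:
    """Rough rack-level power ratios derived from the quoted figures."""
    rack_watts = RACK_POWER_KW * 1000
    rack_tflops = RACK_FP4_EXAFLOPS * 1e6  # 1 exaFLOPS = 1,000,000 teraFLOPS
    return {
        "kw_per_gpu_slot": RACK_POWER_KW / GPUS_PER_RACK,
        "peak_fp4_tflops_per_watt": rack_tflops / rack_watts,
        "annual_energy_gwh": RACK_POWER_KW * HOURS_PER_YEAR / 1e6,
    }


for name, value in rack_power_metrics().items():
    print(f"{name}: {value:.2f}")
```

On these assumptions each GPU slot accounts for roughly 8.3kW of rack power, and a single rack would consume a little over 5GWh a year.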

Path forward

AMD expects MI400-series availability in late 2026, with MI500-series following in 2027. The coming months should reveal whether AMD's architectural bets – particularly UALink's real-world performance – can erode Nvidia's ecosystem advantages. For enterprises locked into proprietary AI stacks, viable alternatives could finally emerge.
