Microsoft's Azure Monitor pipeline transformations, now in public preview, enable granular telemetry processing before ingestion, changing cost and analytics dynamics for multi-cloud environments.

Microsoft's release of Azure Monitor pipeline transformations in public preview represents a strategic shift in cloud-native observability, enabling organizations to preprocess telemetry data before ingestion. This capability fundamentally changes how enterprises manage observability costs, data quality, and analytics efficiency across hybrid and multi-cloud environments.

Transforming Observability Economics

Azure Monitor pipeline transformations allow preprocessing of logs and metrics using Kusto Query Language (KQL) at the collection point. Key financial impacts include:

- Cost Reduction: Filtering redundant events before ingestion directly lowers Azure Log Analytics costs, because Log Analytics is billed on a consumption basis

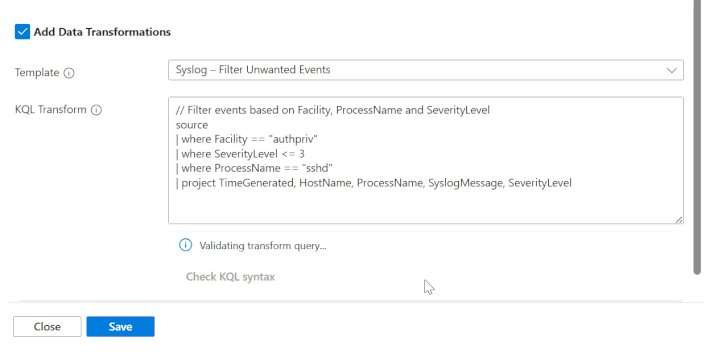

- Noise Reduction: Dropping low-value telemetry (e.g., verbose debug logs) through field-level filtering

- Aggregation Efficiency: Summarizing high-volume data streams (e.g., network logs) into minute-level aggregates
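The filtering and aggregation effects listed above can be modeled outside Azure to get a feel for the volume reduction. The following is a minimal Python sketch, not pipeline syntax: the record fields (`ts`, `level`, `src`), the sample data, and both function names are assumptions made for illustration.

```python
from collections import Counter
from datetime import datetime

# Hypothetical sample records; field names are assumptions for illustration.
RECORDS = [
    {"ts": "2025-01-01T10:00:05", "level": "DEBUG", "src": "10.0.0.1"},
    {"ts": "2025-01-01T10:00:20", "level": "INFO", "src": "10.0.0.1"},
    {"ts": "2025-01-01T10:00:45", "level": "INFO", "src": "10.0.0.2"},
    {"ts": "2025-01-01T10:01:10", "level": "ERROR", "src": "10.0.0.1"},
    {"ts": "2025-01-01T10:01:30", "level": "DEBUG", "src": "10.0.0.2"},
]


def drop_low_value(records, dropped_levels=("DEBUG",)):
    """Filter step: discard records whose level is considered low value."""
    return [r for r in records if r["level"] not in dropped_levels]


def aggregate_per_minute(records):
    """Aggregation step: count records per (minute, level) bucket."""
    counts = Counter()
    for r in records:
        # Truncate the timestamp to the start of its minute.
        minute = datetime.fromisoformat(r["ts"]).replace(second=0, microsecond=0)
        counts[(minute.isoformat(), r["level"])] += 1
    return [
        {"minute": minute, "level": level, "count": n}
        for (minute, level), n in sorted(counts.items())
    ]


kept = drop_low_value(RECORDS)
summary = aggregate_per_minute(kept)
print(f"{len(RECORDS)} raw records -> {len(kept)} kept -> {len(summary)} aggregate rows")
```

In this toy data set, five raw records shrink to two aggregate rows. The real ratio depends entirely on how verbose the workload is and how coarse the aggregation window can be without losing detail that analysts need.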

Schema validation prevents accidental compatibility breaks with standard tables

Multi-Cloud Comparison: Capability Alignment

| Feature | Azure Monitor | AWS CloudWatch | Google Cloud Operations |
|---|---|---|---|
| Pre-ingest Processing | Native KQL transformations | Limited via Lambda/Flows | Requires Dataflow pipelines |
| Schema Enforcement | Built-in validation | Manual configuration | Schema templates |
| Edge Caching | Supported | Via Greengrass | Limited |
| Cost Optimization | Pre-filtering reduces ingestion volume | Post-ingest filtering only | Partial via Log Router |

Azure's approach differentiates itself through native KQL integration and schema-aware processing, which reduces architectural complexity compared with AWS's Lambda-based transformations or GCP's Dataflow requirements.

Migration Implications

For organizations standardizing on Azure Monitor:

- Data Pipeline Rationalization: Replace third-party log shippers (Fluentd, Logstash) with Azure's integrated pipeline

- Schema Standardization: Automatic conversion of CEF/syslog to structured formats simplifies multi-source onboarding

- Connectivity Resilience: Local caching maintains observability during network interruptions
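To make the schema standardization point concrete, here is a rough Python sketch of what converting a CEF line into a structured record involves. It follows the publicly documented CEF layout (seven pipe-delimited header fields followed by key=value extensions). It is not Azure's implementation: the output field names, the `parse_cef` helper, and the sample line are assumptions.

```python
import re

# Names chosen for this sketch; the CEF header itself is positional.
CEF_HEADER_FIELDS = (
    "cef_version", "device_vendor", "device_product", "device_version",
    "signature_id", "name", "severity",
)

# Hypothetical sample: a syslog prefix followed by a CEF payload.
SAMPLE = (
    "<134>Jan 1 10:00:00 fw01 CEF:0|Contoso|Firewall|1.0|100|"
    "Connection denied|5|src=10.0.0.1 dst=10.0.0.2 msg=Blocked by policy"
)


def parse_cef(line):
    """Parse one CEF line into a flat dict; raise ValueError if malformed."""
    start = line.find("CEF:")
    if start == -1:
        raise ValueError("not a CEF record")
    # Split on pipes that are not backslash-escaped: 7 header fields + extension.
    parts = re.split(r"(?<!\\)\|", line[start + 4:], maxsplit=7)
    if len(parts) < 7:
        raise ValueError("incomplete CEF header")
    record = dict(zip(CEF_HEADER_FIELDS, parts[:7]))
    extension = parts[7] if len(parts) > 7 else ""
    # A value runs until the next " key=" token or the end of the line,
    # so values may contain spaces. Escape sequences are left untouched.
    for key, value in re.findall(r"(\w+)=(.*?)(?=\s+\w+=|$)", extension):
        record[key] = value
    return record


record = parse_cef(SAMPLE)
print(record["device_vendor"], record["severity"], record["msg"])
```

All values stay as strings in this sketch. A production converter would also unescape `\|` and `\=` sequences and map fields onto the destination table's column types.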

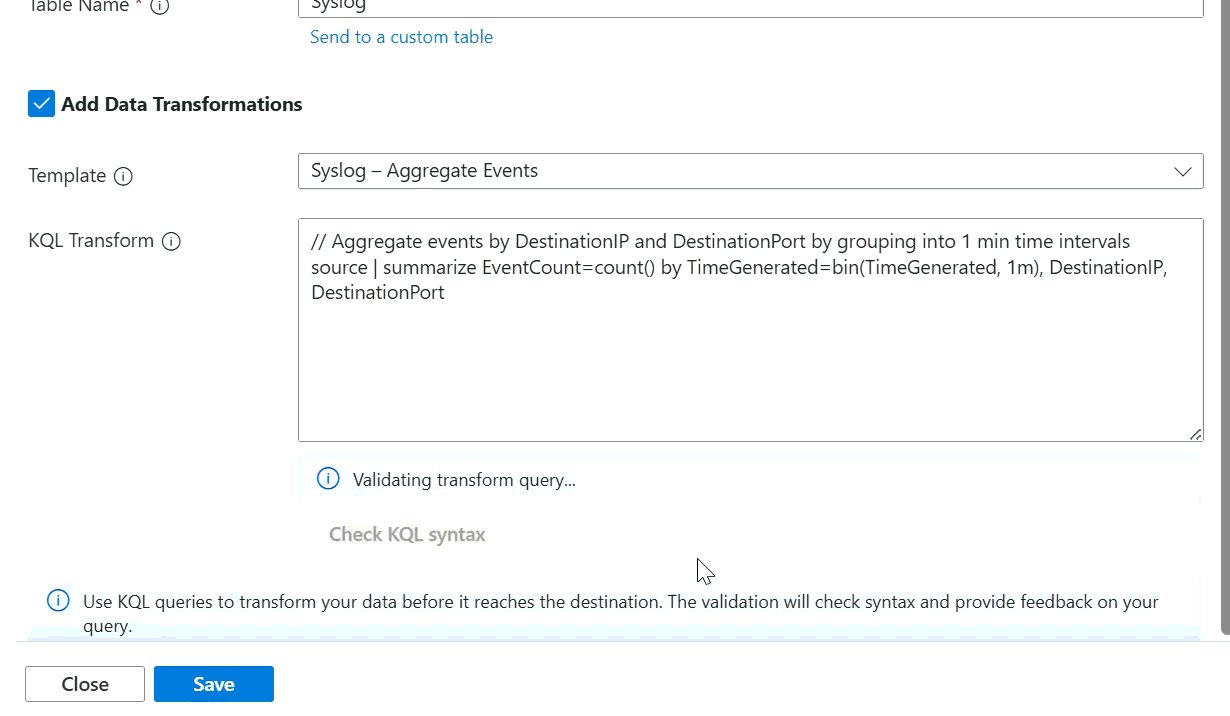

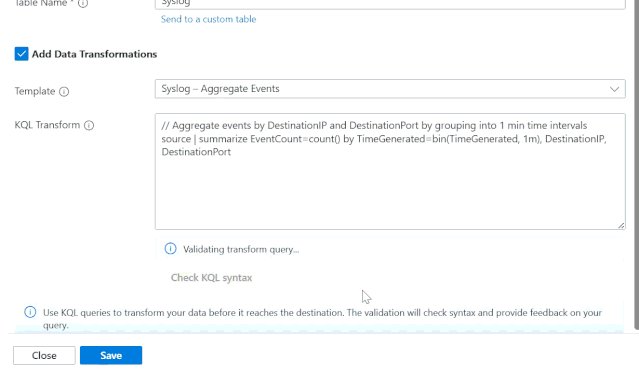

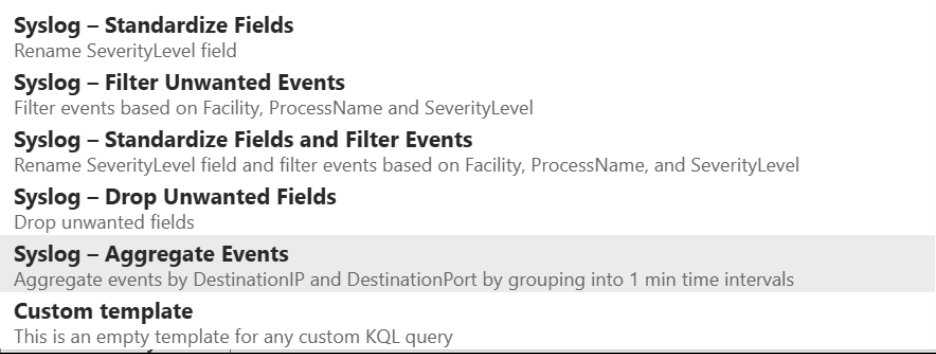

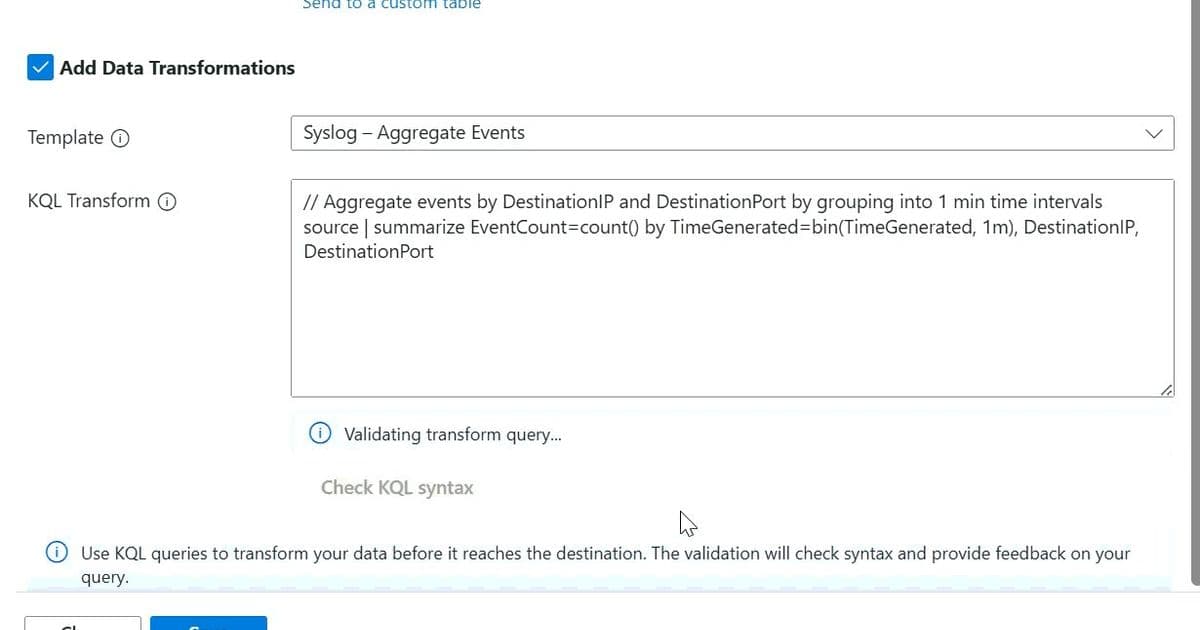

Pre-built KQL templates accelerate implementation of common transformations

Strategic Implementation Guide

- Cost Analysis: Audit current Log Analytics ingestion to identify high-volume, low-value data streams

- Template Adoption: Leverage pre-built KQL templates for common patterns like security event aggregation

- Validation Workflow: Utilize built-in schema checking before deployment

- Hybrid Deployment: Deploy transformation nodes in edge locations using Azure Arc
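The cost analysis step in the guide above amounts to ranking data streams by volume and by the share of that volume that is low value. A minimal Python sketch of that audit might look like the following. The table names, byte counts, the `debug` rule, and the 50% threshold are all hypothetical inputs, not measurements or Azure defaults.

```python
from collections import defaultdict

# Hypothetical audit export: (table, severity level, GB ingested).
# Names and sizes are illustrative, not real measurements.
USAGE = [
    ("Syslog", "debug", 400),
    ("Syslog", "error", 50),
    ("CommonSecurityLog", "info", 300),
    ("CommonSecurityLog", "warning", 100),
    ("AppTraces", "debug", 90),
    ("AppTraces", "error", 10),
]

# Assumption: what counts as "low value" is a site-specific decision.
LOW_VALUE_LEVELS = {"debug"}


def rank_candidates(usage, min_low_value_share=0.5):
    """Return (table, total, low_value_share) tuples, largest total first."""
    total = defaultdict(int)
    low = defaultdict(int)
    for table, level, size in usage:
        total[table] += size
        if level in LOW_VALUE_LEVELS:
            low[table] += size
    candidates = []
    for table, size in total.items():
        if size == 0:
            continue  # avoid dividing by zero for empty streams
        share = low[table] / size
        if share >= min_low_value_share:
            candidates.append((table, size, share))
    return sorted(candidates, key=lambda c: c[1], reverse=True)


for table, size, share in rank_candidates(USAGE):
    print(f"{table}: {size} GB total, {share:.0%} low value")
```

Streams that surface at the top of this ranking are the natural first targets for a filtering or aggregation transformation.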

Supported KQL operations include:

- Filtering: where, contains, comparison operators

- Aggregation: summarize, bin, count

- Schema Management: extend, project, parse_json
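For readers less familiar with KQL, the schema management operators can be approximated in Python: parse_json decodes a JSON string, extend adds computed columns, and project keeps only the named columns. The sketch below is an analogy only. The column names (`TimeGenerated`, `RawData`, `Computer`, `StatusCode`, `IsError`) and the sample row are assumptions, and it says nothing about the exact syntax the pipeline accepts.

```python
import json

# Hypothetical input row; column names are assumptions for illustration.
ROW = {
    "TimeGenerated": "2025-01-01T10:00:00Z",
    "Computer": "web01",
    "RawData": '{"status": "503", "path": "/api/orders"}',
}


def transform(row):
    """Mimic parse_json -> extend -> project on a single record."""
    payload = json.loads(row["RawData"])      # roughly parse_json
    status = int(payload["status"])
    extended = {
        **row,
        "StatusCode": status,                 # roughly extend: new typed column
        "IsError": status >= 500,             # roughly extend: derived flag
    }
    keep = ("TimeGenerated", "StatusCode", "IsError")
    return {k: extended[k] for k in keep}     # roughly project: drop the rest


print(transform(ROW))
```

The raw payload and the unused `Computer` column never reach the destination in this model, which is where both the size reduction and the cleaner schema come from.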

Field-level filtering interface for syslog and CEF data sources

Business Impact Analysis

- TCO Reduction: Early data reduction can lower ingestion costs by 30-60% for verbose workloads

- Analytics Acceleration: Standardized schemas improve query performance by eliminating parsing overhead

- Future-Proofing: Schema validation prevents analytics breakage during transformation updates
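The 30-60% range is easier to reason about with numbers attached. The Python sketch below shows the arithmetic only. The daily volume of 100 GB and the price of 2.50 per GB are hypothetical placeholders, not Azure list prices, and the model ignores commitment tiers, retention, and any charges for the pipeline itself.

```python
def monthly_ingestion_cost(gb_per_day, price_per_gb, reduction=0.0, days=30):
    """Estimate monthly ingestion cost after cutting volume by `reduction`."""
    if not 0.0 <= reduction <= 1.0:
        raise ValueError("reduction must be between 0 and 1")
    return gb_per_day * (1.0 - reduction) * price_per_gb * days


GB_PER_DAY = 100      # hypothetical workload size
PRICE_PER_GB = 2.50   # hypothetical price, not an Azure list price

baseline = monthly_ingestion_cost(GB_PER_DAY, PRICE_PER_GB)
at_30 = monthly_ingestion_cost(GB_PER_DAY, PRICE_PER_GB, reduction=0.30)
at_60 = monthly_ingestion_cost(GB_PER_DAY, PRICE_PER_GB, reduction=0.60)

print(f"baseline: {baseline:,.0f}")
print(f"30% reduction: {at_30:,.0f} (saves {baseline - at_30:,.0f})")
print(f"60% reduction: {at_60:,.0f} (saves {baseline - at_60:,.0f})")
```

Under these assumed inputs, a baseline of 7,500 per month falls to roughly 5,250 at a 30% reduction and 3,000 at 60%. Substituting measured volumes and the actual contracted price gives a more useful estimate.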

For implementation details, refer to the Azure Monitor pipeline documentation. The public preview is available globally through the Azure Portal, with GA expected Q3 2026.
