Trail of Bits has launched the beta of mcp-context-protector, a security wrapper designed to combat line jumping attacks like prompt injection in LLM apps using the Model Context Protocol. By proxying tool calls and implementing trust-on-first-use pinning, guardrail scanning, and ANSI sanitization, it fortifies defenses where malicious servers exploit the model's context window. This tool offers universal compatibility without requiring app modifications, addressing a glaring vulnerability in th

The security of large language model (LLM) applications faces relentless threats, with prompt injection attacks emerging as a particularly insidious vector. Today, Trail of Bits takes a significant step forward with the beta release of mcp-context-protector, a dedicated security wrapper for apps built on the Model Context Protocol (MCP). This innovation directly counters 'line jumping' attacks—where malicious servers inject payloads via tool descriptions or ANSI escape codes to manipulate an LLM’s context window before any tools are invoked. Unlike traditional defenses, mcp-context-protector operates at the critical trust boundary between untrusted servers and the LLM, ensuring that harmful content never reaches the model undetected.

Why Context Window Defense Is Non-Negotiable

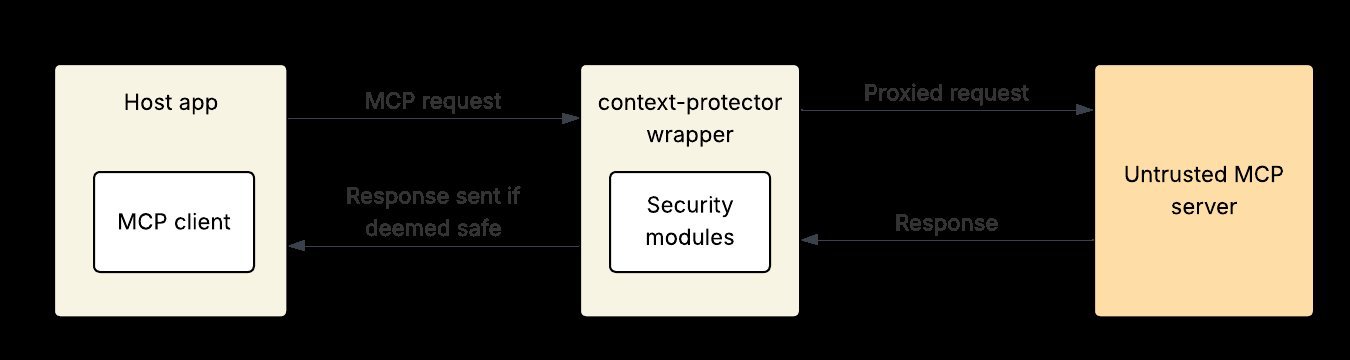

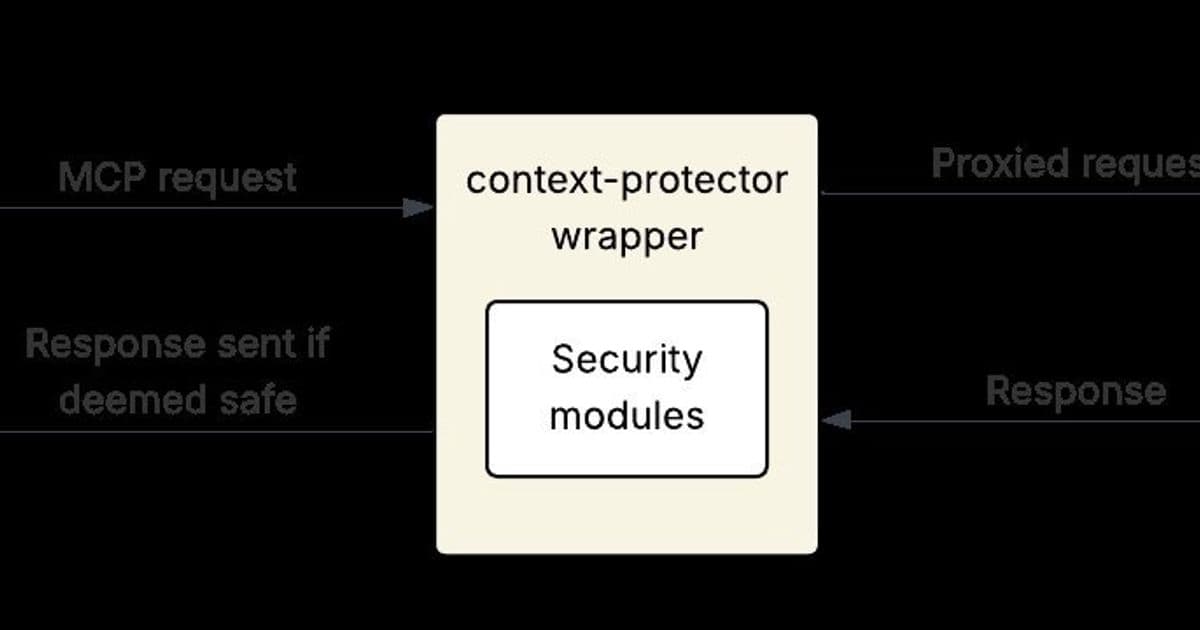

Line jumping attacks bypass human-in-the-loop controls by embedding prompt injections in server instructions or tool descriptions. As Trail of Bits notes, this exploits the model’s context directly, enabling data exfiltration or code execution without triggering malicious tool calls. Conventional security scans fall short here—they might catch initial threats but miss post-update payloads. mcp-context-protector solves this by acting as a proxy, inspecting every server-to-app communication in real-time. Its architecture, depicted below, inserts a security layer that’s both invisible and indispensable.

Inside the Security Wrapper: Key Features and Workflows

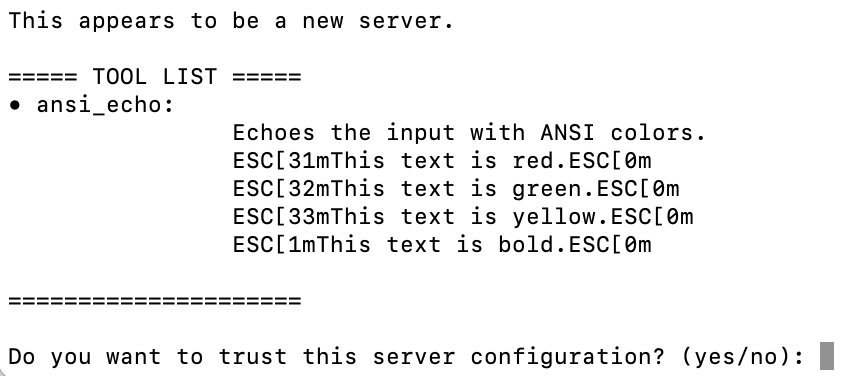

Trust-on-First-Use Pinning ensures safety from the start. When a new MCP server connects, mcp-context-protector blocks all features until the user manually reviews and approves its configuration via a CLI. If updates alter tool descriptions or server instructions, the wrapper locks modified elements, requiring re-approval. This prevents silent injection of new attack vectors—a crucial guard against evolving threats.

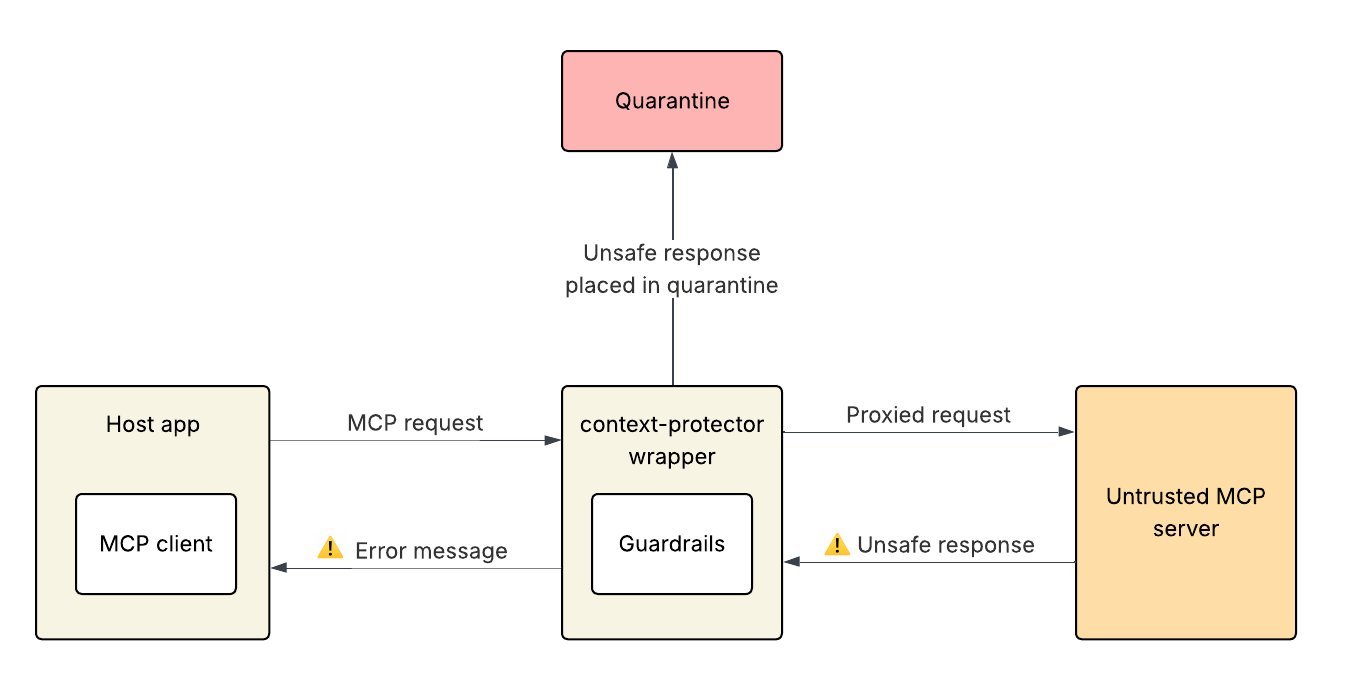

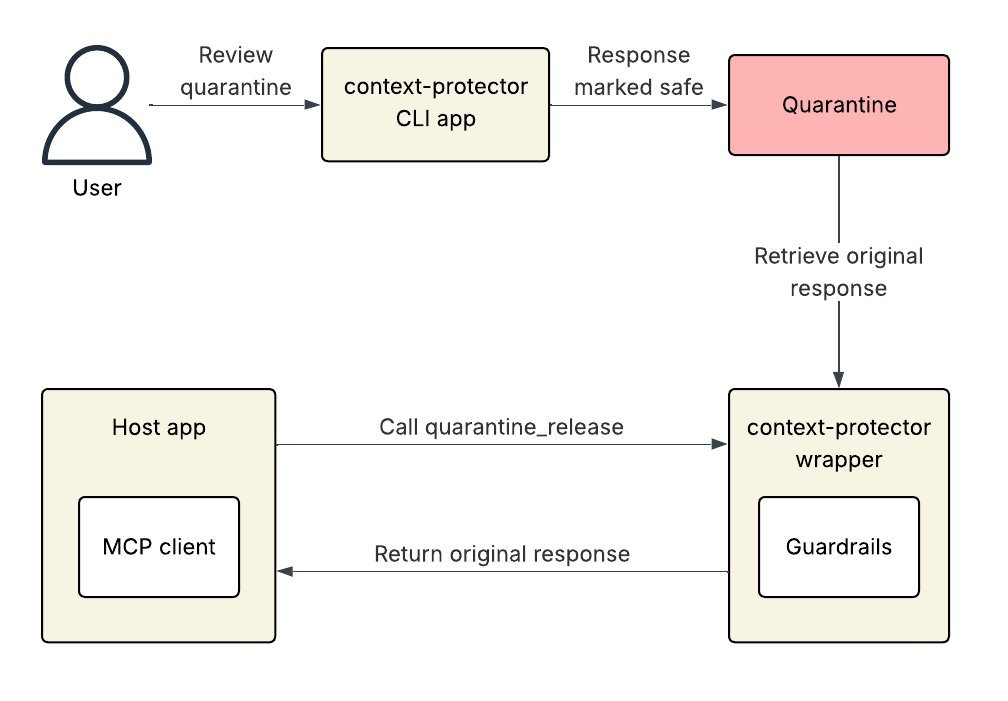

LLM Guardrail Integration adds AI-powered scanning. Tools like Meta’s LlamaFirewall analyze server responses for injection payloads. Unsafe content is quarantined, and the app receives an error message. Users can then review quarantined items in the CLI and release them if benign, as shown here:

ANSI Control Character Sanitization neutralizes obfuscation tactics. By replacing escape characters with the visible string 'ESC', it thwarts attacks that hide malicious code in terminal outputs. This feature activates automatically during configuration reviews, making hidden threats unmistakable:

Wrapper vs. Alternatives: Why Proxying Wins

While projects like the Extended Tool Definition Interface (ETDI) propose protocol-level fixes, they demand ecosystem-wide SDK updates and complex key management. mcp-context-protector, in contrast, works out-of-the-box with any MCP-compliant app or server—no modifications needed. Its proxy model guarantees continuous protection, unlike one-time scanners. As Trail of Bits emphasizes, this 'set it and forget it' approach is the most reliable way to secure the fragile boundary between LLMs and untrusted tools.

Limitations and Practical Considerations

No solution is flawless. mcp-context-protector can’t perform chain-of-thought auditing (e.g., using tools like AlignmentCheck) because it lacks full conversation history access—a deliberate design choice to avoid violating MCP privacy norms. Additionally, frequent server updates could lead to alert fatigue, potentially causing users to overlook genuine threats. For high-velocity environments, Trail of Bits advises centralized security team oversight rather than individual user reviews.

The Path Forward for Secure LLM Ecosystems

mcp-context-protector isn’t just a tool; it’s a paradigm shift in LLM security. By making defenses proactive and pervasive, it empowers developers to integrate third-party MCP servers without compromising safety. Organizations can extend it with custom guardrails, such as data loss prevention checks, tailoring it to their risk profiles. As AI adoption accelerates, tools like this are essential for turning theoretical protocols into trustworthy systems. Trail of Bits invites the community to test, refine, and contribute to the project—because in the battle against prompt injection, every layer of defense counts.

Source: Trail of Bits Blog

Comments

Please log in or register to join the discussion