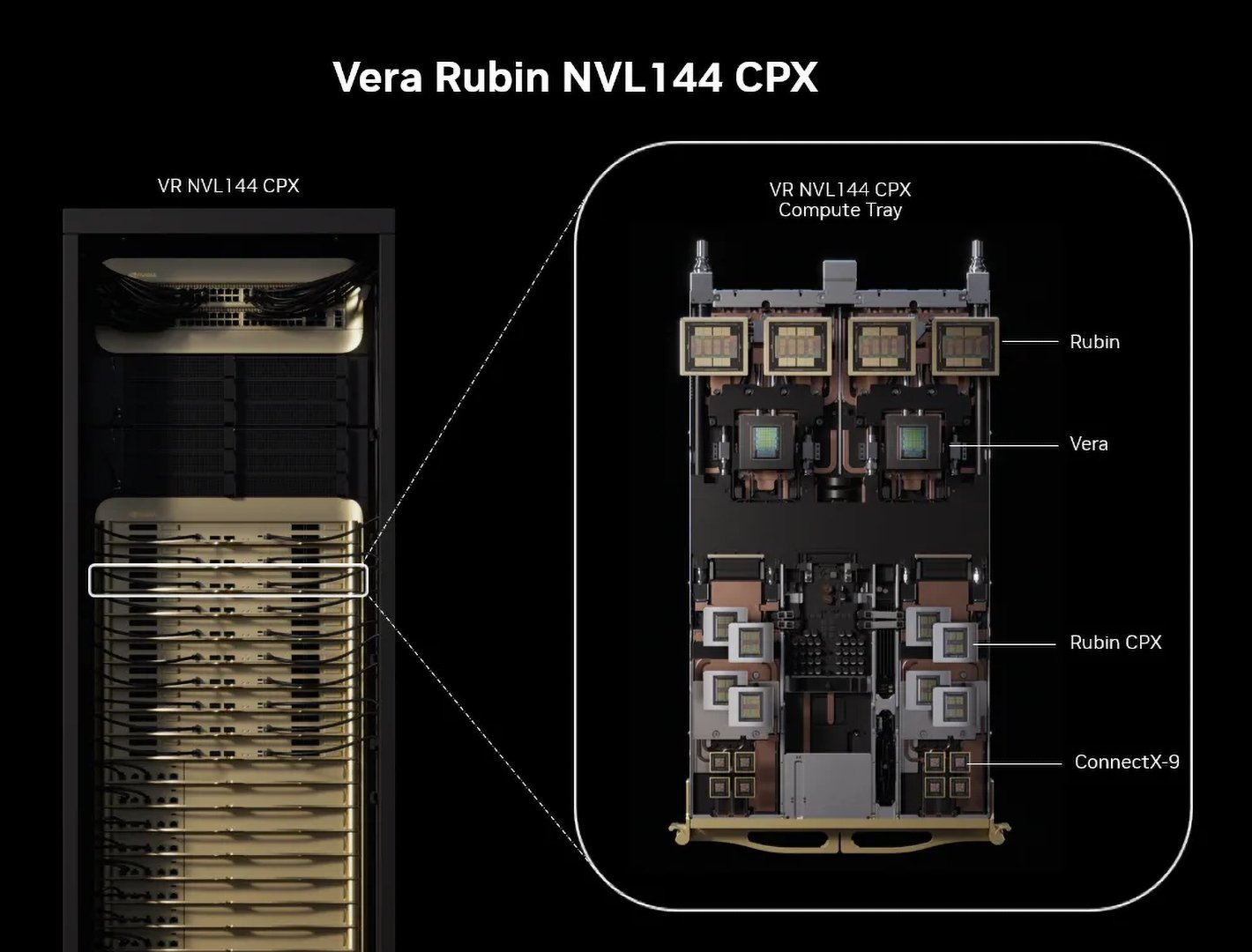

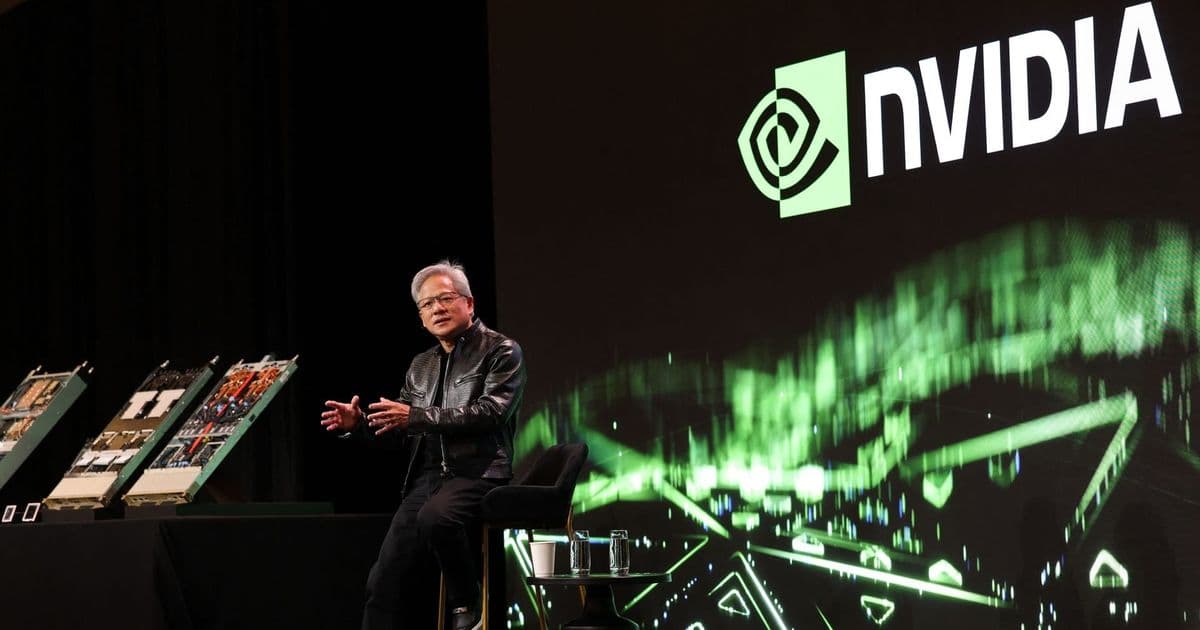

At CES 2026, Nvidia CEO Jensen Huang detailed how Vera Rubin's tray-based design tackles rack downtime, power spikes, and inference economics—revealing a strategic shift toward operational resilience over peak specs.

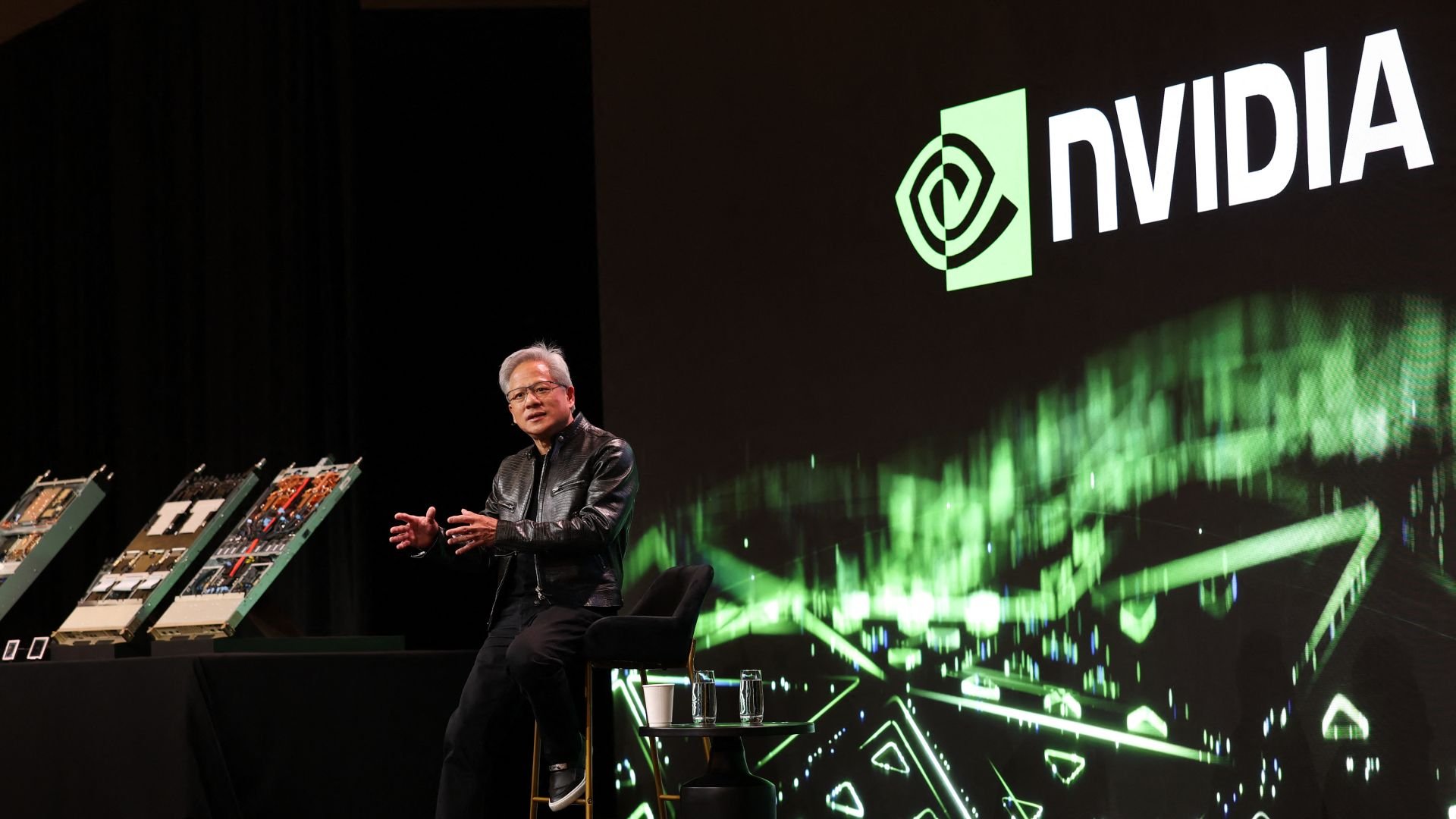

At Nvidia's CES 2026 press Q&A, CEO Jensen Huang moved beyond raw performance metrics to address the operational realities of large-scale AI deployment. With hyperscalers operating multi-billion-dollar AI factories, Huang emphasized three critical challenges: minimizing rack downtime during maintenance, managing instantaneous power spikes, and optimizing for continuous inference workloads. These priorities directly shape Nvidia's Vera Rubin architecture, set to ship in late 2026.

Serviceability as a System Metric

Modern AI racks represent $3M+ investments that generate revenue continuously. Huang highlighted the crippling cost of current maintenance workflows: "When we replace [a component] today, we literally take the entire rack down. It goes to zero... and stays down until you replace the NVLink or nodes." Each outage cascades across thousands of interconnected GPUs, costing operators up to $15,000/hour in lost inference output.

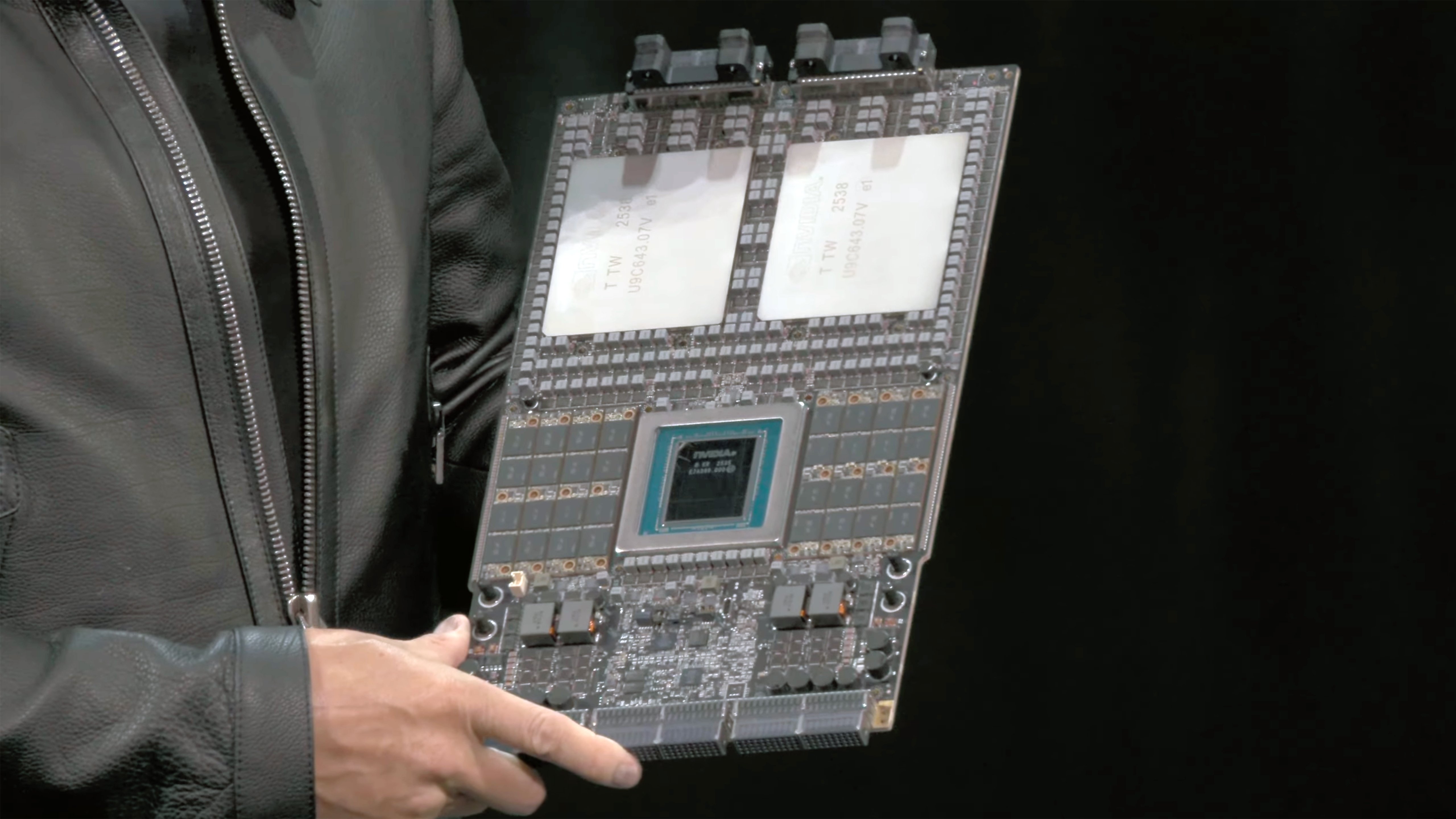

Rubin's solution is a cable-less, tray-based design enabling component-level hot-swapping. By replacing 43 cables with modular NVLink switch trays and liquid-cooled nodes, maintenance time plunges from 2 hours to 5 minutes per node. Crucially, the rack stays operational during replacements—addressing what Huang calls "the $300B problem" of stranded AI capacity. This design choice directly impacts supply chain economics, accelerating deployment velocity by 24x compared to Grace Blackwell systems.

Power: The Silent Scalability Killer

Huang identified transient power demand as the dominant constraint in AI scaling. Today's synchronized training workloads create instantaneous current spikes up to 25% above nominal TDP across all GPUs simultaneously. For Rubin's 1800W GPUs, this forces data centers to either:

- Overprovision power infrastructure by 25%, stranding capital ($500K/rack)

- Install massive battery banks to absorb spikes ($350K/rack)

Rubin attacks this via per-rack power smoothing electronics that flatten demand curves. By coordinating compute/memory power draws across nodes, racks maintain consistent loads within ±3% variance. This enables operators to safely utilize 97% of provisioned power versus today's 75% practical limit. Combined with 100% liquid cooling (vs. 80% in Blackwell), Rubin supports higher coolant temperatures—reducing chiller energy by 30% and enabling deployment in water-constrained regions.

Inference Economics Reshape Architecture

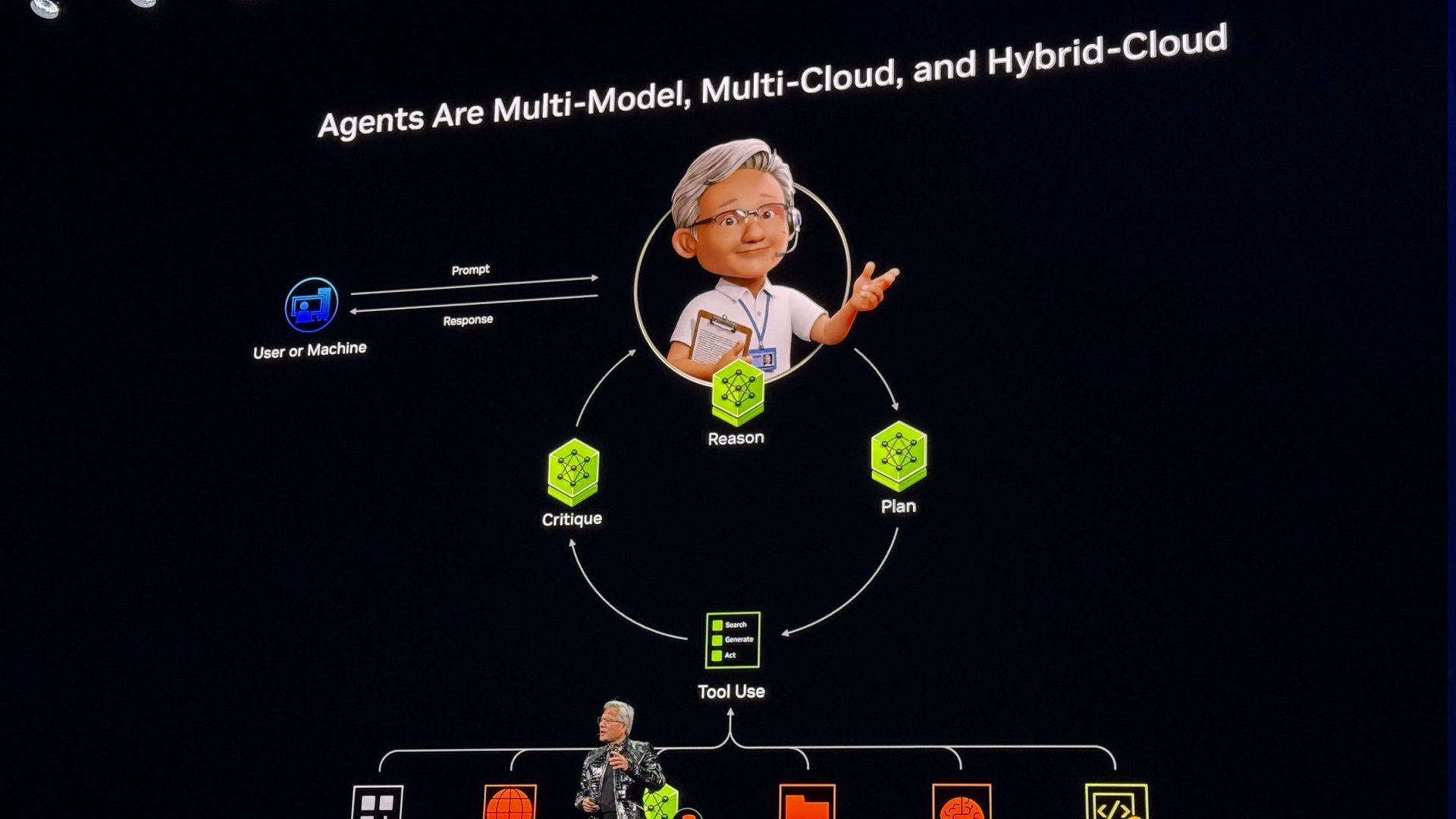

Huang revealed that inference now drives Nvidia's architectural priorities: "One of every four tokens generated today comes from open models." Unlike training bursts, inference operates continuously, making operational efficiency paramount. Key metrics have shifted from FLOPS to:

- Tokens per watt: +40% improvement in Rubin vs. Hopper

- Tokens per dollar: 35% better ROI over 5-year lifespan

- Uptime percentage: Target >99.95% for revenue-generating workloads

This refocus explains Rubin's unified memory model. While cheaper SRAM could reduce BOM costs by 15%, Huang warned: "You sell a chip once, but maintain software forever." Fragmenting memory architectures would require multiple software stacks, increasing long-term support costs by 200-300%. Rubin's HBM-only approach preserves a single optimized stack across all deployments—allowing fleet-wide performance gains via software updates.

Open Models Expand Addressable Market

The proliferation of open-source models like Llama-3 and Mistral is accelerating inference demand beyond hyperscalers. Huang noted open models now drive 25% of all tokens, deployed across:

- Enterprise data centers (40% growth YoY)

- Regional cloud providers (55% growth)

- On-premises clusters (30% growth)

This diversification pressures Nvidia to support smaller-scale deployments without sacrificing efficiency. Rubin's power smoothing and serviceability features specifically target these environments, where overprovisioning isn't feasible. The result is 22% higher utilization in sub-100kW deployments compared to Blackwell—directly enabling ROI for smaller operators.

The Resilience Premium

Nvidia's Rubin architecture represents a fundamental shift from peak performance to sustained productivity. By prioritizing serviceability (+99% rack uptime), power smoothing (+22% usable power capacity), and inference efficiency (+40% tokens/watt), Rubin addresses the hidden costs plaguing today's AI factories. As Huang concluded: "A rack that runs at 95% for five years outperforms one that peaks at 100% but averages 70%." With inference demand growing 5x annually, these operational gains may matter more than theoretical peaks.

For detailed specifications on Rubin's tray architecture, see Nvidia's Rubin Technical Brief. Power efficiency calculations are documented in the SC24 Power Smoothing Whitepaper.

Comments

Please log in or register to join the discussion