A decade of Apple Watch data analyzed by ChatGPT creates surprising health insights, but physicians caution about limitations and privacy implications.

The rise of consumer wearables has created an unexpected phenomenon in doctors' offices: patients arriving with AI-generated health reports. One individual recently shared their experience of feeding ten years of Apple Watch data into ChatGPT for analysis before consulting their physician, revealing both the potential and pitfalls of this emerging trend.

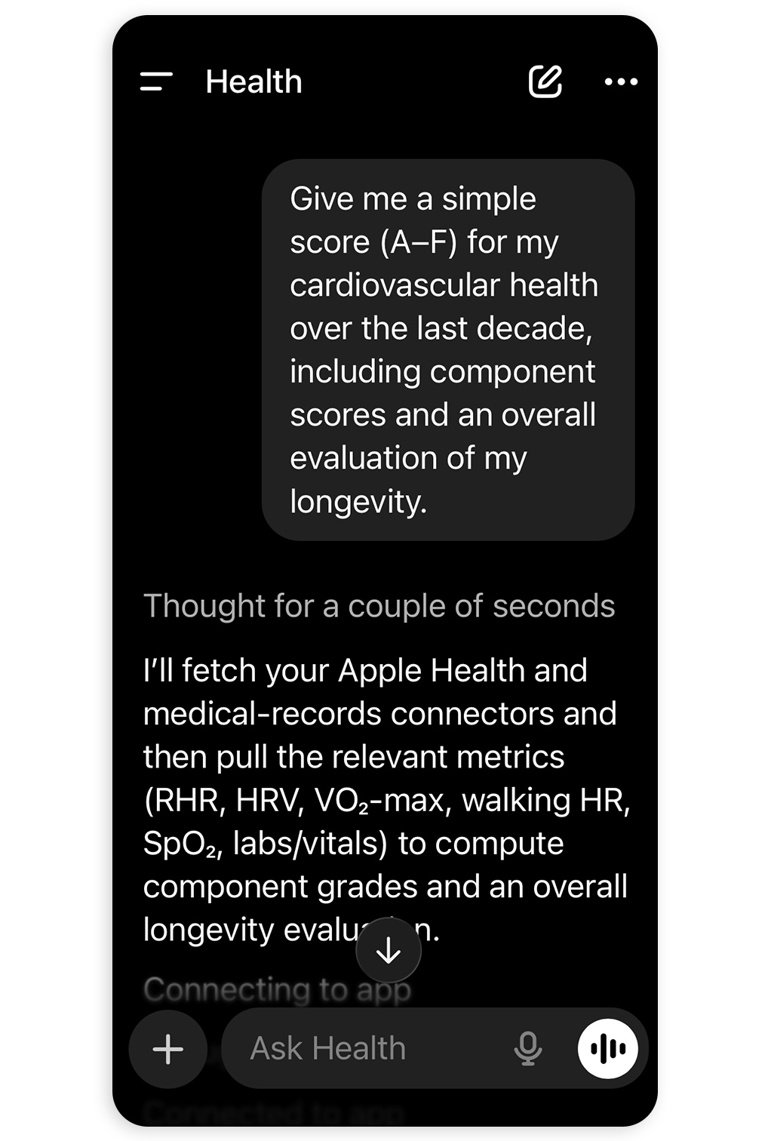

The Experiment

After exporting heart rate variability, sleep patterns, activity levels, and workout metrics from Apple Health, the user prompted ChatGPT to identify patterns and potential health indicators. The AI noted correlations between periods of elevated resting heart rate and work deadlines, detected subtle changes in sleep architecture during stressful life events, and flagged potential overtraining patterns during marathon preparation cycles. Armed with these insights, the patient presented the findings during their annual physical.
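For readers curious what this kind of trend-spotting involves, here is a minimal sketch of summarizing resting heart rate by month from an Apple Health export. It is not the method the user or ChatGPT applied. It assumes the export's `export.xml` stores samples as `Record` elements with `type`, `startDate`, and `value` attributes, and the function name `monthly_resting_hr` and the sample data are made up for illustration.

```python
import xml.etree.ElementTree as ET
from collections import defaultdict
from statistics import mean

# Assumed record type for resting heart rate samples in an Apple Health export.
RESTING_HR = "HKQuantityTypeIdentifierRestingHeartRate"


def monthly_resting_hr(xml_text):
    """Return {"YYYY-MM": mean resting heart rate} from export XML text."""
    root = ET.fromstring(xml_text)
    by_month = defaultdict(list)
    for record in root.iter("Record"):
        if record.get("type") != RESTING_HR:
            continue  # ignore steps, sleep, workouts, and other record types
        start = record.get("startDate", "")
        value = record.get("value")
        if len(start) < 7 or value is None:
            continue  # skip malformed records
        # The first seven characters of the date are the "YYYY-MM" month key.
        by_month[start[:7]].append(float(value))
    return {m: round(mean(v), 1) for m, v in sorted(by_month.items())}


# Made-up sample in the assumed export shape, for illustration only.
SAMPLE = """<HealthData>
  <Record type="HKQuantityTypeIdentifierRestingHeartRate" unit="count/min"
          startDate="2023-01-05 08:00:00 -0500" value="58"/>
  <Record type="HKQuantityTypeIdentifierRestingHeartRate" unit="count/min"
          startDate="2023-01-20 08:00:00 -0500" value="60"/>
  <Record type="HKQuantityTypeIdentifierStepCount" unit="count"
          startDate="2023-01-20 09:00:00 -0500" value="4200"/>
  <Record type="HKQuantityTypeIdentifierRestingHeartRate" unit="count/min"
          startDate="2023-02-03 08:00:00 -0500" value="64"/>
  <Record type="HKQuantityTypeIdentifierRestingHeartRate" unit="count/min"
          startDate="2023-02-10 08:00:00 -0500" value="66"/>
  <Record type="HKQuantityTypeIdentifierRestingHeartRate" unit="count/min"
          startDate="2023-02-17 08:00:00 -0500" value="65"/>
</HealthData>"""

if __name__ == "__main__":
    for month, bpm in monthly_resting_hr(SAMPLE).items():
        print(month, bpm)
```

A decade of real data can run to hundreds of megabytes, so a streaming parser such as `ET.iterparse` would likely be a better fit than loading the whole file at once. A monthly average like this only shows that a trend exists; it says nothing about its cause.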

Medical Reception

Physicians report mixed reactions to such data dumps. "While longitudinal data is clinically valuable," explains cardiologist Dr. Anya Petrova, "raw sensor outputs lack medical context. What ChatGPT interprets as 'concerning heart rate variability' might simply reflect poor sleep hygiene." She acknowledges, however, that patients who actively engage with their data often demonstrate higher treatment adherence. The doctor in this case reportedly used the AI-generated observations as conversation starters but relied on clinical tests for actual diagnoses.

Critical Limitations

Three significant concerns emerge:

- Algorithmic Blind Spots: Consumer wearables are, for the most part, not medical devices. Even the FDA-cleared ECG feature on Apple Watch is designed to detect signs of atrial fibrillation, not other cardiac conditions. ChatGPT's analysis of non-validated metrics can therefore convey a false sense of precision.

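- Privacy Implications: Health data that a patient exports to a third-party AI platform generally falls outside HIPAA protections, which apply to healthcare providers, insurers, and their business associates rather than to consumer AI services. As Johns Hopkins privacy researcher Mark Chen notes: "Once data leaves Apple's encrypted ecosystem, it becomes part of training sets with unknown governance."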

- Confirmation Bias: Patients may fixate on AI-generated hypotheses rather than clinical evidence. Stanford's Human-Centered AI Institute recently published guidelines warning against "diagnostic anchoring" from unvalidated AI tools.

The Middle Path

Forward-thinking clinics are developing structured approaches. The Mayo Clinic's pilot program teaches patients to generate PDF summaries via Apple Health's native tools instead of raw data exports. Cleveland Clinic incorporates wearable data through validated platforms like Epic's Apple Watch integration, where physicians view trend analysis within secure EHR systems.

As AI democratizes health data interpretation, the challenge lies in balancing patient empowerment with clinical rigor. While ChatGPT might spotlight trends worth investigating, its conclusions remain starting points—not endpoints—for healthcare decisions. The most valuable outcome of such experiments may ultimately be the heightened health literacy they foster in engaged patients.
