A new forecast from TrendForce projects that data centers will account for over 70% of all high-end memory chip production in 2026, creating a severe supply constraint that is not expected to ease until 2027. The shortage threatens to drive up prices across electronics and could limit the ambitions of AI companies racing to scale their infrastructure.

The AI boom isn't just changing software—it's fundamentally reshaping the global semiconductor supply chain. According to a new analysis from market research firm TrendForce, data centers will consume more than 70% of all high-end memory chips manufactured in 2026, creating a supply crunch that won't see significant relief until new fabrication plants come online in 2027.

The Memory Hierarchy Problem

Modern AI workloads, particularly large language model training and inference, require large amounts of high-bandwidth memory (HBM) and advanced DDR5 modules. Unlike traditional workloads, which can often be tuned to keep their working set in cache, many AI operations are memory-bound: model weights and intermediate state far exceed on-chip cache and must be streamed from memory repeatedly. For transformer architectures, and especially for inference at small batch sizes, data movement between memory tiers is often the primary bottleneck rather than raw processing power.
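To make the memory-bound claim concrete, here is a minimal roofline-style estimate of one token-generation step. It is a sketch, not a benchmark: the accelerator figures (`PEAK_FLOPS`, `MEM_BW`) and the 70-billion-parameter model are hypothetical, and the model ignores the KV cache, activations, and any overlap of compute with data movement.

```python
def roofline_time(flops: float, bytes_moved: float,
                  peak_flops: float, mem_bandwidth: float) -> tuple[float, str]:
    """Return (seconds, limiting resource) under a simple roofline model."""
    compute_time = flops / peak_flops
    memory_time = bytes_moved / mem_bandwidth
    if memory_time >= compute_time:
        return memory_time, "memory"
    return compute_time, "compute"


# Hypothetical accelerator, chosen only for illustration.
PEAK_FLOPS = 1e15   # floating-point operations per second
MEM_BW = 3e12       # bytes per second from HBM

# Hypothetical dense model with 16-bit weights.
PARAMS = 70e9
BYTES_PER_WEIGHT = 2


def decode_step(batch_size: int) -> tuple[float, str]:
    """Estimate one decode step: every weight is read once per step,
    and each sequence in the batch costs about 2 FLOPs per weight."""
    flops = 2 * PARAMS * batch_size
    bytes_moved = PARAMS * BYTES_PER_WEIGHT
    return roofline_time(flops, bytes_moved, PEAK_FLOPS, MEM_BW)


for batch in (1, 64, 1024):
    seconds, limit = decode_step(batch)
    print(f"batch={batch:5d}  {seconds * 1e3:8.2f} ms  limited by {limit}")
```

Under these assumptions the step is limited by memory bandwidth at small batch sizes and only becomes compute-limited once the batch is large enough to amortize each weight read over many sequences.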

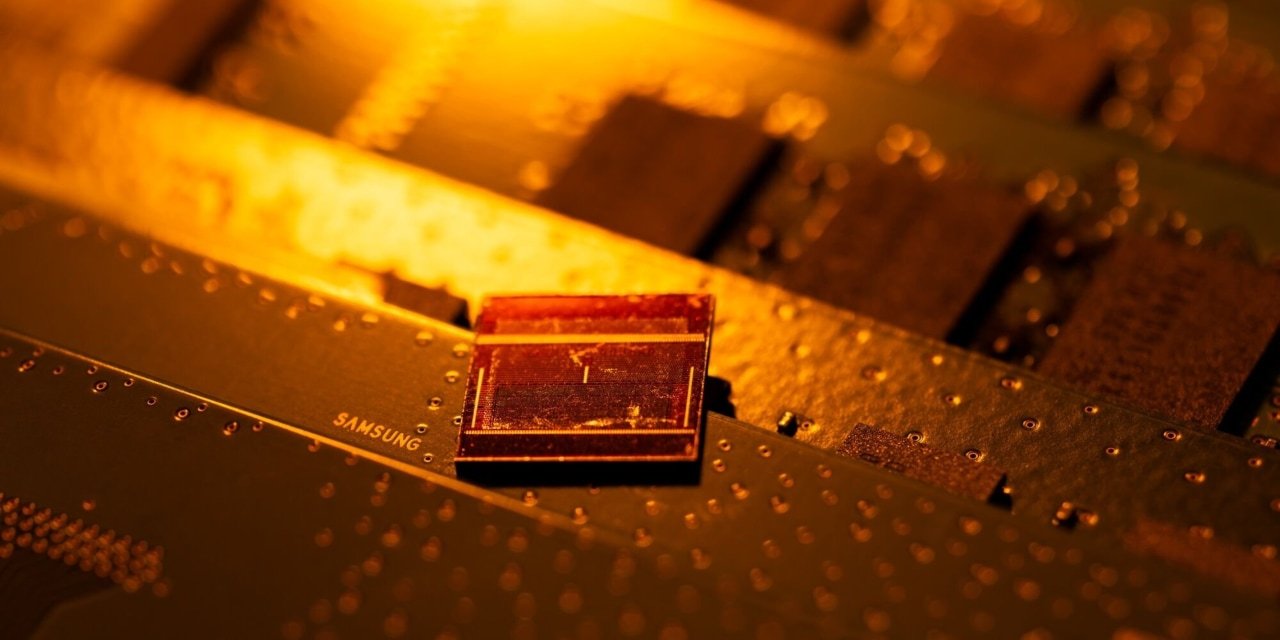

This creates a specific demand profile: AI data centers need the fastest, most power-efficient memory available. HBM3E and the upcoming HBM4, which stack memory dies vertically to achieve very high bandwidth, are particularly sought after. These chips rely on advanced packaging techniques such as through-silicon vias (TSVs) and are produced by a small number of suppliers, primarily SK Hynix, Micron, and Samsung.
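The bandwidth advantage of stacked memory comes largely from interface width. The arithmetic can be sketched as peak bandwidth = interface width × per-pin data rate ÷ 8. The parameters below are illustrative assumptions (a 1024-bit stacked interface and a 64-bit conventional channel), not vendor specifications, and the function name is hypothetical.

```python
def peak_bandwidth_gb_s(interface_bits: int, pin_rate_gbit_s: float) -> float:
    """Peak bandwidth in GB/s (decimal) for one memory interface.

    interface_bits:   number of data pins on the interface
    pin_rate_gbit_s:  data rate per pin, in gigabits per second
    """
    return interface_bits * pin_rate_gbit_s / 8


# Illustrative values only: a wide stacked interface versus a narrow channel.
stacked = peak_bandwidth_gb_s(interface_bits=1024, pin_rate_gbit_s=8.0)
channel = peak_bandwidth_gb_s(interface_bits=64, pin_rate_gbit_s=6.4)

print(f"stacked interface: {stacked:.1f} GB/s")
print(f"narrow channel:    {channel:.1f} GB/s")
print(f"ratio:             {stacked / channel:.1f}x")
```

Even at similar per-pin speeds, the wide interface dominates, which is why stacking and TSVs matter more than raw signaling rate in this comparison.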

Supply Constraints and Manufacturing Realities

The semiconductor industry operates on long lead times. Building a new fabrication plant (fab) costs billions of dollars and takes 3-4 years from groundbreaking to production. Even expanding existing facilities requires significant capital and time. TrendForce's projection that little new capacity will come online until 2027 reflects these physical constraints.

Micron's recent groundbreaking in New York for what it claims will be the largest semiconductor facility in the United States is a case in point. Announced in 2022, the facility won't begin production until later this decade. Similarly, SK Hynix and Samsung are investing heavily in new capacity, but these investments won't alleviate the 2026 shortage.

The problem is compounded by the concentration of advanced manufacturing. While memory chips are produced in multiple countries, the most advanced packaging and assembly operations are concentrated in Taiwan and South Korea. Geopolitical tensions and supply chain vulnerabilities add another layer of risk to the forecast.

Market Implications

The immediate consequence of this supply-demand imbalance is price inflation. High-end memory chips already command premium prices—HBM3E modules can cost 3-5 times more than conventional DDR5 memory. As data centers compete for limited supply, prices will rise further, affecting:

AI Company Margins: Companies like OpenAI, Anthropic, and Google will face higher operational costs. While these costs might be absorbed initially, sustained price increases will pressure profitability and could slow the pace of model scaling.

Consumer Electronics: The shortage will ripple through the broader electronics market. Smartphones, laptops, and gaming consoles all use memory chips, though typically less advanced variants. As memory makers shift wafer capacity toward HBM and server-grade DRAM, supply of mainstream parts tightens, and prices are likely to rise across the board.

Enterprise Hardware: Server manufacturers like Dell, HPE, and Lenovo will see increased costs for AI-optimized servers, potentially slowing enterprise AI adoption.

The Broader Pattern: AI's Hardware Hunger

This memory shortage is part of a larger pattern where AI workloads are consuming an ever-increasing share of semiconductor production capacity. Nvidia's GPUs, TSMC's advanced packaging capacity, and now memory chips are all facing similar supply constraints.

The trend reveals a fundamental shift in computing economics. Traditional cloud computing was largely compute-bound, with memory and storage as secondary considerations. AI workloads invert this relationship—memory bandwidth and capacity become the primary constraints, while compute is relatively abundant.

This has implications for system architecture. We're seeing the emergence of new memory-centric designs, such as:

- Memory-Centric Computing: Architectures that place memory closer to compute units to reduce data movement

- In-Memory Computing: Processing data directly in memory arrays to avoid costly data transfers

- Advanced Packaging: 3D stacking and chiplet designs that integrate memory and compute in novel ways

What Comes Next

The 2026-2027 timeline creates a critical window for the industry. Companies have several strategies to navigate the shortage:

Short-term (2024-2026):

- Memory Optimization: More aggressive use of techniques like quantization, sparsity, and model compression to reduce memory requirements

- Architectural Innovation: Shifting from dense models to mixture-of-experts architectures that activate fewer parameters per inference

- Supply Chain Diversification: Securing long-term supply agreements with memory manufacturers

- Alternative Technologies: Exploring emerging memory technologies like MRAM or ReRAM for specific use cases
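As an illustration of the memory optimization item above, here is a minimal sketch of symmetric per-tensor int8 quantization in plain Python. It is one simple scheme among many, not the method any particular framework uses; the function names and the sample weights are hypothetical.

```python
def quantize_int8(values: list[float]) -> tuple[list[int], float]:
    """Map floats to integers in [-127, 127] using a single shared scale."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale


def dequantize(quantized: list[int], scale: float) -> list[float]:
    """Recover approximate floats from the integers and the shared scale."""
    return [q * scale for q in quantized]


weights = [0.5, -1.27, 0.0, 1.0, 0.333]   # made-up example values
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)

# Rounding error per value is bounded by half a quantization step.
max_error = max(abs(w - r) for w, r in zip(weights, restored))
print(q, scale, max_error)

# Storage: 4 bytes per float32 weight versus 1 byte per int8 weight.
print(f"float32: {len(weights) * 4} bytes, int8: {len(weights)} bytes + one scale")
```

The trade-off is visible directly: storage drops by roughly 4x relative to 32-bit floats, at the cost of a bounded rounding error on each weight.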

Long-term (2027+):

- New Manufacturing Capacity: The fabs currently under construction will begin production, gradually easing supply constraints

- Process Innovation: Next-generation memory technologies like HBM4 and beyond will enter production

- Geographic Diversification: New facilities in the US, Europe, and Southeast Asia will reduce concentration risk

Practical Considerations for AI Practitioners

For teams building and deploying AI systems, this shortage has immediate implications:

Memory Budgeting: Models must be designed with memory constraints in mind from the start. The era of simply scaling up model size without considering memory costs is ending.

Inference Optimization: Techniques like model pruning, quantization, and knowledge distillation become essential, not optional.

Hardware Selection: Choosing the right memory configuration for specific workloads becomes a critical optimization problem.

Cloud Cost Management: Cloud providers will likely pass memory cost increases to customers, making efficient memory usage directly tied to operational expenses.
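A rough memory budget of the kind described above can be sketched in a few lines. The estimate covers only model weights and the attention key/value cache; the model shape, the 80 GiB device capacity, and all names here are hypothetical, and a real deployment would also need room for activations and runtime overhead.

```python
from dataclasses import dataclass

GIB = 1024 ** 3


@dataclass
class ModelConfig:
    num_params: float    # total parameter count
    num_layers: int
    num_kv_heads: int    # key/value heads per layer
    head_dim: int


def weight_bytes(cfg: ModelConfig, bits_per_weight: int) -> float:
    """Bytes needed to hold all weights at the given precision."""
    return cfg.num_params * bits_per_weight / 8


def kv_cache_bytes(cfg: ModelConfig, seq_len: int, batch_size: int,
                   bytes_per_elem: int = 2) -> int:
    """Bytes for cached keys and values (the leading 2) across all layers."""
    return (2 * cfg.num_layers * cfg.num_kv_heads * cfg.head_dim
            * seq_len * batch_size * bytes_per_elem)


def fits(cfg: ModelConfig, bits_per_weight: int, seq_len: int,
         batch_size: int, device_bytes: int) -> bool:
    """True if weights plus KV cache fit in the given device memory."""
    total = (weight_bytes(cfg, bits_per_weight)
             + kv_cache_bytes(cfg, seq_len, batch_size))
    return total <= device_bytes


# Hypothetical model and device, for illustration only.
cfg = ModelConfig(num_params=70e9, num_layers=80, num_kv_heads=8, head_dim=128)
device = 80 * GIB

for bits in (16, 8, 4):
    ok = fits(cfg, bits, seq_len=8192, batch_size=16, device_bytes=device)
    print(f"{bits:2d}-bit weights: fits={ok}")
```

Running the numbers before choosing hardware shows which lever matters for a given workload: weight precision, context length, or batch size.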

The Bigger Picture

This memory shortage highlights a fundamental tension in the AI industry: the drive for ever-larger models versus the physical and economic constraints of hardware production. While model scaling has driven impressive capability improvements, the hardware supply chain is now imposing hard limits.

The industry's response will likely accelerate several trends:

- Specialization: More domain-specific models that require less memory

- Efficiency Focus: Renewed emphasis on algorithmic efficiency over brute-force scaling

- Vertical Integration: AI companies may invest directly in semiconductor production or secure exclusive supply agreements

- Alternative Architectures: Exploration of neuromorphic computing, optical computing, and other paradigms that might circumvent memory bottlenecks

Conclusion

The forecast that data centers will consume more than 70% of high-end memory chips in 2026 isn't just a supply chain statistic; it's a marker of the AI industry's maturation. The early days of AI development were characterized by rapid scaling enabled by readily available hardware. Now, the industry must confront the physical realities of semiconductor manufacturing.

This constraint, while challenging, may ultimately lead to more sustainable and efficient AI development. The memory shortage forces a focus on optimization, specialization, and architectural innovation—areas that have been somewhat neglected in the race for scale.

For practitioners, the message is clear: memory efficiency is no longer a secondary concern. It's a primary design constraint that will shape the next generation of AI systems. The companies and researchers who adapt most effectively to this new reality will be best positioned to thrive in the constrained environment of 2026 and beyond.

The semiconductor industry's long development cycles mean that relief is years away. Until then, the AI community must innovate within constraints, turning a supply limitation into an opportunity for architectural breakthroughs.

Sources and Further Reading:

- TrendForce Market Analysis

- Micron New York Fab Announcement

- HBM Technology Overview

- Semiconductor Manufacturing Lead Times

- AI Hardware Efficiency Research (academic papers on memory optimization techniques)
