ElevenLabs has released an album featuring original tracks created in collaboration with artists like Liza Minnelli and Art Garfunkel using its Eleven Music AI model, signaling AI's deepening foray into music composition and production.

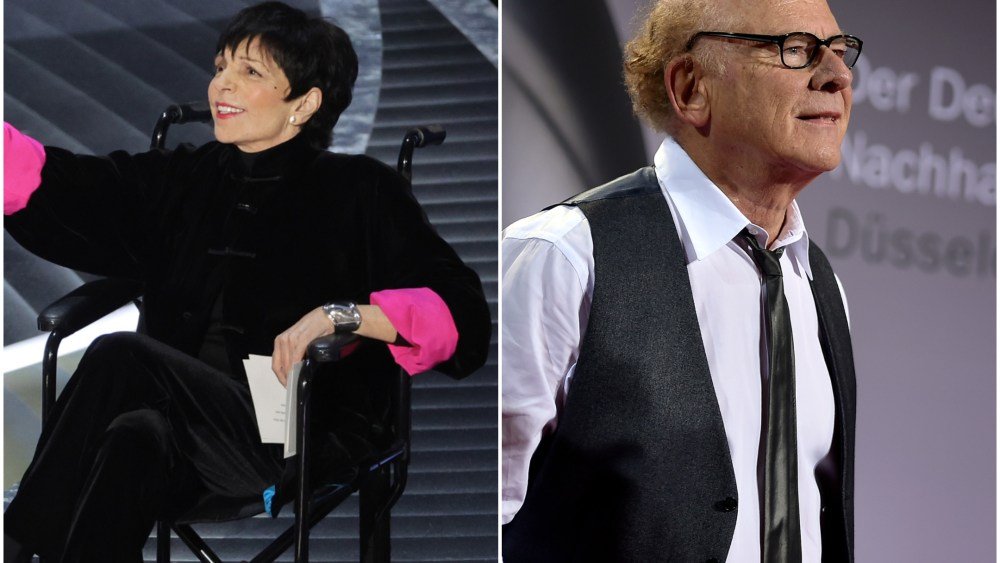

The release of ElevenLabs' Eleven Album, featuring original tracks created alongside artists including Liza Minnelli and Art Garfunkel, represents a strategic expansion beyond the company's established voice synthesis technology into full music production. The project positions Eleven Music, ElevenLabs' generative audio model, as a creative collaborator capable of contributing melodic structures, harmonic arrangements, and rhythmic patterns based on artist inputs. According to ElevenLabs, musicians provided thematic concepts and stylistic direction, which the model turned into compositional frameworks that were then refined through iterative human feedback. The resulting tracks span genres from orchestral ballads to contemporary pop and are marketed not as AI replacements but as "co-created" works.

Industry reactions are polarized on AI's role in artistry. Proponents highlight efficiency gains: composers can rapidly prototype ideas while preserving distinct creative voices, with Minnelli describing the process as "working with an endlessly adaptable digital arranger." Independent musicians report experimenting with similar tools to bypass traditional production costs. Critics, by contrast, argue that such collaborations blur authorship boundaries, with the Future of Music Coalition noting that contracts for them rarely clarify royalty splits or IP ownership when AI generates foundational elements. Ethical concerns also persist about artists lending legitimacy to systems that could eventually reduce demand for human composers.

Technically, Eleven Music appears to build on ElevenLabs' voice synthesis architecture while extending it to polyphonic generation. Unlike earlier tools such as OpenAI's Jukebox, Eleven Music is said by ElevenLabs to turn abstract descriptors ("a rainy-day jazz riff with melancholic undertones") into coherent arrangements. Early developer documentation suggests it uses diffusion models trained on licensed music libraries, though the specifics of the training data remain undisclosed. Sound engineers testing the tool note occasional harmonic dissonance in complex compositions, which points to current limits in how well the model tracks musical context.

This release arrives amid tightening regulatory scrutiny. The Human Artistry Campaign, backed by major music unions, recently petitioned the U.S. Copyright Office to deny protection for AI-generated works lacking "meaningful human creative control." ElevenLabs counters that its model functions as an instrument, comparable to synthesizers in the 1980s, with artists directing all meaningful decisions. Yet the debate is intensifying as streaming platforms like Spotify face pressure to label AI-assisted works, a move that could affect discoverability.

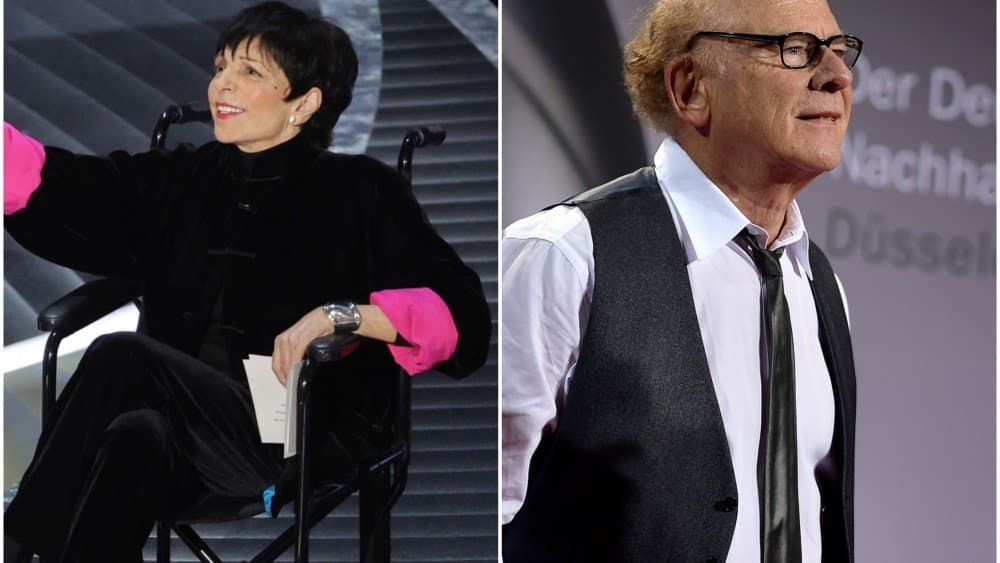

Art Garfunkel's participation in the project underscores a generational divide: while some legacy artists view AI as a novel collaborator, younger creators increasingly resist tools trained on unlicensed datasets. With ElevenLabs planning API access for developers later this year, the industry faces critical questions about attribution norms and about whether human-AI collaboration should remain a niche experiment or become a mainstream production pipeline.
