Intel engineers have developed a Linux kernel patch that improves NVMe storage performance by up to 15% through CPU cluster-aware IRQ affinity handling, particularly benefiting high-core-count systems.

CPU Clustering Meets NVMe Storage: Solving the IRQ Bottleneck

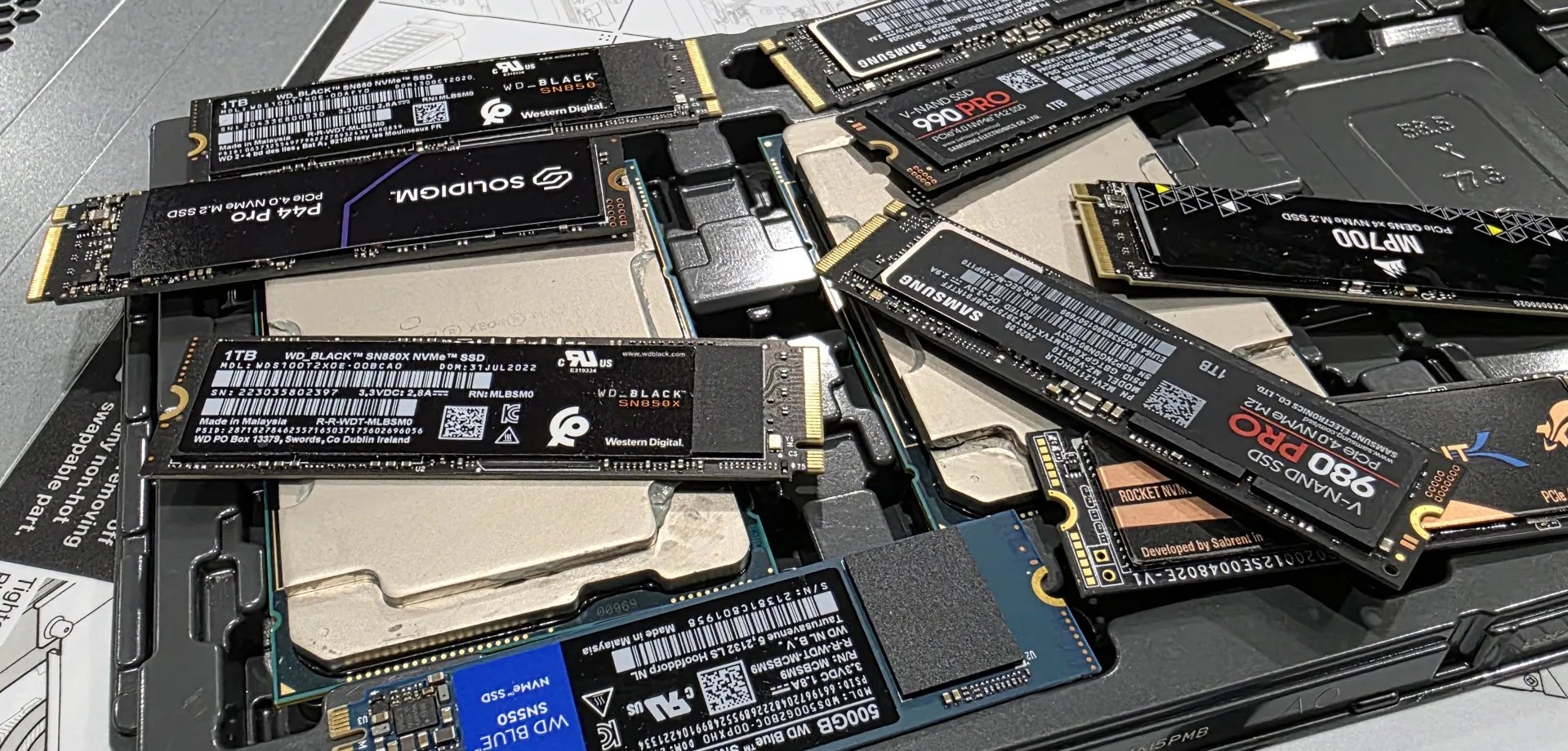

Intel Xeon processors with NVMe SSDs – the test platform for the new kernel patch

Linux systems running on modern high-core-count processors often face storage bottlenecks that defy conventional tuning. Intel engineers have addressed one such limitation with a kernel patch that optimizes interrupt request (IRQ) distribution for NVMe storage devices. The core innovation lies in making the kernel's CPU grouping logic cluster-aware within each NUMA domain.

Here's the technical breakdown: when an NVMe device raises an interrupt, the Linux kernel delivers it to CPU cores according to that IRQ's affinity mask. On systems where the number of NVMe IRQs is smaller than the total CPU count (common on high-core-count machines, where a controller exposes fewer interrupt vectors than there are CPUs), multiple cores must share each IRQ. Performance suffers significantly when the cores in an IRQ's affinity mask sit in a different CPU cluster than the cores submitting the storage I/O: completions then have to cross cluster boundaries, where cache and memory access latency is higher.
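
To check whether a given machine is in this IRQ-sharing situation, one can count the NVMe I/O queue interrupts listed in /proc/interrupts per controller and compare that count with the number of CPUs. The sketch below assumes the nvme driver's usual `nvme<ctrl>q<queue>` interrupt naming, with `q0` being the admin queue; the function name `nvme_irq_sharing` is hypothetical and not part of any existing tool.

```python
import re
from collections import Counter

# Assumed naming: the nvme driver labels queue interrupts "nvme<ctrl>q<queue>";
# q0 is the admin queue, q1 and up are I/O queues.
NVME_IO_QUEUE = re.compile(r"^(nvme\d+)q([1-9]\d*)$")


def nvme_irq_sharing(proc_interrupts: str) -> dict[str, tuple[int, int, bool]]:
    """Map each NVMe controller to (I/O IRQs, CPUs, whether CPUs must share IRQs)."""
    lines = proc_interrupts.splitlines()
    # The header row has one "CPUn" column per online CPU.
    num_cpus = sum(1 for tok in lines[0].split() if tok.startswith("CPU"))
    io_irqs: Counter[str] = Counter()
    for line in lines[1:]:
        fields = line.split()
        if fields:
            # The interrupt's name is the last column of each row.
            match = NVME_IO_QUEUE.match(fields[-1])
            if match:
                io_irqs[match.group(1)] += 1
    # Fewer IRQs than CPUs means at least one IRQ serves several CPUs.
    return {ctrl: (n, num_cpus, n < num_cpus) for ctrl, n in io_irqs.items()}


if __name__ == "__main__":
    try:
        with open("/proc/interrupts") as f:
            report = nvme_irq_sharing(f.read())
    except FileNotFoundError:
        report = {}  # not running on Linux
    for ctrl, (irqs, cpus, shared) in sorted(report.items()):
        print(f"{ctrl}: {irqs} I/O IRQs for {cpus} CPUs, shared={shared}")
```

A controller reported with `shared=True` is one where the kernel's CPU grouping decides which cores end up behind each interrupt, which is exactly the logic the patch changes.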

Benchmark Results: Quantifying the Gain

On an Intel Xeon E-series server platform, the patch delivered a consistent 15% improvement in random read performance, measured with the FIO benchmark using the libaio engine. The test used 4K random reads across multiple NVMe drives, a common homelab and datacenter workload. Intel hasn't published a full test matrix, but the improvement specifically targets:

- Systems with CPU cluster architectures (like Intel's Core/Atom hybrid designs)

- NUMA-enabled multi-socket configurations

- Storage-heavy workloads where IRQ overhead dominates latency
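
Whether a machine exposes a cluster level at all can be checked in sysfs: recent kernels export a `topology/cluster_cpus_list` file for each CPU under `/sys/devices/system/cpu`. The helper below is a hypothetical sketch that groups CPUs by that file. On platforms without shared clusters, each reported cluster typically contains only a single core (plus its SMT sibling), so larger groups suggest the cluster-aware patch is relevant; an empty result usually means the kernel is too old to export the file.

```python
import glob
import os


def parse_cpulist(text: str) -> list[int]:
    """Expand a kernel cpulist string such as "0-3,8,10-11" into CPU numbers."""
    cpus: list[int] = []
    for part in text.strip().split(","):
        if not part:
            continue
        if "-" in part:
            lo, hi = part.split("-")
            cpus.extend(range(int(lo), int(hi) + 1))
        else:
            cpus.append(int(part))
    return cpus


def cpu_clusters(cpu_root: str = "/sys/devices/system/cpu") -> list[tuple[int, ...]]:
    """Return the distinct clusters listed in each cpuN/topology/cluster_cpus_list."""
    # "cpu[0-9]*" matches cpu0, cpu12, ... but not cpufreq or cpuidle.
    pattern = os.path.join(cpu_root, "cpu[0-9]*", "topology", "cluster_cpus_list")
    clusters = set()
    for path in glob.glob(pattern):
        with open(path) as f:
            clusters.add(tuple(parse_cpulist(f.read())))
    # Every CPU in a cluster reports the same list, so the set deduplicates them.
    return sorted(clusters)


if __name__ == "__main__":
    for cluster in cpu_clusters():
        print(f"cluster of {len(cluster)} CPUs: {list(cluster)}")
```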

Implementation and Availability

Hardware optimization remains critical for storage performance

The 271-line patch modifies lib/group_cpus.c to prioritize CPU cluster locality when assigning IRQ affinity masks. As Intel engineer Wangyang Guo explains: "Grouping CPUs by cluster within each NUMA domain ensures better locality between CPUs and their assigned NVMe IRQs, eliminating unnecessary cross-cluster communication penalties."
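
The effect of cluster-aware grouping can be illustrated with a simplified model of how the CPUs of one NUMA node are split into IRQ groups. This is not the code in lib/group_cpus.c: `naive_groups` mimics an even split over CPUs in numeric order, while `cluster_aware_groups` first gives each cluster a share of the groups (one each, then greedily wherever CPUs per group is highest) and only ever splits CPUs inside a cluster. All function names are hypothetical, and the model assumes there are at least as many groups as clusters.

```python
def split_evenly(cpus: list[int], n: int) -> list[list[int]]:
    """Split cpus into n contiguous chunks whose sizes differ by at most one."""
    q, r = divmod(len(cpus), n)
    chunks, start = [], 0
    for i in range(n):
        size = q + (1 if i < r else 0)  # the first r chunks take one extra CPU
        chunks.append(cpus[start:start + size])
        start += size
    return chunks


def naive_groups(clusters: list[list[int]], num_groups: int) -> list[list[int]]:
    """Topology-blind: split all CPUs of the node evenly, ignoring clusters."""
    cpus = [cpu for cluster in clusters for cpu in cluster]
    return split_evenly(cpus, num_groups)


def groups_per_cluster(clusters: list[list[int]], num_groups: int) -> list[int]:
    """Give each cluster one group, then add groups where CPUs-per-group is highest."""
    assert len(clusters) <= num_groups <= sum(len(c) for c in clusters)
    alloc = [1] * len(clusters)
    for _ in range(num_groups - len(clusters)):
        # Only clusters with more CPUs than groups can take another group.
        candidates = [i for i, c in enumerate(clusters) if alloc[i] < len(c)]
        best = max(candidates, key=lambda i: len(clusters[i]) / alloc[i])
        alloc[best] += 1
    return alloc


def cluster_aware_groups(clusters: list[list[int]], num_groups: int) -> list[list[int]]:
    """Cluster-aware: every group's CPUs come from a single cluster."""
    groups: list[list[int]] = []
    for cluster, n in zip(clusters, groups_per_cluster(clusters, num_groups)):
        groups.extend(split_evenly(cluster, n))
    return groups


def cross_cluster_count(groups: list[list[int]], clusters: list[list[int]]) -> int:
    """Count groups whose CPUs belong to more than one cluster."""
    cluster_of = {cpu: i for i, cluster in enumerate(clusters) for cpu in cluster}
    return sum(1 for g in groups if len({cluster_of[cpu] for cpu in g}) > 1)


if __name__ == "__main__":
    clusters = [list(range(0, 4)), list(range(4, 8)), list(range(8, 12))]
    for build in (naive_groups, cluster_aware_groups):
        groups = build(clusters, 5)
        print(build.__name__, groups, "cross-cluster:", cross_cluster_count(groups, clusters))
```

With 12 CPUs in three 4-CPU clusters and 5 IRQ groups, the naive split produces one group, [3, 4, 5], that straddles two clusters, while the cluster-aware split keeps every group inside a single cluster.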

The patch currently sits in Andrew Morton's mm-everything Git branch and targets the next kernel cycle, which will be numbered either Linux 6.20 or 7.0. Homelab builders should note these implementation details:

- Kernel Requirement: Linux 6.20 (or 7.0, depending on the final version number) or later, once the patch is merged

- Tuning Benefit: Likely largest on systems with more than 16 cores and multiple NVMe controllers, where each NVMe IRQ is shared by several CPUs

- Power Implications: Reduced cross-cluster communication may lower overall energy consumption

Build Recommendations for Storage-Optimized Systems

- CPU Selection: When building NVMe-heavy systems, prioritize processors with unified L3 cache clusters (e.g., Intel's monolithic die designs) over chiplet architectures where cache coherency penalties are higher

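- IRQ Monitoring: Use `irqbalance --debug` or `/proc/interrupts` to inspect IRQ distribution across cores before and after applying the patch. NVMe queue interrupts are kernel-managed, so their affinity is set by the kernel rather than by irqbalance

- NUMA Alignment: Physically install NVMe drives in slots connected to the same CPU socket that runs the primary storage processes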

- Kernel Testing: Once available, validate with `fio` tests comparing the `prefer` and `local` modes of fio's `numa_mem_policy` option
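
A repeatable way to run this comparison is to generate the fio invocations programmatically. The sketch below only builds and prints argument lists: the device path `/dev/nvme0n1`, queue depth, job count, and runtime are placeholder assumptions, and `numa_mem_policy` requires an fio build with libnuma support. The `--readonly` flag is included as a safeguard against accidental writes to the raw device.

```python
import shlex


def fio_randread_cmd(device: str, numa_mem_policy: str, iodepth: int = 32,
                     numjobs: int = 4, runtime_s: int = 60) -> list[str]:
    """Build an fio argv for a 4K random-read job using the libaio engine."""
    return [
        "fio",
        "--readonly",                      # refuse any write to the target device
        "--name=randread-" + numa_mem_policy.replace(":", "-"),
        f"--filename={device}",
        "--ioengine=libaio",
        "--direct=1",                      # O_DIRECT: bypass the page cache
        "--rw=randread",
        "--bs=4k",
        f"--iodepth={iodepth}",
        f"--numjobs={numjobs}",
        "--time_based",
        f"--runtime={runtime_s}",
        f"--numa_mem_policy={numa_mem_policy}",  # e.g. "prefer:0" or "local"
        "--group_reporting",
    ]


if __name__ == "__main__":
    # Hypothetical device path; print the commands instead of running them.
    for policy in ("prefer:0", "local"):
        print(shlex.join(fio_randread_cmd("/dev/nvme0n1", policy)))
```

Running the same printed commands on the unpatched and patched kernels and comparing the reported IOPS keeps everything except the kernel constant.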

This optimization shows how low-level kernel improvements continue to unlock hardware potential. As core counts increase, topology-aware IRQ placement becomes increasingly important for storage performance, especially for homelabs running database servers, VM clusters, or high-throughput storage arrays.
