A new PyTorch implementation leverages RAFT optical flow and occlusion masking to create temporally consistent DeepDream videos, solving the flickering problem that plagued previous methods.

The persistent flickering that marred early attempts at video-based DeepDream implementations may finally have a solution. A new PyTorch project combines RAFT optical flow estimation with occlusion masking to create hallucinatory videos that maintain temporal consistency across frames. This approach addresses a fundamental limitation in neural network-based image generation where traditional frame-by-frame processing creates distracting visual artifacts.

Developed as a fork of the neural-dream repository, this implementation introduces a dedicated video processing pipeline (video_dream.py) that warps hallucinated features from the previous frame onto the current frame using RAFT optical flow. RAFT (Recurrent All-Pairs Field Transforms) estimates dense, per-pixel motion between consecutive frames, which lets dream features follow the objects they were generated on. The system then applies occlusion masking so that dream features are not carried into regions where the flow is unreliable, such as background newly revealed behind a moving foreground object. Without the mask, stale features bleed across object boundaries, an artifact known as ghosting that previously disrupted temporal coherence.
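The warp-then-mask step can be illustrated with a small NumPy sketch. This is not the project's code: the function names `warp` and `occlusion_mask`, the flow layout (H, W, 2), and the forward-backward consistency test with its `alpha` and `beta` thresholds are assumptions chosen for illustration. In the real pipeline the flow fields would come from RAFT; here they are plain arrays.

```python
import numpy as np

def warp(image, flow):
    """Backward-warp `image` (H, W, C) with `flow` (H, W, 2), bilinear sampling.

    Assumed convention: flow[y, x] = (dx, dy) points from pixel (x, y) in the
    current frame to the location it came from in `image` (the previous frame).
    """
    h, w = flow.shape[:2]
    ys, xs = np.mgrid[0:h, 0:w].astype(np.float64)
    # Source coordinates, clamped to the image border.
    sx = np.clip(xs + flow[..., 0], 0, w - 1)
    sy = np.clip(ys + flow[..., 1], 0, h - 1)
    x0 = np.floor(sx).astype(int)
    y0 = np.floor(sy).astype(int)
    x1 = np.minimum(x0 + 1, w - 1)
    y1 = np.minimum(y0 + 1, h - 1)
    wx = (sx - x0)[..., None]
    wy = (sy - y0)[..., None]
    top = image[y0, x0] * (1 - wx) + image[y0, x1] * wx
    bottom = image[y1, x0] * (1 - wx) + image[y1, x1] * wx
    return top * (1 - wy) + bottom * wy

def occlusion_mask(flow_bwd, flow_fwd, alpha=0.01, beta=0.5):
    """Return an (H, W) bool array that is True where the flow looks reliable.

    Forward-backward check (a common heuristic, assumed here): following the
    backward flow and then the forward flow should return to the start, so
    the two vectors should roughly cancel. Large residuals mark occlusions.
    """
    fwd_at_src = warp(flow_fwd, flow_bwd)
    residual = np.sum((flow_bwd + fwd_at_src) ** 2, axis=-1)
    magnitude = np.sum(flow_bwd ** 2, axis=-1) + np.sum(fwd_at_src ** 2, axis=-1)
    return residual <= alpha * magnitude + beta
```

With a uniform one-pixel shift, `warp` moves every column over by one, and `occlusion_mask` marks every pixel as reliable when the forward flow is the exact negative of the backward flow.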

Key technical innovations include:

- Frame-to-Frame Propagation: Each new frame is initialized with warped data from the previous dream frame rather than being processed independently

- Blend Ratio Control: Users can adjust the -blend parameter (0.0-1.0) to balance raw frame input with inherited dream features

- Occlusion Handling: Automatic masking prevents artifacts when objects overlap, maintaining object boundaries

- Progressive Preview: The -update_interval parameter enables partial renders during lengthy processing
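As a rough illustration of how propagation, blending, and occlusion handling might fit together, the sketch below builds the starting image for one frame. It is a guess at the general shape, not the project's implementation: `init_frame` is a hypothetical helper, and the direction of the blend (1.0 meaning fully inherited dream, 0.0 meaning the raw frame only) is an assumption, since the article only says the parameter balances the two.

```python
import numpy as np

def init_frame(current, warped_dream, valid, blend=0.5):
    """Compose the image that the dream step starts from for one frame.

    current      -- raw video frame, float array (H, W, C)
    warped_dream -- previous dream frame warped onto this frame, (H, W, C)
    valid        -- (H, W) bool mask, False where the flow is occluded
    blend        -- assumed: weight of the inherited dream, 0.0 to 1.0
    """
    mixed = blend * warped_dream + (1.0 - blend) * current
    # Occluded pixels fall back to the raw frame so stale dream features
    # do not ghost across object boundaries.
    return np.where(valid[..., None], mixed, current)
```

Under these assumptions, setting `blend` to 0.0 reduces to independent per-frame processing, which is the flickering baseline the project is compared against.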

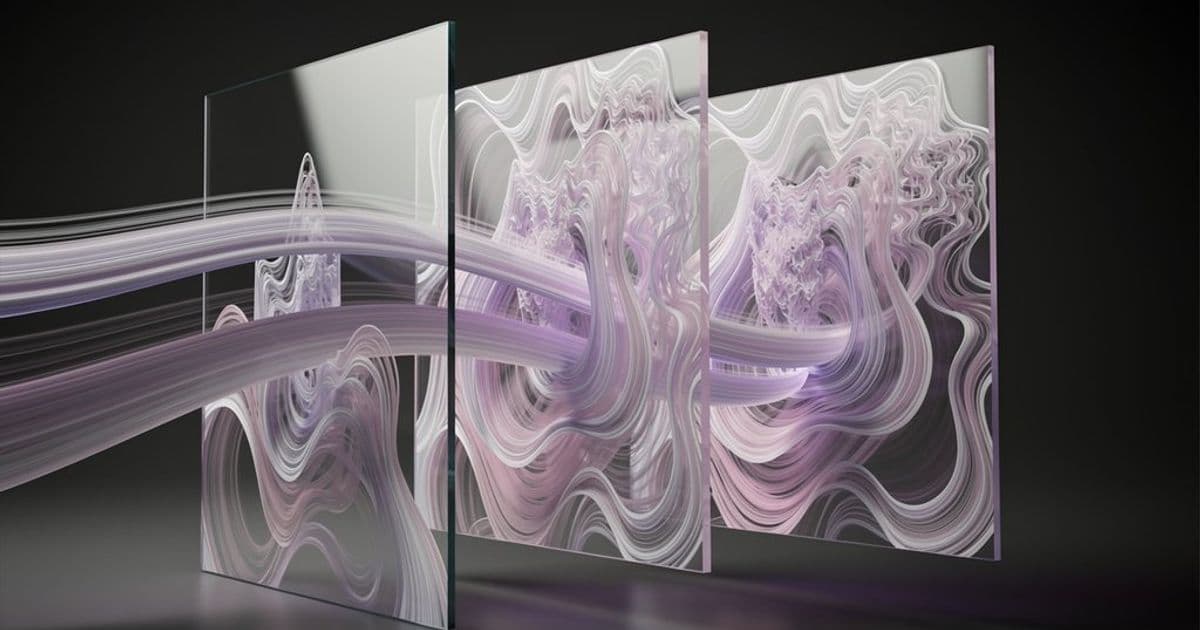

Visual comparisons demonstrate significant improvements. Sequences processed independently exhibit chaotic flickering (mallard_independent_demo.mp4, highway_independent_demo.mp4), while the temporal consistency version maintains stable hallucinations across frames (mallard_demo.mp4, highway_demo.mp4).

The implementation retains all functionality from the original neural-dream project for single-image processing while adding video-specific parameters. Temporal consistency also makes video generation more practical by reducing the iterations required per frame: because each frame starts from the warped result of the previous one rather than from scratch, the documentation recommends -num_iterations 1 for video versus 10+ for still images.

Technical trade-offs include substantial computational requirements. The pipeline runs DeepDream processing plus optical flow calculations per frame, demanding significant GPU resources. The documentation advises reducing -image_size and using -backend cudnn to manage memory constraints. Multi-GPU support exists through PyTorch's parallelization strategies, though processing times remain substantial even with hardware acceleration.

For implementation, users must first install dependencies via pip install -r requirements.txt and download models using the provided scripts. The project supports various neural network architectures beyond the default Inception model, accessible through the -model_file parameter.

This approach represents a meaningful advancement in neural media generation, offering artists and researchers practical tools for creating temporally stable video hallucinations. While computational demands limit real-time applications, the occlusion-aware optical flow integration solves a fundamental technical challenge that has hindered video-based DeepDream applications since their inception.
