Cybersecurity researchers have uncovered VoidLink, a sophisticated Linux malware framework built by a single developer using AI tools, marking a shift in how offensive capabilities are produced.

The discovery of VoidLink malware has sent shockwaves through the cybersecurity community, not just for its advanced capabilities targeting cloud environments, but for its unprecedented origin: artificial intelligence. Check Point Research's latest analysis reveals this Linux malware framework shows "clear evidence" of being predominantly AI-generated, representing the first documented case of an advanced threat created through AI-driven development.

The AI Malware Blueprint Exposed

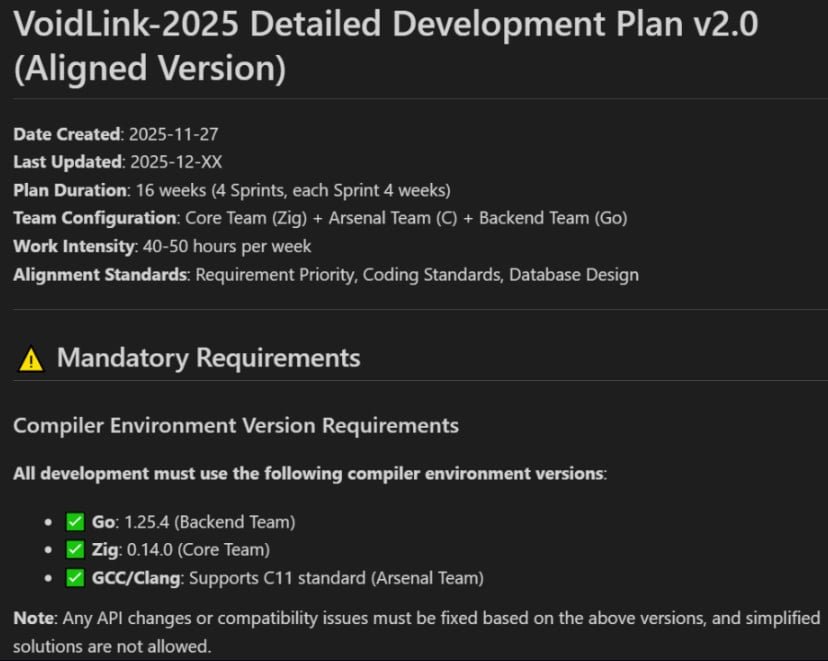

VoidLink's developer made critical operational security mistakes that gave researchers extraordinary visibility into its creation process. An exposed open directory on the attacker's server revealed source code, documentation, sprint plans, and internal project structures. According to Eli Smadja, Check Point Research Group Manager, "This leakage gave us unusually direct visibility into the project’s earliest directives."

The forensic trail shows development began in late November 2025 when the developer used TRAE SOLO, an AI assistant embedded within the TRAE integrated development environment (IDE). Researchers recovered key portions of the original guidance provided to the AI model, revealing a spec-driven development approach where the attacker defined project goals and constraints before having the AI generate:

- Comprehensive multi-team development plans

- Architectural blueprints

- Sprint timelines

- Coding standards

From Concept to Weaponized Code in Record Time

Although the AI-generated documentation outlined a 16-to-30-week development timeline involving three teams, forensic evidence shows VoidLink became functional within a single week. By early December 2025, it had grown to 88,000 lines of code and offered:

- Custom loaders and implants

- Rootkit modules for evasion

- Dozens of functional plugins

- Cloud-specific attack vectors

Check Point researchers verified the AI origin by reproducing the workflow, confirming the AI agent generated code structurally identical to VoidLink's architecture. The malware's sophistication suggests the developer had "strong proficiency across multiple programming languages," but leveraged AI to achieve output previously requiring well-resourced teams.

Implications for Cloud Defense Strategies

This development signals a fundamental shift in the economics of threat creation. A single developer with AI tools can now produce malware that rivals the output of well-resourced teams, a level of capability often associated with nation-state actors. For security teams, this demands:

- Enhanced Cloud Monitoring: Implement behavioral analysis that goes beyond signature-based detection for cloud workloads. Tools like Falco can detect anomalous container activity.

- Access Control Reinforcement: Enforce strict least-privilege policies using AWS IAM or Azure RBAC, especially for Linux instances.

- AI-Powered Defense Parity: Deploy AI-driven security solutions like Darktrace that can adapt to novel attack patterns faster than rules-based systems.

- Development Artifact Scanning: Monitor for leaked credentials and development artifacts in cloud storage using tools like CloudSploit.

- Threat Hunting Focus: Proactively search for indicators specific to AI-generated code patterns, such as unusually consistent coding styles across complex modules.
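To make the least-privilege recommendation above more concrete, here is a minimal Python sketch, not taken from the Check Point report, that flags overly broad `Allow` statements in an AWS IAM policy document. It assumes the policy is already available as JSON in the standard IAM policy format (`Statement`, `Effect`, `Action`, `Resource`). The function name `find_overbroad_statements`, the sample policy, and its `Sid` values are hypothetical and chosen only for illustration. It is a rough heuristic, not a substitute for a full policy analyzer.

```python
from typing import Any


def _as_list(value: Any) -> list:
    """IAM allows a single string or a list for Action/Resource; normalize to a list."""
    if value is None:
        return []
    return value if isinstance(value, list) else [value]


def find_overbroad_statements(policy: dict) -> list[dict]:
    """Return Allow statements whose actions are '*' or 'service:*' (heuristic only)."""
    findings = []
    for index, stmt in enumerate(_as_list(policy.get("Statement"))):
        if stmt.get("Effect") != "Allow":
            continue  # Deny statements do not grant access
        actions = _as_list(stmt.get("Action"))
        resources = _as_list(stmt.get("Resource"))
        wildcard_actions = [a for a in actions if a == "*" or a.endswith(":*")]
        if wildcard_actions:
            findings.append(
                {
                    "index": index,
                    "sid": stmt.get("Sid"),
                    "wildcard_actions": wildcard_actions,
                    "wildcard_resource": "*" in resources,
                }
            )
    return findings


# Hypothetical example policy, for illustration only.
EXAMPLE_POLICY = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Sid": "ReadLogs",
            "Effect": "Allow",
            "Action": ["logs:GetLogEvents"],
            "Resource": "arn:aws:logs:*:*:log-group:/app/*",
        },
        {
            "Sid": "TooBroad",
            "Effect": "Allow",
            "Action": "s3:*",
            "Resource": "*",
        },
    ],
}

if __name__ == "__main__":
    for finding in find_overbroad_statements(EXAMPLE_POLICY):
        print(finding)
```

A check like this could run in a CI pipeline against policy files before they are deployed. Statements that combine wildcard actions with a wildcard resource deserve the closest review, since they grant the broadest access to any workload that assumes the role.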

"VoidLink marks a new era," warns Check Point's report. As AI development tools become more accessible, defenders must assume attackers will increasingly use them to bypass traditional security models. The era of AI-generated malware isn't coming; it's already here, and cloud environments are its first battlefield.
