How proper indexing, incremental updates, and time-window queries enabled a real-time event tracking system to scale to millions of records without performance degradation.

When I started building a real-time event tracking system a few months ago, I never expected it to grow to 900,000 new records per day. What began as a small experiment now holds more than 7 million events and is still growing. The biggest surprise wasn't the volume of data. It was how much early architectural decisions determined whether the system would handle growth gracefully or struggle under its own weight.

The PostgreSQL Foundation

I chose PostgreSQL as the primary database for its proven reliability and performance at scale. The decision paid off, but only with careful attention to implementation details. Early on, I found that proper indexing made the single biggest difference in query performance. Queries that returned instantly against thousands of rows slowed noticeably against millions, unless the right columns were indexed.

The indexing strategy focused on the columns that appear most often in WHERE clauses and JOIN conditions. For time-series data like event tracking, that meant indexing the timestamp columns, along with any categorical fields used for filtering. The difference was dramatic: well-indexed queries kept sub-second response times even as the dataset grew into the millions.
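As an illustration of the filter-plus-timestamp pattern, here is a minimal sketch. It assumes a hypothetical `events` table with an `event_type` filter column and an integer `ts` timestamp. It uses Python's built-in SQLite as a runnable stand-in for PostgreSQL. The `CREATE INDEX` statement is also valid PostgreSQL, although the planners and their EXPLAIN output differ.

```python
import sqlite3

# In-memory SQLite used as a stand-in for PostgreSQL (assumption for illustration).
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE events ("
    " id INTEGER PRIMARY KEY,"
    " event_type TEXT NOT NULL,"
    " ts INTEGER NOT NULL)"  # hypothetical column: Unix timestamp in seconds
)

# Composite index: the equality-filter column first, the range column (timestamp) second.
conn.execute("CREATE INDEX idx_events_type_ts ON events (event_type, ts)")

# Synthetic sample data: alternating event types, one event per second.
conn.executemany(
    "INSERT INTO events (event_type, ts) VALUES (?, ?)",
    [("click" if i % 2 else "view", 1_700_000_000 + i) for i in range(10_000)],
)

query = "SELECT count(*) FROM events WHERE event_type = ? AND ts >= ?"
params = ("click", 1_700_005_000)

# The last column of each EXPLAIN QUERY PLAN row is a human-readable detail string.
plan = [row[-1] for row in conn.execute("EXPLAIN QUERY PLAN " + query, params)]
click_count = conn.execute(query, params).fetchone()[0]

print(plan)         # the detail mentions idx_events_type_ts, i.e. an index search, not a full scan
print(click_count)  # 2500
```

Putting the equality column before the range column lets a single index range scan cover both conditions.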

Avoiding the Recalculation Trap

One of the most important architectural decisions was avoiding full historical recalculations. Many systems fall into the trap of repeatedly scanning the entire dataset to generate metrics. That works fine on small datasets. However, each recalculation costs time proportional to the total number of rows, and it is repeated on every refresh, so it gets steadily slower as data accumulates.

Instead, I implemented incremental updates. As new events arrive, the system updates the affected metrics in real time rather than recalculating everything from scratch. The work done per event therefore stays the same regardless of dataset size. The trade-off is slightly more complex code, but the performance benefits are worth it.
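The core idea fits in a few lines. The sketch below is a minimal, in-memory version of a running metric. The `RunningMetrics` class and its fields are hypothetical and are not the system's actual code. Each event adjusts the totals in constant time instead of triggering a rescan of history.

```python
from collections import defaultdict
from dataclasses import dataclass, field


@dataclass
class RunningMetrics:
    """Hypothetical metrics object: updated once per event, never recomputed from scratch."""
    total: int = 0
    per_type: dict = field(default_factory=lambda: defaultdict(int))
    value_sum: float = 0.0

    def apply(self, event_type: str, value: float) -> None:
        # Constant work per event, no matter how many events came before.
        self.total += 1
        self.per_type[event_type] += 1
        self.value_sum += value

    @property
    def mean_value(self) -> float:
        # Derived metric computed from the running sums, not from raw history.
        return self.value_sum / self.total if self.total else 0.0


metrics = RunningMetrics()
for event_type, value in [("click", 2.0), ("view", 1.0), ("click", 3.0)]:
    metrics.apply(event_type, value)

print(metrics.total, dict(metrics.per_type), metrics.mean_value)
# 3 {'click': 2, 'view': 1} 2.0
```

In PostgreSQL, the same pattern is commonly expressed as an upsert on a counters table (`INSERT ... ON CONFLICT ... DO UPDATE`), so the running totals survive restarts.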

Time Window Optimization

Another key optimization was limiting queries to rolling time windows whenever possible. Rather than querying all 7 million+ events to generate a report, the system typically queries only the most recent day, week, or month of data. This dramatically reduces database load and keeps response times fast.
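To show why a rolling window stays cheap, the sketch below finds the window boundaries in an already-sorted list of timestamps with binary search. This is an analogy to what a B-tree index on the timestamp column allows: jumping straight to the window start instead of scanning everything. The hourly sample data and the `events_in_window` helper are assumptions for illustration.

```python
import bisect
from datetime import datetime, timedelta, timezone


def events_in_window(sorted_ts, now, window):
    """Return the timestamps in [now - window, now].

    Assumes sorted_ts is sorted ascending, much as an index keeps the
    timestamp column ordered, so only O(log n) comparisons locate the window.
    """
    start = bisect.bisect_left(sorted_ts, now - window)
    end = bisect.bisect_right(sorted_ts, now)
    return sorted_ts[start:end]


base = datetime(2024, 1, 1, tzinfo=timezone.utc)
timestamps = [base + timedelta(hours=h) for h in range(24 * 30)]  # 30 days of hourly events
now = base + timedelta(days=30)

last_day = events_in_window(timestamps, now, timedelta(days=1))
last_week = events_in_window(timestamps, now, timedelta(days=7))
print(len(last_day), len(last_week))  # 24 168
```

Only the rows inside the window are touched, so a "last day" query costs about the same whether the table holds seven thousand or seven million older events.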

For historical analysis spanning longer periods, the system pre-aggregates data at different time granularities. Daily aggregates are stored separately from raw events, allowing quick access to historical trends without scanning millions of individual records.
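A minimal sketch of the daily rollup follows. It assumes raw events are `(timestamp, event_type)` pairs and that the aggregates are stored as per-day counts. The `rollup_daily` helper is hypothetical. In PostgreSQL, the equivalent would typically be a `GROUP BY date_trunc('day', ts), event_type` query whose results are written to a separate aggregate table.

```python
from collections import Counter
from datetime import datetime, timezone


def rollup_daily(events):
    """Collapse raw (timestamp, event_type) pairs into per-day counts.

    Returns a Counter keyed by (date, event_type), the shape of row a
    hypothetical daily aggregates table would hold.
    """
    return Counter((ts.date(), event_type) for ts, event_type in events)


raw = [
    (datetime(2024, 3, 1, 9, tzinfo=timezone.utc), "click"),
    (datetime(2024, 3, 1, 17, tzinfo=timezone.utc), "click"),
    (datetime(2024, 3, 1, 18, tzinfo=timezone.utc), "view"),
    (datetime(2024, 3, 2, 8, tzinfo=timezone.utc), "click"),
]
daily = rollup_daily(raw)

# Trend queries read the small aggregate instead of the raw events.
clicks_by_day = sorted((day, n) for (day, kind), n in daily.items() if kind == "click")
print(clicks_by_day)
```

A year of history then costs at most 365 rows per event type to read, however many raw events sit behind those rows.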

Real-Time Processing Architecture

The system continuously ingests and processes new data in real time. This requires careful consideration of write performance alongside read performance. PostgreSQL handles concurrent reads and writes well, but proper table design and indexing are crucial.

I implemented a queue-based ingestion pipeline where events are first written to a staging table, then processed and moved to the main analytics tables. This decouples the ingestion rate from the processing rate, preventing slowdowns during traffic spikes.
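The staging-table pattern can be sketched as two functions: a fast `ingest` that only appends, and a `process_batch` that moves a bounded batch into the main table. This is a minimal sketch, not the system's actual pipeline. SQLite stands in for PostgreSQL, and the table names and batch size are hypothetical.

```python
import sqlite3

# SQLite stands in for PostgreSQL; table names are hypothetical.
conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE staging_events (id INTEGER PRIMARY KEY, event_type TEXT, ts INTEGER);
    CREATE TABLE events (id INTEGER PRIMARY KEY, event_type TEXT, ts INTEGER);
    """
)


def ingest(rows):
    """Fast path: append raw events to the staging table and return immediately."""
    with conn:  # commits on success
        conn.executemany("INSERT INTO staging_events (event_type, ts) VALUES (?, ?)", rows)


def process_batch(batch_size=100):
    """Move up to batch_size of the oldest staged rows into the main table."""
    with conn:  # the INSERT and DELETE commit together, or roll back together on error
        ids = [row[0] for row in conn.execute(
            "SELECT id FROM staging_events ORDER BY id LIMIT ?", (batch_size,))]
        if not ids:
            return 0
        placeholders = ",".join("?" * len(ids))  # e.g. "?,?,?"
        conn.execute(
            "INSERT INTO events (event_type, ts) SELECT event_type, ts FROM staging_events "
            f"WHERE id IN ({placeholders}) ORDER BY id", ids)
        conn.execute(f"DELETE FROM staging_events WHERE id IN ({placeholders})", ids)
        return len(ids)


# A traffic spike lands in staging at once; the processor drains it at its own pace.
ingest([("click", 1_700_000_000 + i) for i in range(250)])
moved = [process_batch(100) for _ in range(3)]
remaining = conn.execute("SELECT count(*) FROM staging_events").fetchone()[0]
print(moved, remaining)  # [100, 100, 50] 0
```

With several concurrent workers in PostgreSQL, the batch selection is commonly written with `SELECT ... FOR UPDATE SKIP LOCKED` so that workers do not claim the same rows.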

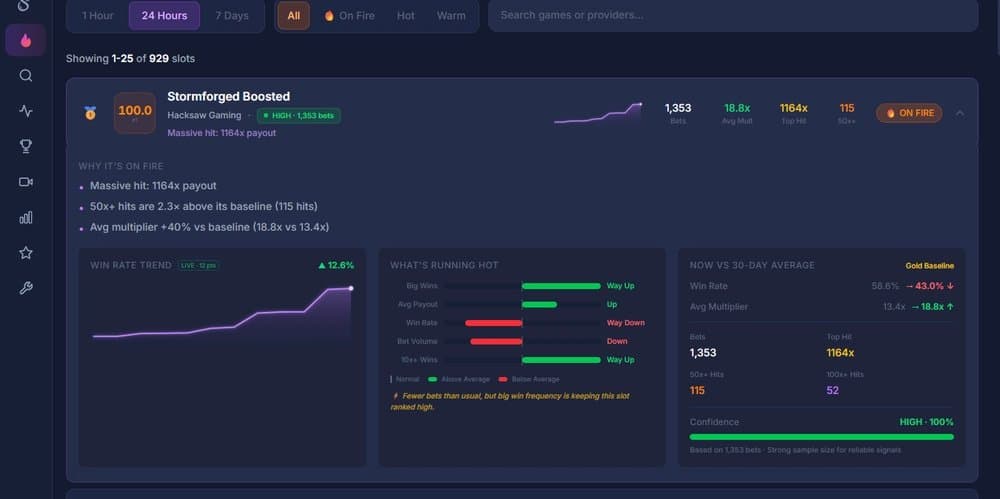

The live system is running at https://spindex.net/, continuously processing incoming events. You can see the real-time nature of the system—data appears within seconds of being generated.

Lessons in Scaling

The biggest lesson from building this system is that scaling problems usually stem from early architecture decisions, not traffic volume itself. A system designed correctly from the beginning can handle millions of records without major issues. A poorly designed system will struggle much sooner.

As the dataset continues to grow, efficiency becomes more important with every additional million records. The optimizations that seemed minor at 100,000 records become critical at 10 million. This creates a compounding effect where good early decisions pay dividends over time.

What Worked Well

- Incremental metric updates instead of full recalculations

- Heavy indexing on timestamp and filter columns

- Time-window queries to limit data scanned

- Pre-aggregation for historical data access

- Queue-based ingestion to handle traffic spikes

What I'd Do Differently

Looking back, there are a few things I'd adjust:

- Implement partitioning earlier for better query performance on very large tables

- Add more comprehensive monitoring from day one

- Consider a separate read replica for heavy analytical queries

- Use connection pooling more aggressively to handle concurrent requests
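On the partitioning point, PostgreSQL's declarative range partitioning splits one logical table into per-period child tables. Time-window queries can then skip partitions outside the window. The sketch below only generates the DDL for monthly partitions. The monthly granularity, the `events` parent table and its `ts` column are assumptions, not details of the running system.

```python
from datetime import date

# Assumed parent table, created once in PostgreSQL:
#   CREATE TABLE events (..., ts timestamptz NOT NULL) PARTITION BY RANGE (ts);


def month_bounds(year, month):
    """Return the [start, end) dates of one calendar month."""
    start = date(year, month, 1)
    end = date(year + 1, 1, 1) if month == 12 else date(year, month + 1, 1)
    return start, end


def monthly_partition_ddl(parent, year, month):
    """Build a CREATE TABLE ... PARTITION OF statement for one month (upper bound exclusive)."""
    start, end = month_bounds(year, month)
    name = f"{parent}_{year:04d}_{month:02d}"
    return (
        f"CREATE TABLE {name} PARTITION OF {parent} "
        f"FOR VALUES FROM ('{start.isoformat()}') TO ('{end.isoformat()}');"
    )


print(monthly_partition_ddl("events", 2024, 12))
```

Range partition bounds in PostgreSQL include the lower bound and exclude the upper bound, so consecutive months share a boundary value without overlapping.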

The Architecture Mindset

The key to building systems that scale isn't just choosing the right tools—it's thinking about how data will grow and how queries will perform at scale from the very beginning. Every design decision should be evaluated not just for current needs, but for how it will behave when the dataset is 10x or 100x larger.

This mindset shift—from building for today to building for tomorrow's scale—is what separates systems that gracefully handle growth from those that require complete rewrites. The 900,000 events per day running smoothly today are a testament to getting those early decisions right.
